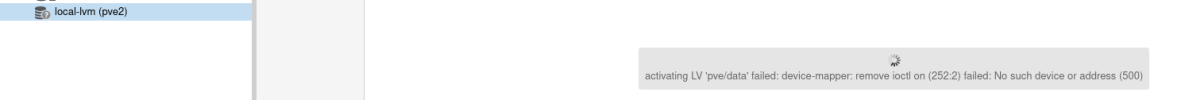

Hello. I created and started three Proxmox virtual machines in VMware Workstation Player, and everything went well. I then joined them into a cluster, and the cluster was created successfully. However, the local-lvm storage icon on the 2nd and 3rd nodes now shows a question mark. I can't do anything with that storage on those nodes; it appears to be inactive or disabled. Before the nodes joined the cluster, everything was normal.

So, what does this mean, and how do I fix it?

Thanks.
