Latest activity

-

Michiel_1afa replied to the thread create a VLAN without having a physical switch or changing anything in the router. You often do not have to, but I would consider it good practice to do so anyway, so you have a clear indication of which LAN these VMs are on.

-

EllerholdAG replied to the thread ZFS Pool full even though VM limited to 50% of the pools size. This is - at least - surprising to me. You have a 500 GB disk on a zpool with 900 GB capacity. You're using no snapshots, which could take up additional space. So what's eating the 399 GB here? Write amplification refers to each little change in...

-

IsThisThingOn replied to the thread ZFS storage is very full, we would like to increase the space but.... Just for fun, we can do the math for your setup and what you should get with 128k. Let's look at a 132k write. The first block is an incompressible 128k write. The second is also a 128k block, but with only 4k of data; the rest is zeros. LZ4 can compress that...

-

UdoB reacted to Impact's post in the thread Web UI reports critical memory usage, just caching with Like.

I'd enjoy a more detailed RAM usage statistic there too. It could look similar to this one for VMs or, better, a horizontally stacked bar. The issue with considering ARC as being unused is that it's not usually freed fast enough to be useful...

-

UdoB reacted to fstrankowski's post in the thread Web UI reports critical memory usage, just caching with Like.

Looks totally fine to me. You're using roughly 60GB without L2ARC, the rest is L2ARC usage (~ 170GB) + some remaining free GB (~ 20). I see absolutely nothing wrong here. The graphs have different colors. If you hover over these graphs it will tell...

-

Johannes S reacted to fabian's post in the thread Proxmox cloning corrupts the target image - extensive analysis included with Like.

this sounds like an instance of "hole" mishandling, which is often caused by devices lying about their support for discarding. changing the block size might just cause the data to be aligned differently by the guest OS and thus avoid the issue...

-

Johannes S reacted to IsThisThingOn's post in the thread ZFS storage is very full, we would like to increase the space but... with Like.

That question is an oversimplification ;) But most likely, it would rise, of course. Since a block compressed from, let's say, 128k down to 100k can make use of wider stripes and fit the pool geometry more easily, padding has less impact, and so on. But...

-

UdoB reacted to ThoSo's post in the thread Backup von VM und Container auf mein NAS. How to? with Like.

No, not a fault in the system, more a mental slip on the user's side ;-) Windows and Samba always follow the scheme \\server\share. There are naming restrictions, such as length or permitted characters. Windows doesn't care whether you use upper- or lower...

-

IsThisThingOn replied to the thread ZFS storage is very full, we would like to increase the space but.... That question is an oversimplification ;) But most likely, it would rise, of course. Since a block compressed from, let's say, 128k down to 100k can make use of wider stripes and fit the pool geometry more easily, padding has less impact, and so on. But...

-

Geg0r replied to the thread VM CPU issues: watchdog: BUG: soft lockup - CPU#7 stuck for 22s!. I have had exactly the same problems for a few days... Not all VMs are affected, but some are (randomly), and resetting is the only way to get them back. Proxmox 9.1.2 Linux proxmox 6.17.2-2-pve #1 SMP PREEMPT_DYNAMIC PMX 6.17.2-2...

-

Eduardo Taboada replied to the thread I can't access the web interface.. 1) Is the bridge the correct one? 2) Can you ping the LXC? 3) Try a pct enter LXC-number and show the listening ports (ss -nltp).

-

Johannes S reacted to alexskysilk's post in the thread ZFS storage is very full, we would like to increase the space but... with Like.

Most likely yes. It took me a second to read the above discussion when I realized they're no longer speaking in terms of your question. The answer to your question depends on other factors you didn't mention, namely: 1. are you trying to...

-

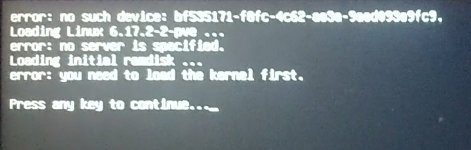

shodan replied to the thread Making root volume live in the lvm thin pool ?. Result, exact same error

-

WolfLink115 replied to the thread Host system crash after VM power off/Reboot. Is this an issue that legit no one has had? I am confused-

-

cracked replied to the thread iGPU passthrough on Proxmox VE 9.1.1 – VM freezes on boot. LongQT-sea, thank you very much. I tried this solution. It didn't work for me. I don't understand why.

-

fireon reacted to fabian's post in the thread [SOLVED] Super slow, timeout, and VM stuck while backing up, after updated to PVE 9.1.1 and PBS 4.0.20 with Like.

testing whether the https://kernel.ubuntu.com/mainline/v6.16/ and https://kernel.ubuntu.com/mainline/v6.16.12/ kernels are showing the issue on your system(s) would be highly appreciated to narrow down the cause. we still cannot reproduce any...

-

shodan replied to the thread Making root volume live in the lvm thin pool ?. Unfortunately, that apparently did not work. Here is what booting looks like. So I rebooted into the installer and chrooted again into the system: root@proxmox:/# mkdir /mnt/root root@proxmox:/# mkdir /mnt/boot root@proxmox:/# mkdir...

-

therokh reacted to BloodyIron's post in the thread Default settings of containers and virtual machines with Like.

This aspect SHOULD NOT rely on a third party from the Proxmox group itself. Those scripts are well intentioned, but are NOT sufficiently vetted for security functions. Proxmox VE clusters are used in many sensitive environments and this can lead...

-

shodan replied to the thread Making root volume live in the lvm thin pool ?. Continuing attempt to make root mostly a thin volume: root@proxmox:/# lvcreate -L 512M -n boot pve Logical volume "boot" created. WARNING: This metadata update is NOT backed up. root@proxmox:/# mkfs.ext4 /dev/pve/boot mke2fs 1.47.2...

-

pugglewuggle posted the thread Convert BIOS (Legacy Boot) Proxmox PVE Host (VM) to UEFI Boot in Proxmox VE: Installation and configuration. Is there a tutorial for this anywhere? I see several posts - mostly this one https://forum.proxmox.com/threads/how-to-migrate-from-legacy-grub-to-uefi-boot-systemd-boot.120531/ - about how to do this with a ZFS root file system but nothing for...