Hello everybody,

I have a zvol with a volsize of 3.91T. This zvol is attached as a data disk to a VM that works as a file server (SAMBA/NFS). Here are the details of `df -hT` from inside the VM:

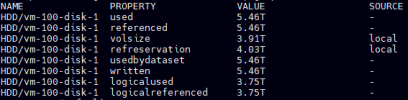

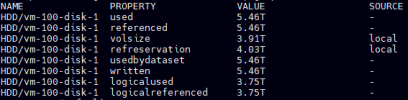

2.4T used out of 3.9T. The zvol has volblocksize=8K and sits on a pool with a single four-disk RAIDz-1 vdev and ashift=12 (4 KiB sectors). I have been reading about parity and padding, and my understanding is that each 8K block needs 2 sectors for data, 1 for parity and 1 for padding, because the total number of allocated sectors must be a multiple of p+1 (2 for RAIDz-1). That gives an efficiency of 50% (2 of 4 sectors), versus the 75% of an ideal four-disk RAIDz-1, so the zvol should use 1.5 times (0.75/0.5) as much space as the ideal. That should be 3.6T (2.4T * 1.5). But here is what `zfs get` tells me:

Filtered to only the space-related fields:

Why 5.46T? That's about 2.3 times the 2.4T used inside the VM. And why is logicalused 3.75? I can't make sense of it... Any help?
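For reference, this is the padding arithmetic from my reasoning above written out as a small Python sketch. It assumes the simplified allocation rule I described (one parity sector per stripe row of data sectors, then padding the total up to a multiple of p+1) and ignores compression and metadata, so it only reproduces my expected 3.6T, not what ZFS actually reports. The function and variable names are my own.

```python
from fractions import Fraction


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floating point."""
    return -(-a // b)


def raidz_sectors(block_bytes: int, ashift: int, ndisks: int, nparity: int) -> tuple[int, int]:
    """Return (data_sectors, allocated_sectors) for one block on a RAIDz vdev.

    Simplified rule (assumption): one parity sector per stripe row of
    (ndisks - nparity) data sectors, then pad the total up to a multiple
    of (nparity + 1). Compression and metadata are ignored.
    """
    sector = 1 << ashift                       # ashift=12 -> 4096-byte sectors
    data = ceil_div(block_bytes, sector)       # 8K block -> 2 data sectors
    rows = ceil_div(data, ndisks - nparity)    # stripe rows needed for the data
    total = data + rows * nparity              # data + parity sectors
    total = ceil_div(total, nparity + 1) * (nparity + 1)  # padding sectors
    return data, total


ndisks, nparity = 4, 1
data, total = raidz_sectors(8 * 1024, ashift=12, ndisks=ndisks, nparity=nparity)
actual = Fraction(data, total)                      # efficiency of an 8K block
ideal = Fraction(ndisks - nparity, ndisks)          # ideal four-disk RAIDz-1 efficiency
overhead = ideal / actual                           # factor relative to the ideal
guest_used_tb = 2.4                                 # from df -hT inside the VM
expected_used_tb = guest_used_tb * float(overhead)  # what I expected zfs to report

print(data, total, actual, ideal, overhead, round(expected_used_tb, 2))
```

Running it prints `2 4 1/2 3/4 3/2 3.6`, i.e. 2 data sectors out of 4 allocated, 50% vs 75% efficiency, a 1.5x factor and about 3.6T expected, which is still far from the 5.46T reported.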

Thanks for your time,

Héctor
