The import should only happen on the host that "owns" the drives. Since you gave the drives to the VM, only the VM should manage them.

Checking SMART info and such on the node is fine, of course, but if you try to import/mount the pool on both sides you will very likely get into trouble.

My suggestion is to make sure that

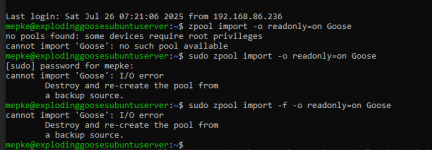

Goose and StoragePool are not listed on the node's zpool list and then try to import Goose inside the VM via the mentioned commands.For the future the best practice when dealing with ZFS is to pass the whole HBA to the VM via PCI(e) passthrough. Or as mentioned earlier to let the node manage the ZFS pool and use ext4 or something inside the VM on a virtual disk.
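As a rough sketch of the check-then-import suggestion, something like the following could work. The pool names Goose and StoragePool come from this thread; the function names (pool_listed, check_node, import_in_vm) are made up for illustration, and the script assumes it is run as root with the ZFS tools installed on both sides.

```shell
#!/bin/sh
# Sketch only: helper names are hypothetical, pool names are from the thread.

# pool_listed NAME: succeeds if NAME is one of the pool names read from stdin.
pool_listed() {
    grep -Fxq -- "$1"
}

# Run on the node: neither pool should be imported here.
check_node() {
    for pool in Goose StoragePool; do
        # -H drops the header line, -o name prints only the pool names
        if zpool list -H -o name | pool_listed "$pool"; then
            echo "$pool is still imported on the node; export it first: zpool export $pool"
        else
            echo "$pool is not imported on the node"
        fi
    done
}

# Run inside the VM: show what can be imported, then import Goose.
import_in_vm() {
    zpool import        # with no arguments, only lists importable pools
    zpool import Goose
}
```

Call check_node on the node first, and only run import_in_vm inside the VM once neither pool shows up on the node. Exporting a pool on the node assumes nothing on the node is still using it.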
