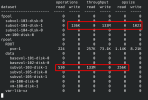

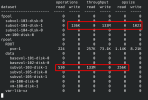

Hi, I'm migrating some large LXC containers from one ZFS array to another and I'm noticing some odd behavior. It seems that whatever handles LXC migrations is writing the data in 1k chunks. Credit to ZFS for managing nearly 140k 1kB IOPS on spinning rust, but this seems a little sub-optimal. I'm using ioztat, a really neat ZFS IO monitoring tool, to track per-dataset IO activity.
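For a sense of scale, here is a rough back-of-the-envelope sketch of the throughput those numbers imply. It assumes "1kB" means 1024 bytes and that the ~140k IOPS figure is sustained; the function name is just something made up for illustration, not part of ioztat.

```python
def throughput_mib_s(iops: float, io_size_bytes: int) -> float:
    """Throughput in MiB/s implied by a given IOPS rate and IO size."""
    return iops * io_size_bytes / (1024 * 1024)


def iops_needed(target_bytes_per_s: float, io_size_bytes: int) -> float:
    """IOPS required to reach a target throughput at a given IO size."""
    return target_bytes_per_s / io_size_bytes


# Assumed figures: ~140k IOPS at 1 KiB per IO (read off the monitoring output).
migration_mib_s = throughput_mib_s(140_000, 1024)

# Assumed target: 2 GiB/s, roughly what sequential reads reach in the container.
iops_for_2gib = iops_needed(2 * 1024**3, 1024)

print(f"Migration throughput at 1 KiB IOs: {migration_mib_s:.1f} MiB/s")
print(f"IOPS needed for 2 GiB/s at 1 KiB IOs: {iops_for_2gib:,.0f}")
```

So even at that IOPS rate the migration would be moving well under 150 MiB/s, and matching sequential speed at this IO size would take over two million IOPS.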

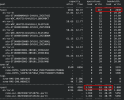

ZFS does seem to be coalescing all these 1k IOs into substantially larger chunks by the time the data actually hits the drives, so the disks aren't being flooded with small IO (disk utilization is almost nothing, even for the HDDs).

Doing a normal (20GB) file copy seems fine so it doesn't look like a hardware issue either.

Anyone have any idea what might be going on here and if there's anything I can do about it? I'm sure I've done LXC migrations on ZFS before and had it scream pretty much full disk speed. It's not a showstopper as everything inside the container seems fine and easily hits 2GB/s when doing sequential reads, so it's more a mild annoyance than a major limitation. Still, any ideas what might be going on?

Cheers

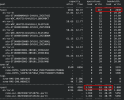
