ZFS: Which setup gives the highest IOPS?

- Thread starter djsami

- Start date


Raidz is like RAID5 and raidz2 is like RAID6. Both are bad if you want high IOPS, because a raidz vdev only delivers slightly less than the IOPS of a single SSD. The best IOPS you would get with a stripe (aka RAID0), which would give you 3x the IOPS, but then you won't get any redundancy or bit rot protection, and if a single SSD dies all data is lost. If you want redundancy, the best IOPS would come from a striped mirror (aka RAID10) with 2x the IOPS, but for that you need 4 SSDs.
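To make the three layouts concrete, here is a rough sketch of the matching zpool create commands. The pool name "tank" and the DISK1..DISK4 paths are placeholders, not names from this thread, and the script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print a command; execute it only when DRY_RUN=0 is set.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-1}" = 1 ] || "$@"
}

# Placeholder device paths (assumption): substitute your own by-id names.
D1=/dev/disk/by-id/DISK1
D2=/dev/disk/by-id/DISK2
D3=/dev/disk/by-id/DISK3
D4=/dev/disk/by-id/DISK4

# "tank" is a hypothetical pool name. Pick exactly one layout.
create_pool() {
    case "$1" in
        # Stripe (raid0): highest IOPS, no redundancy.
        stripe) run zpool create -o ashift=12 tank "$D1" "$D2" "$D3" ;;
        # raidz (raid5-like): survives one failure, low IOPS.
        raidz) run zpool create -o ashift=12 tank raidz "$D1" "$D2" "$D3" ;;
        # Striped mirror (raid10-like): needs four disks.
        striped-mirror) run zpool create -o ashift=12 tank mirror "$D1" "$D2" mirror "$D3" "$D4" ;;
        *) echo "unknown layout: $1" >&2; return 1 ;;
    esac
}

create_pool stripe
```

Review the printed command first; running the script again with DRY_RUN=0 would actually create the pool.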


Which option is RAID 0? Single? Mirror?

Not sure about the UI. I always create my pools by CLI. I guess "Single" means a stripe/RAID0. Mirror is like RAID1. You could run zpool status after creation to check it.

And ZFS also has some types of special devices. You could, for example, add a very fast SSD as a SLOG to increase the IOPS for sync writes, or add a "special device" vdev that stores your metadata, so data and metadata are stored on different SSDs instead of both on the same ones. That should in theory also give more IOPS, because each SSD needs to read/write less.
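As a rough sketch of those steps on the CLI: the pool name "tank" and the FAST-SSD / META-SSD device paths below are placeholders, and the script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print a command; execute it only when DRY_RUN=0 is set.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-1}" = 1 ] || "$@"
}

POOL=tank   # hypothetical pool name

# Show the vdev layout: disks listed directly under the pool are striped,
# disks grouped under "mirror-0" or "raidz1-0" are redundant.
run zpool status "$POOL"

# Add a fast SSD as SLOG (separate intent log) for sync writes.
run zpool add "$POOL" log /dev/disk/by-id/FAST-SSD

# Add a metadata vdev. It is mirrored here because losing a special vdev
# loses the pool; zpool may refuse mismatched redundancy unless forced with -f.
run zpool add "$POOL" special mirror /dev/disk/by-id/META-SSD1 /dev/disk/by-id/META-SSD2
```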

I used LVM-thin before, but Windows opens very slowly, so I want to switch from it to ZFS for higher IOPS.

ZFS is better than LVM-thin, I guess.

The original question was: "Hello, I have 3 NVMe disks. How can I get the fastest setup with the highest IOPS? ..."

Best config for your setup is Linux mdadm RAID10 F2.

Capacity: 1.5x drives, Read: 3x drives, Write: up to 1.5x drives (every block is stored twice across the three drives), plus you can lose 1 drive and you're still online.

There is also RAID10 F3:

Capacity: 1x drive, Read: 3x drives, Write: 1x drive (every block is stored three times), plus you can lose 2 drives and you're still online.

For highest IOPS: Linux mdadm RAID0.

Capacity: 3x drives, Read: 3x drives, Write: 3x drives, but if you lose 1 drive you lose all data on all 3 drives.

On top of it you can put whatever you want.
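As a rule of thumb, usable capacity is drive count times drive size divided by the number of copies kept of each block. A minimal sketch of that arithmetic, assuming three equal drives of roughly 745 GiB (the size is rounded from the disks mentioned in this thread; the function name is made up for illustration):

```shell
#!/bin/sh
# usable_gib DRIVES COPIES SIZE_GIB
# Prints the usable capacity in GiB for DRIVES equal disks that keep
# COPIES copies of every block (1 = RAID0, 2 = RAID10 F2, 3 = RAID10 F3).
usable_gib() {
    awk -v n="$1" -v c="$2" -v s="$3" 'BEGIN { printf "%.1f\n", n * s / c }'
}

usable_gib 3 1 745   # RAID0     -> 2235.0
usable_gib 3 2 745   # RAID10 F2 -> 1117.5
usable_gib 3 3 745   # RAID10 F3 -> 745.0
```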

Best regards,

Emilien


I'll go with ZFS RAID 0.

I also use Proxmox Backup (50 TB), so it doesn't matter if the data is gone.

Can I do it like this?

Disk /dev/sdb: 745.06 GiB,

Disk /dev/sdd: 745.06 GiB,

zpool create -f -o ashift=12 Nvem-SSD /dev/sdb /dev/sdd

Yep. If you want, you can also use all three drives instead of only two. But I wouldn't use "/dev/sdb" and so on; use IDs instead. That makes it easier to find and replace the right drive if one fails.

You can find the IDs of your sdb and sdd with the command

ls -l /dev/disk/by-id/

and then use something like this:

zpool create -f -o ashift=12 Nvme-SSD /dev/disk/by-id/nvme-IdOfYourSdb /dev/disk/by-id/nvme-IdOfYourSdd
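If the ls output is long, a small helper can list only the links that point at one device. This is just a sketch; by_id_links is a made-up name, and the optional second argument exists only so the function can be tried against a test directory.

```shell
#!/bin/sh
# by_id_links DEVICE [LINK_DIR]
# Prints every symlink in LINK_DIR (default: /dev/disk/by-id) that
# resolves to the same file as DEVICE.
by_id_links() {
    target=$(readlink -f "$1")
    dir=${2:-/dev/disk/by-id}
    for link in "$dir"/*; do
        # Skip anything that is not a symlink (also covers an empty directory).
        [ -L "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$target" ]; then
            printf '%s\n' "$link"
        fi
    done
}

# Example: by_id_links /dev/sdb
```

Any of the printed paths can then be passed to zpool create in place of /dev/sdb.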


lrwxrwxrwx 1 root root 9 Jan 29 17:01 scsi-3600508e07e7261770206638b08998303 -> ../../sdd

lrwxrwxrwx 1 root root 9 Jan 29 18:36 scsi-3600508e07e726177e059c283dc64e40b -> ../../sdc

lrwxrwxrwx 1 root root 9 Jan 29 18:11 scsi-3600508e07e726177e44cdc856251e205 -> ../../sdb

lrwxrwxrwx 1 root root 9 Jan 29 17:01 wwn-0x600508e07e7261770206638b08998303 -> ../../sdd

lrwxrwxrwx 1 root root 9 Jan 29 18:36 wwn-0x600508e07e726177e059c283dc64e40b -> ../../sdc

lrwxrwxrwx 1 root root 9 Jan 29 18:11 wwn-0x600508e07e726177e44cdc856251e205 -> ../../sdb

Which one should I use?

Both will work. Either

zpool create -f -o ashift=12 Nvme-SSD /dev/disk/by-id/scsi-3600508e07e726177e44cdc856251e205 /dev/disk/by-id/scsi-3600508e07e7261770206638b08998303

or

zpool create -f -o ashift=12 Nvme-SSD /dev/disk/by-id/wwn-0x600508e07e726177e44cdc856251e205 /dev/disk/by-id/wwn-0x600508e07e7261770206638b08998303

if you want sdb + sdd.

Will the pool appear in Proxmox after this process?

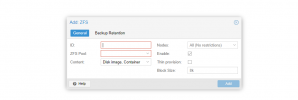

If you create your pool manually, you will need to add it as a storage first. In the WebUI, go to Datacenter -> Storage -> Add -> ZFS and tell it to use your pool "Nvme-SSD" with an 8K block size and "thin provisioning" enabled. You can choose whatever ID you like.
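The same step should also be possible from the shell with pvesm. This is a sketch, not a tested recipe: the storage ID "nvme-ssd" and the content types are assumptions, and the script only prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print a command; execute it only when DRY_RUN=0 is set.
run() {
    echo "+ $*"
    [ "${DRY_RUN:-1}" = 1 ] || "$@"
}

STORAGE_ID=nvme-ssd   # hypothetical ID, pick any name you like
POOL=Nvme-SSD         # pool name used in the zpool create command

# --sparse 1 corresponds to "thin provisioning" in the WebUI;
# images,rootdir allows VM disks and container volumes (assumed choice).
run pvesm add zfspool "$STORAGE_ID" --pool "$POOL" --blocksize 8k --sparse 1 --content images,rootdir

# List the configured storages to confirm the new entry shows up.
run pvesm status
```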
