Hi all.

I have Proxmox PVE 9.0.10 and created a ZFS pool using one disk connected via SATA. Proxmox is running on bare metal.

The Pool was then passed through to an LXC as a Mount Point. Everything was fine until I a power cut and now the pool is missing. I've done some googling and the usual things aren't working.

The status currently shows up as "unknown" in the UI.

The disk is correct when I list by id:

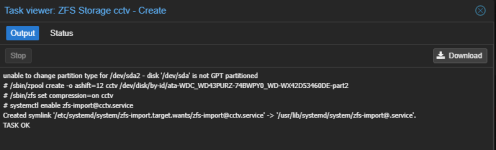

The services are not all started, which I've seen in many posts online.

I have a (fairly small) cache file from when the pool was created

Importing fails too

Any ideas on what to try next are appreciated!

I have Proxmox PVE 9.0.10 and created a ZFS pool using one disk connected via SATA. Proxmox is running on bare metal.

The pool was then passed through to an LXC as a mount point. Everything was fine until I had a power cut, and now the pool is missing. I've done some googling, and the usual fixes aren't working.

The status currently shows up as "unknown" in the UI.
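The UI's storage status can also be checked from the host's shell. This is a minimal sketch, assuming it is run as root on the Proxmox host. `pvesm status` lists each configured storage with its state, and `/etc/pve/storage.cfg` holds the storage definitions, where a ZFS pool storage names its pool on a `pool` line. `show_pve_storage` is a hypothetical helper name used only here.

```shell
# Hypothetical helper: print Proxmox's own view of the configured storages.
show_pve_storage() {
    if command -v pvesm >/dev/null 2>&1; then
        # One line per storage: name, type, status and space figures.
        pvesm status
        # Storage definitions; a ZFS pool storage has a "pool <name>" line.
        cat /etc/pve/storage.cfg
    else
        echo "pvesm not found: run this on the Proxmox host"
    fi
}

show_pve_storage
```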

`lsblk` shows the disk (with no partitions, but based on my reading this is normal):

```
root@proxmox:~# lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINTS
sda      8:0    0  3.6T  0 disk
```

The disk is correct when I list it by ID:

```
ls -l /dev/disk/by-id/ata-WDC_WD43PURZ-74BWPY0_WD-WX42D53460DE
lrwxrwxrwx 1 root root 9 Nov 4 14:33 /dev/disk/by-id/ata-WDC_WD43PURZ-74BWPY0_WD-WX42D53460DE -> ../../sda
```

`zpool status -v` and `zpool list` both say no pools available.

Not all of the ZFS import services are running, which I've seen mentioned in many posts online:

```
# systemctl status zfs-import-cache.service zfs-import-scan.service zfs-import.target zfs-import.service
○ zfs-import-cache.service - Import ZFS pools by cache file
     Loaded: loaded (/usr/lib/systemd/system/zfs-import-cache.service; disabled; preset: enabled)
     Active: inactive (dead)
       Docs: man:zpool(8)

○ zfs-import-scan.service - Import ZFS pools by device scanning
     Loaded: loaded (/usr/lib/systemd/system/zfs-import-scan.service; disabled; preset: disabled)
     Active: inactive (dead)
       Docs: man:zpool(8)

● zfs-import.target - ZFS pool import target
     Loaded: loaded (/usr/lib/systemd/system/zfs-import.target; enabled; preset: enabled)
     Active: active since Tue 2025-11-04 14:33:18 GMT; 6h ago
 Invocation: 644d990405d940ba9d852b5971d77a10

Nov 04 14:33:18 proxmox systemd[1]: Reached target zfs-import.target - ZFS pool import target.

○ zfs-import.service
     Loaded: masked (Reason: Unit zfs-import.service is masked.)
     Active: inactive (dead)
```

I have a (fairly small) cache file from when the pool was created:

```
# ls -lh /etc/zfs/zpool.cache
-rw-r--r-- 1 root root 1.5K Oct 14 20:48 /etc/zfs/zpool.cache
```

Importing fails too:

```
# zpool import -d /dev/disk/by-id/ata-WDC_WD43PURZ-74BWPY0_WD-WX42D53460DE cctv
cannot import 'cctv': no such pool available
```

Any ideas on what to try next are appreciated!
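A few read-only checks could help narrow this down. They are written here as a small shell sketch that has not been run on this system. The device path and cache file location come from the post above, and `run_if_present` is a hypothetical helper. One point that may matter: when `zpool create` is given a whole disk, OpenZFS on Linux normally partitions it (a large data partition plus a small partition 9), so `sda` showing no partitions at all may be worth a closer look. The script does four things:

- prints the partition-table type
- dumps any ZFS labels with `zdb -l`
- shows the configuration stored in the cache file with `zdb -C -U`
- lists, without importing, any pools `zpool import -d` can find under `/dev/disk/by-id`

```shell
# Read-only checks for the missing pool; nothing below writes to the disk.
# Assumption: the device path and cache file are the ones from the post above.
DISK=/dev/disk/by-id/ata-WDC_WD43PURZ-74BWPY0_WD-WX42D53460DE
CACHE=/etc/zfs/zpool.cache

# Hypothetical helper: run a command only if it is installed, so the script
# still finishes (with a message) on a machine without the ZFS tools.
run_if_present() {
    if command -v "$1" >/dev/null 2>&1; then
        "$@"
    else
        echo "skipping: $1 not installed"
    fi
}

if [ -b "$DISK" ]; then
    # Partition-table type and filesystem signature, if any, that lsblk detects.
    run_if_present lsblk -o NAME,SIZE,PTTYPE,FSTYPE "$DISK"
    # Dump the ZFS vdev labels at the raw disk's label offsets. If the pool
    # lived on a partition that is no longer in the partition table, the
    # labels sit at that partition's offset instead, so a failure here is
    # not conclusive on its own.
    run_if_present zdb -l "$DISK"
else
    echo "skipping: $DISK is not a block device here"
fi

if [ -f "$CACHE" ]; then
    # Print the pool configuration recorded in the cache file, including the
    # vdev path and GUIDs the pool expects to find.
    run_if_present zdb -C -U "$CACHE"
fi

# List (without importing) any pools found by scanning every device link
# under /dev/disk/by-id.
run_if_present zpool import -d /dev/disk/by-id
```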