Hello,

I’m posting here because we are seeing some strange figures on our Proxmox cluster.

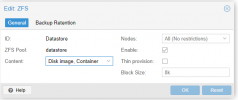

We use 4 identical OVH servers in a cluster. The servers have been in use for 10 months. The cluster is composed of 2 “main” servers and 2 “replication” servers. All the servers have been upgraded from Proxmox v6 to v7. Replication runs every 2 hours. A daily backup with Proxmox Backup Server runs every night at 23:00.

We don’t understand why, with thin provisioning disabled (it was never enabled), the free space on our ZFS storage keeps decreasing. This happens without any interaction on our side: no snapshots, no VM creation.

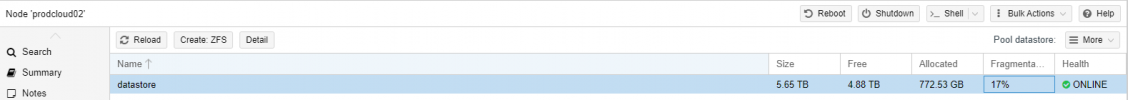

We also don’t understand why the figures for used and free space on the ZFS storage conflict with each other. The sum of the VM disks (1.6 TB) matches neither the Usage (2.5 TB) nor the ZFS Allocated value (772 GB). Screenshots of the Proxmox web interface are attached.

Do you have any hints as to why the free space on the ZFS storage is shrinking?

Can you tell us which remaining-space value we should rely on?

Thanks in advance,

Raphael TRAINI
