I'm running Proxmox 6.2-11 with 256GB ECC RAM and the following raidz3 pool:

      pool: rpool
     state: ONLINE
      scan: scrub repaired 0B in 0 days 15:25:14 with 0 errors on Sun Jan 10 15:49:29 2021
    config:

        NAME                                         STATE     READ WRITE CKSUM
        rpool                                        ONLINE       0     0     0
          raidz3-0                                   ONLINE       0     0     0
            ata-HGST_HUS726T6TALE6L4_V8JDUHAR-part4  ONLINE       0     0     0
            ata-HGST_HUS726T6TALE6L4_V8JDWWAR-part4  ONLINE       0     0     0
            ata-HGST_HUS726T6TALE6L4_V8JB5AYR-part4  ONLINE       0     0     0
            ata-HGST_HUS726T6TALE6L4_V8JBYYTR-part4  ONLINE       0     0     0
            ata-ST6000NM0115-1YZ110_ZAD9MHXH-part4   ONLINE       0     0     0
            ata-ST6000NM0115-1YZ110_ZAD9M248-part4   ONLINE       0     0     0
            ata-ST6000NM0115-1YZ110_ZAD9MQ9E-part4   ONLINE       0     0     0
            ata-ST6000NM0115-1YZ110_ZAD9MN5A-part4   ONLINE       0     0     0

    errors: No known data errors

    NAME    SIZE  ALLOC   FREE  CKPOINT  EXPANDSZ   FRAG    CAP  DEDUP    HEALTH  ALTROOT
    rpool  43.6T  9.71T  33.9T        -         -    10%    22%  1.00x    ONLINE  -

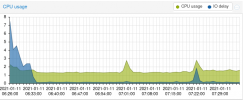

For the past few weeks I have noticed a marked increase in IO wait on the Proxmox host (2-3%), with txg_sync peaking at 95-99.99% IO in iotop. On one of the guest instances (Debian), jbd2/vda1-8 also sits at 99.99% IO most of the time.

Plenty of RAM is available on both the guest and the host. What could be causing this?
