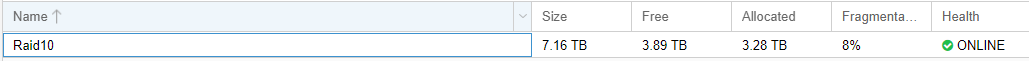

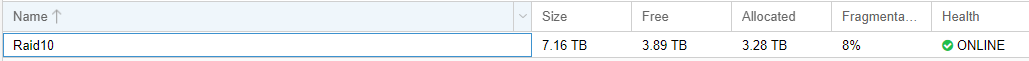

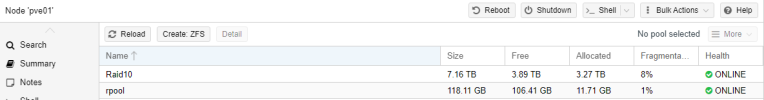

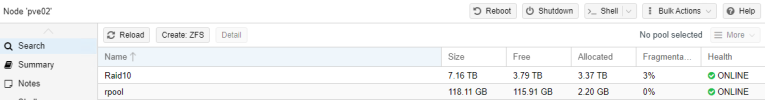

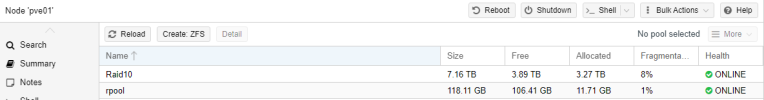

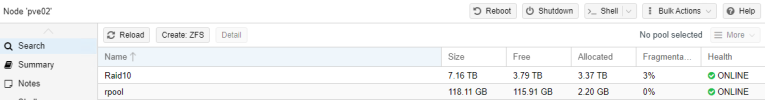

I have a ZFS RAID volume on each of my hosts, and when I look at the node's ZFS window I see the correct utilization. To be clear, each host has 12 drives configured as RAID 10 using ZFS. I gave the volumes the same name on every host because, from what I saw, that is a requirement for replication to function in a cluster.

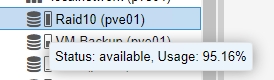

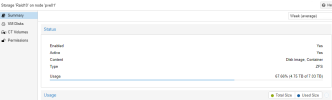

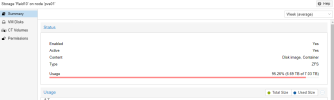

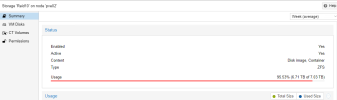

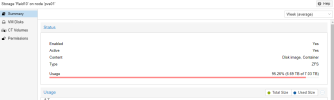

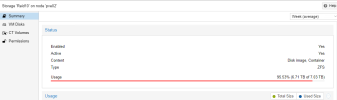

However, if I look at the storage object, it shows almost double the usage, which doesn't make any sense.

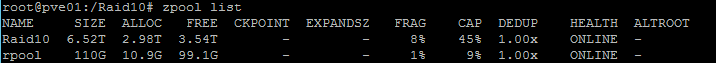

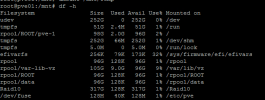

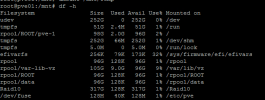

I connected to the host over SSH and ran `df -h` to see if I could figure out why it's reporting this way, but `df -h` does not show the RAID 10 volume properly.
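For anyone wanting to dig into the same numbers: `df` only reports mounted filesystems, so ZFS's own accounting is probably a better place to look. Below is a minimal sketch, not a confirmed diagnosis, that reads the per-dataset space breakdown from `zfs list -Hp` and splits usage into data, snapshots, reservations and children. The pool name `tank` and the helper names (`parse_zfs_list`, `read_live`, `report`) are placeholders I made up for illustration; substitute your own pool name.

```python
import subprocess

# Space-accounting properties that ZFS tracks per dataset.
FIELDS = [
    "name", "used", "available", "usedbydataset",
    "usedbysnapshots", "usedbyrefreservation", "usedbychildren",
]


def parse_zfs_list(text):
    """Parse tab-separated `zfs list -Hp` output into a list of dicts."""
    rows = []
    for line in text.splitlines():
        if not line.strip():
            continue
        parts = line.split("\t")
        row = {"name": parts[0]}
        for key, value in zip(FIELDS[1:], parts[1:]):
            # "-" means the property does not apply; count it as 0 bytes.
            row[key] = int(value) if value.isdigit() else 0
        rows.append(row)
    return rows


def read_live(pool):
    """Run `zfs list` on the host for one pool (placeholder name) and its children."""
    cmd = ["zfs", "list", "-Hp", "-r", "-o", ",".join(FIELDS), pool]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


def report(rows):
    """Return one summary line per dataset, with sizes in GiB."""
    gib = 2 ** 30
    lines = []
    for r in rows:
        lines.append(
            "{}: used={:.1f} data={:.1f} snapshots={:.1f} refreservation={:.1f}".format(
                r["name"],
                r["used"] / gib,
                r["usedbydataset"] / gib,
                r["usedbysnapshots"] / gib,
                r["usedbyrefreservation"] / gib,
            )
        )
    return lines
```

On the host this would be called as `print("\n".join(report(parse_zfs_list(read_live("tank")))))`. If the snapshot or refreservation columns turn out to be large, that would be one possible place the extra usage is coming from, but that is an assumption to check rather than a conclusion.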

Can anyone tell me what's going on and how to fix it? Backups are not going to this volume, though I do have replication set up to replicate the VMs from one host to another. I also checked and did not see any snapshots on any of the VMs. I'm hoping this is just some kind of statistics error that can be easily fixed.

