Hi everybody,

On a PBS, the backup storage, which is on ZFS, is completely full, so the GC job fails:

All physical ports in the server are in use, so we can't simply add more HDDs to extend the pool.

In theory we only need a few megabytes of free space so the GC job can do its work. We even tried moving one or two directories under .chunks elsewhere (to move them back later, of course), but the storage still shows as completely full.

What can we do to get a little more free space in the ZFS?

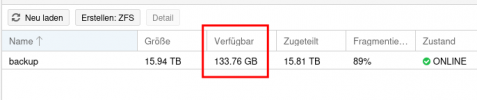

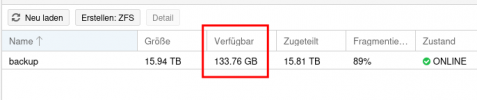

And we are wondering what this column is about:

It says "133.76GB" available, but where? And how could we make it usable for the backup pool?

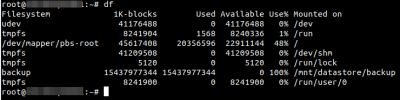

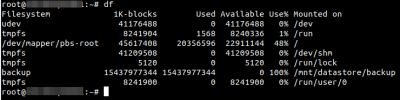

This is what "df" says:

Any hints? Thanks in advance!
