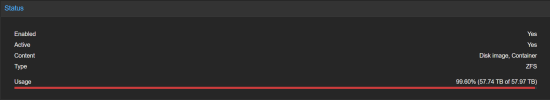

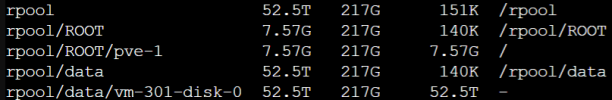

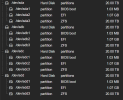

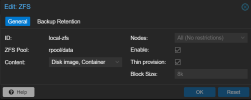

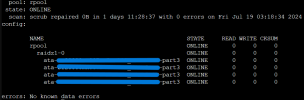

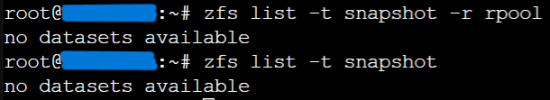

I have a PVE host that is currently reporting that "local-zfs" is nearly full. There is only one guest with one virtual disk stored in this pool; the virtual disk was originally 50TB, and I have since reduced it to 43TB using:

Bash:

zfs set volsize=43T rpool/data/vm-301-disk-0

Has anyone experienced this before, or can anyone share some advice on how to fix it?