Hi,

I've had some interesting issues this last week, things now mostly ok but I want to ask advice on this-

Proxmox installed on a ZFS mirror

Had RAM issues and Proxmox now will not boot

Can't scrub the zpool because it was created on another system.

I've installed Proxmox on another drive, but the zpool won't import because

and it causes a KP when I try to 'zpool import -f' as suggested-

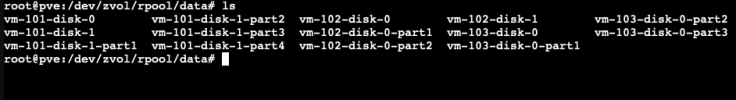

Quite happy to blow it away and start again, but I would like to mount at least one of the drives to recover a VM image

But-

Can I either-

1. remove the boot partitions from the ZFS mirror disks, fix the raid and mount the drive?

or

2. re-install Proxmox on the ZFS mirror to fix the boot issue, without wiping the ZFS mirror disks?

It just seems dumb that I can't mount a drive and pull a file off it...

I've had some interesting issues this last week. Things are now mostly OK, but I'd like some advice on this:

Proxmox is installed on a ZFS mirror.

I had RAM issues, and Proxmox now will not boot.

I can't scrub the zpool because it was created on another system.

I've installed Proxmox on another drive, but the zpool won't import:

    root@pve02:~# zpool import -d /dev rpool
    cannot import 'rpool': pool was previously in use from another system.
    Last accessed by (none) (hostid=fd84ad1) at Mon May 9 20:09:38 2022
    The pool can be imported, use 'zpool import -f' to import the pool.

It also causes a kernel panic (KP) when I try 'zpool import -f' as suggested:

    Message from syslogd@pve02 at May 12 10:24:01 ...
    kernel:[ 743.768395] PANIC: zfs: adding existent segment to range tree (offset=9f9731000 size=be001000)

I'm quite happy to blow it away and start again, but I would like to mount at least one of the drives to recover a VM image.

But a read-only import fails the same way:

    root@pve02:~# zpool import -o readonly=on rpool
    cannot import 'rpool': pool was previously in use from another system.
    Last accessed by (none) (hostid=fd84ad1) at Mon May 9 20:09:38 2022
    The pool can be imported, use 'zpool import -f' to import the pool.

Can I either:

1. remove the boot partitions from the ZFS mirror disks, fix the RAID, and mount the drive?

or

2. re-install Proxmox on the ZFS mirror to fix the boot issue, without wiping the ZFS mirror disks?

It just seems dumb that I can't mount a drive and pull a file off it...