Thanks, the first link is the one I have been reading. There is still a lot to understand about this, e.g.:

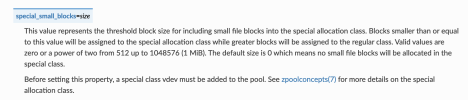

There is also no info in the Proxmox doco about what happens if the special_small_blocks fill up the SSD. If I set it to 4K, how do I monitor usage? How do I even decide on a value?
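One way to approach "how do I decide on a value" is to estimate how much data each candidate threshold would send to the special vdev. Below is a minimal sketch, assuming the OpenZFS `special_small_blocks` rule that file data blocks smaller than or equal to the threshold go to the special class. The histogram and the 200 GiB special capacity are invented example numbers. Real per-size totals would have to come from your own pool, for example the block size histogram that recent `zdb` versions can print (often suggested as `zdb -Lbbbs <pool>`; check `man zdb` for your version). For monitoring, `zpool list -v` reports ALLOC and FREE for the special vdev separately, which is where the inputs to `fill_fraction` would come from.

```python
# Sketch: estimate how much file data a special_small_blocks value would place
# on the special vdev, and how full that vdev would then be.
# ASSUMPTION: HISTOGRAM and SPECIAL_CAPACITY are made-up example data.
GIB = 2**30

# Hypothetical histogram: data block size in bytes -> total bytes in blocks of that size.
HISTOGRAM = {
    512: 1 * GIB,
    4096: 20 * GIB,
    8192: 15 * GIB,
    16384: 30 * GIB,
    65536: 100 * GIB,
    131072: 800 * GIB,
}

SPECIAL_CAPACITY = 200 * GIB  # hypothetical usable size of the special mirror


def bytes_on_special(histogram, small_blocks):
    """Bytes of file data in blocks <= small_blocks (0 disables small-block placement).

    Metadata is also stored on the special vdev but is not counted here.
    """
    if small_blocks == 0:
        return 0
    return sum(total for block, total in histogram.items() if block <= small_blocks)


def fill_fraction(alloc, capacity):
    """Fraction of the special vdev that is allocated."""
    return alloc / capacity


if __name__ == "__main__":
    for threshold in (0, 4096, 16384, 65536):
        used = bytes_on_special(HISTOGRAM, threshold)
        print(f"special_small_blocks={threshold:>6}: {used / GIB:6.1f} GiB, "
              f"{fill_fraction(used, SPECIAL_CAPACITY):.0%} of special vdev")
```

The metadata share is left out on purpose, since it depends on the pool and has to be added on top of whatever the small-block estimate gives.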

> The redundancy of the special device should match the one of the pool, since the special device is a point of failure for the whole pool.

So if my pool is a RAID10 consisting of 3 x mirrors, can my special volume be a single mirror, or does it need to be 3 x mirrors?
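The quoted sentence can be read as a worst-case fault-tolerance argument. Here is a minimal sketch under a simple model, assuming every vdev is an n-way mirror (a single disk is modelled as a 1-way mirror) and that losing any top-level vdev, the special vdev included, loses the pool. Under that model the guaranteed tolerance is set by the weakest vdev, so the comparison is about the mirror width of the special vdev rather than how many mirrors it has. The function names are illustrative only.

```python
# Sketch: worst-case number of disk failures a pool is guaranteed to survive.
# ASSUMPTION: all vdevs are n-way mirrors, and losing any top-level vdev
# (data or special) loses the whole pool.


def mirror_tolerance(width):
    """Disks an n-way mirror can lose and still serve data."""
    return width - 1


def pool_tolerance(vdev_widths):
    """Guaranteed tolerance of the pool: the weakest vdev sets the limit."""
    return min(mirror_tolerance(w) for w in vdev_widths)


if __name__ == "__main__":
    data_vdevs = [2, 2, 2]  # RAID10-style pool: 3 x 2-way mirrors
    options = {
        "single-disk special": [1],
        "one 2-way mirror special": [2],
        "3 x 2-way mirror special": [2, 2, 2],
    }
    for label, special in options.items():
        print(f"{label}: survives {pool_tolerance(data_vdevs + special)} disk failure(s)")
```

In this model, extra special mirrors add capacity but do not raise the guaranteed tolerance above that of a single mirror of the same width.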
