Hello,

We have been facing serious issues with Proxmox since upgrading to PVE 5.1.

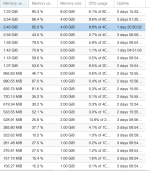

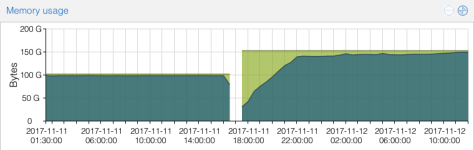

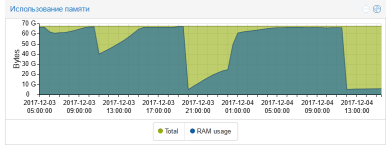

The host's memory usage climbs above 95%, after which it swaps heavily; this causes ZFS to crash with blocked tasks ("task x blocked for more than 120 seconds"). This happens daily, even though the VMs themselves do not use much RAM and the ZFS ARC is limited to 3 GB (for testing).
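One way to check whether the 3 GB ARC limit is actually being honoured is to compare the ARC's live size with its configured maximum. Below is a minimal Python sketch, assuming ZFS on Linux, which exposes its ARC counters as `name type data` rows in `/proc/spl/kstat/zfs/arcstats`; the `c_max` counter there should reflect the `zfs_arc_max` module parameter. The helper names `parse_arcstats` and `arc_summary` are hypothetical, made up for this example.

```python
"""Sketch: compare the live ZFS ARC size with its configured maximum.

Assumes ZFS on Linux, which exposes ARC counters in
/proc/spl/kstat/zfs/arcstats as "name type data" rows.
"""
import os

ARCSTATS = "/proc/spl/kstat/zfs/arcstats"
GIB = 1024 ** 3


def parse_arcstats(text: str) -> dict:
    """Return {counter_name: int_value} from arcstats-formatted text."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Data rows have exactly three fields: name, numeric type, numeric value.
        # This skips the kstat header line and the "name type data" column header.
        if len(parts) == 3 and parts[1].isdigit() and parts[2].lstrip("-").isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_summary(stats: dict) -> str:
    """Format current ARC size, target size (c) and maximum (c_max) in GiB."""
    return (
        f"ARC size {stats['size'] / GIB:.2f} GiB, "
        f"target c {stats['c'] / GIB:.2f} GiB, "
        f"c_max {stats['c_max'] / GIB:.2f} GiB"
    )


if __name__ == "__main__":
    if os.path.exists(ARCSTATS):
        with open(ARCSTATS) as f:
            stats = parse_arcstats(f.read())
        print(arc_summary(stats))
        if stats["size"] > stats["c_max"]:
            print("ARC is above its configured maximum")
    else:
        print(f"{ARCSTATS} not found (ZFS module not loaded?)")
```

If `size` stays at or below `c_max` while overall host memory keeps climbing, the growth is probably coming from somewhere other than the ARC data itself.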

ZFS Parameters: https://pastebin.com/1QuPwV9H

Memory usage is currently at 85% and still rising. Maybe there is a memory leak in ZFS, as I cannot see any process with high memory usage in top.

Output of `free -m`:

```
              total        used        free      shared  buff/cache   available
Mem:          96640       82148       14298          56         192       13888
Swap:          8191         400        7791
```

`/proc/meminfo`:

```
MemTotal: 98959416 kB
MemFree: 14505500 kB
MemAvailable: 14084660 kB
Buffers: 0 kB
Cached: 124884 kB
SwapCached: 3128 kB
Active: 18564224 kB
Inactive: 1284172 kB
Active(anon): 18530840 kB
Inactive(anon): 1265452 kB
Active(file): 33384 kB
Inactive(file): 18720 kB
Unevictable: 228432 kB
Mlocked: 228432 kB
SwapTotal: 8388604 kB
SwapFree: 7978492 kB
Dirty: 28 kB
Writeback: 24 kB
AnonPages: 19950472 kB
Mapped: 73668 kB
Shmem: 62212 kB
Slab: 2813728 kB
SReclaimable: 76060 kB
SUnreclaim: 2737668 kB
KernelStack: 19912 kB
PageTables: 84644 kB
NFS_Unstable: 0 kB
Bounce: 0 kB
WritebackTmp: 0 kB
CommitLimit: 57868312 kB
Committed_AS: 68509212 kB
VmallocTotal: 34359738367 kB
VmallocUsed: 0 kB
VmallocChunk: 0 kB
HardwareCorrupted: 0 kB
AnonHugePages: 1759232 kB
ShmemHugePages: 0 kB
ShmemPmdMapped: 0 kB
CmaTotal: 0 kB
CmaFree: 0 kB
HugePages_Total: 0
HugePages_Free: 0
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 2048 kB
DirectMap4k: 478892 kB
DirectMap2M: 19408896 kB
DirectMap1G: 80740352 kB
```
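The `/proc/meminfo` numbers can be cross-checked with a little arithmetic: subtract the fields that cover ordinary user-space and kernel allocations from the used memory (`MemTotal - MemFree`) and see what is left. A rough Python sketch of that calculation, using the values posted above; the set of fields in `ACCOUNTED` is an assumption of this sketch rather than an official formula, and the function names are hypothetical.

```python
"""Sketch: how much of the host's used memory is explained by /proc/meminfo?

Values are copied from the /proc/meminfo dump in this post (kB).
"""

MEMINFO = """\
MemTotal: 98959416 kB
MemFree: 14505500 kB
MemAvailable: 14084660 kB
Buffers: 0 kB
Cached: 124884 kB
SwapCached: 3128 kB
AnonPages: 19950472 kB
Slab: 2813728 kB
KernelStack: 19912 kB
PageTables: 84644 kB
"""

# Fields assumed to cover the usual user-space and kernel allocations.
# Shmem and AnonHugePages are left out because they overlap with
# Cached and AnonPages respectively.
ACCOUNTED = ("AnonPages", "Cached", "Buffers", "SwapCached",
             "Slab", "KernelStack", "PageTables")


def parse_meminfo(text: str) -> dict:
    """Return {field: value_in_kB} for every 'Name: value [kB]' line."""
    info = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        if rest.strip():
            info[name.strip()] = int(rest.split()[0])
    return info


def unaccounted_kb(info: dict) -> int:
    """Used memory (MemTotal - MemFree) not covered by the ACCOUNTED fields."""
    used = info["MemTotal"] - info["MemFree"]
    return used - sum(info[k] for k in ACCOUNTED)


def used_percent(info: dict) -> float:
    """Memory in use as a percentage, based on MemAvailable."""
    return 100.0 * (info["MemTotal"] - info["MemAvailable"]) / info["MemTotal"]


if __name__ == "__main__":
    info = parse_meminfo(MEMINFO)
    print(f"used: {used_percent(info):.1f}%")
    print(f"unaccounted: {unaccounted_kb(info) / 1024 ** 2:.1f} GiB")
```

With these values it prints 85.8% used, in line with the 85% mentioned earlier, and about 58.6 GiB of used memory that none of the listed fields accounts for. The sketch only measures the size of that gap; it cannot tell which kernel subsystem is holding the memory.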

Code:

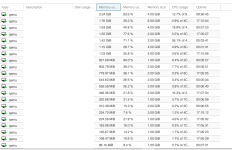

43624 root 20 0 2995380 2.030g 7996 S 67.0 2.2 16:12.18 kvm

164 root 25 5 0 0 0 S 52.9 0.0 153:15.23 ksmd

64317 root 20 0 5136908 2.143g 8016 S 36.9 2.3 36:07.25 kvm

56653 root 20 0 3029508 1.080g 4764 S 30.7 1.1 348:01.25 kvm

48314 root 20 0 7276344 1.956g 6324 S 24.8 2.1 190:31.68 kvm

52790 root 20 0 2985336 970128 7912 S 16.3 1.0 3:02.96 kvm

48118 root 20 0 1882308 432452 7880 S 9.8 0.4 1:32.24 kvm

6287 root 20 0 3036688 1.883g 4832 S 9.2 2.0 150:56.06 kvm

59919 root 20 0 5236348 3.904g 5124 S 7.5 4.1 145:19.38 kvm

9324 root 20 0 3114608 1.081g 4984 S 5.6 1.1 62:30.45 kvm

5872 root 20 0 316440 71324 7848 S 5.2 0.1 0:48.35 pvestatd

11425 root 20 0 1886420 363444 4932 S 4.9 0.4 48:06.05 kvm

47694 root 20 0 4247644 283364 4840 S 4.6 0.3 59:23.60 kvm

48049 root 20 0 5214828 1.733g 4892 S 4.6 1.8 67:22.25 kvm

56808 root 20 0 5203572 1.646g 7992 S 4.6 1.7 2:07.44 kvm

29439 root 20 0 2968040 1.219g 7984 S 3.3 1.3 2:22.91 kvm

54045 root 20 0 1941100 250880 4740 S 2.9 0.3 42:57.13 kvm

441 root 20 0 2965740 773216 7848 S 2.0 0.8 0:50.43 kvm

47339 root 20 0 1871000 232064 4936 S 2.0 0.2 20:22.70 kvm

2 root 20 0 0 0 0 S 1.3 0.0 13:55.21 kthreadd

431 root 20 0 0 0 0 S 1.3 0.0 2:24.06 arc_reclaim

34552 root 10 -10 1877908 218236 8232 S 1.3 0.2 15:23.28 ovs-vswitchd

39637 root 20 0 45844 4192 2676 R 1.3 0.0 0:00.15 top

48454 root 20 0 0 0 0 S 1.0 0.0 15:24.69 vhost-48314

53439 root 20 0 0 0 0 S 1.0 0.0 0:06.50 vhost-52790

418 root 0 -20 0 0 0 S 0.7 0.0 6:34.05 spl_dynamic_tas

3919 root 20 0 317724 71240 6504 S 0.7 0.1 0:06.16 pve-firewall

17078 root 20 0 1881264 203200 7860 S 0.7 0.2 0:17.57 kvm

24515 root 20 0 3036688 465872 7956 S 0.7 0.5 1:09.30 kvm

46240 root 20 0 1892572 293304 4760 S 0.7 0.3 3:44.11 kvm

47079 www-data 20 0 545876 106096 10824 S 0.7 0.1 0:02.08 pveproxy worker

47686 www-data 20 0 545880 106716 11292 S 0.7 0.1 0:03.33 pveproxy worker

47742 root 20 0 0 0 0 S 0.7 0.0 8:35.77 vhost-47694

50676 root 20 0 1914096 1.016g 7912 S 0.7 1.1 1:07.20 kvm

8 root 20 0 0 0 0 S 0.3 0.0 2:07.07 ksoftirqd/0

41 root 20 0 0 0 0 S 0.3 0.0 1:10.84 ksoftirqd/5

419 root 0 -20 0 0 0 S 0.3 0.0 1:08.40 spl_kmem_cache

425 root 0 -20 0 0 0 S 0.3 0.0 10:44.95 zvol

6580 root 20 0 0 0 0 S 0.3 0.0 0:00.29 kworker/22:1

8545 root 1 -19 0 0 0 S 0.3 0.0 0:25.06 z_wr_iss

8549 root 1 -19 0 0 0 S 0.3 0.0 0:24.78 z_wr_iss

8560 root 1 -19 0 0 0 S 0.3 0.0 0:24.91 z_wr_iss

8566 root 0 -20 0 0 0 S 0.3 0.0 0:30.17 z_wr_int_2

12531 root 0 -20 0 0 0 S 0.3 0.0 0:34.06 z_null_int

12532 root 0 -20 0 0 0 S 0.3 0.0 0:00.32 z_rd_iss

12533 root 0 -20 0 0 0 S 0.3 0.0 0:19.90 z_rd_int_0

12534 root 0 -20 0 0 0 S 0.3 0.0 0:20.24 z_rd_int_1

12536 root 0 -20 0 0 0 S 0.3 0.0 0:19.59 z_rd_int_3

12538 root 0 -20 0 0 0 S 0.3 0.0 0:19.47 z_rd_int_5
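To quantify how much memory the processes in top actually hold, the RES column can be summed. A minimal Python sketch, assuming top's default units (KiB, with an `m`/`g`/`t` suffix when top scales a value) and the standard 12-column process table, for example captured with `top -b -n 1 > top.txt`; the script name `sum_res.py` and the function names are hypothetical.

```python
"""Sketch: sum the RES column of `top` output."""
import sys

# top prints RES in KiB by default and appends m/g/t when it scales the value.
SCALE_KIB = {"m": 1024, "g": 1024 ** 2, "t": 1024 ** 3}


def res_kib(field: str) -> float:
    """Convert a top RES field such as '970128' or '2.030g' to KiB."""
    suffix = field[-1].lower()
    if suffix in SCALE_KIB:
        return float(field[:-1]) * SCALE_KIB[suffix]
    return float(field)


def total_res_kib(lines) -> float:
    """Sum RES (6th column) over process rows, skipping header and summary lines."""
    total = 0.0
    for line in lines:
        parts = line.split()
        # Process rows start with a numeric PID; header and summary rows do not.
        if len(parts) >= 12 and parts[0].isdigit():
            total += res_kib(parts[5])
    return total


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: top -b -n 1 > top.txt; python3 sum_res.py top.txt
    with open(sys.argv[1]) as f:
        print(f"total RES: {total_res_kib(f) / 1024 ** 2:.1f} GiB")
```

Adding up the RES values of the rows listed above gives roughly 24 GiB. RES also counts shared pages (including pages merged by KSM) once per process, so the real total is lower still, far below the roughly 80 GiB that `free` reports as used.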

`zpool list -v`:

```
NAME                                               SIZE  ALLOC   FREE  EXPANDSZ  FRAG   CAP  DEDUP  HEALTH  ALTROOT
hddpool                                           2.72T   428G  2.30T         -   15%   15%  1.00x  ONLINE  -
  mirror                                           928G   143G   785G         -   15%   15%
    ata-HGST_HTS721010A9E630_JR1004D31UZBAM           -      -      -         -     -     -
    ata-HGST_HTS721010A9E630_JR1004D31VDMKM           -      -      -         -     -     -
  mirror                                           928G   143G   785G         -   16%   15%
    ata-HGST_HTS721010A9E630_JR1020D31401XM           -      -      -         -     -     -
    ata-HGST_HTS721010A9E630_JR10044M2LE1MM           -      -      -         -     -     -
  mirror                                           928G   142G   786G         -   16%   15%
    ata-HGST_HTS721010A9E630_JR1020D314GPDM           -      -      -         -     -     -
    ata-HGST_HTS721010A9E630_JR1020D3146ALM           -      -      -         -     -     -
  log                                                 -      -      -         -     -     -
    ata-WDC_WDS250G1B0A-00H9H0_164230800081-part1 4.97G  3.39M  4.97G         -   63%    0%
  cache                                               -      -      -         -     -     -
    ata-WDC_WDS250G1B0A-00H9H0_164230800081-part2  228G  7.29G   221G         -    0%    3%
rpool                                              136G  17.5G   119G         -   45%   12%  1.00x  ONLINE  -
  mirror                                           136G  17.5G   119G         -   45%   12%
    sdi2                                              -      -      -         -     -     -
    sdj2                                              -      -      -         -     -     -
ssdpool                                            464G  46.9G   417G         -   28%   10%  1.00x  ONLINE  -
  mirror                                           464G  46.9G   417G         -   28%   10%
    ata-WDC_WDS500G1B0A-00H9H0_165161800154           -      -      -         -     -     -
    ata-WDC_WDS500G1B0A-00H9H0_165161800854           -      -      -         -     -     -
webhddpool                                        1.81T   409G  1.41T         -   29%   22%  1.00x  ONLINE  -
  mirror                                           928G   203G   725G         -   30%   21%
    ata-HGST_HTS721010A9E630_JR1020D314GAWM           -      -      -         -     -     -
    ata-HGST_HTS721010A9E630_JR1020D314RKGE           -      -      -         -     -     -
  mirror                                           928G   206G   722G         -   29%   22%
    ata-HGST_HTS721010A9E630_JR1020D310X1XN           -      -      -         -     -     -
    ata-HGST_HTS721010A9E630_JR1020D315HP4E           -      -      -         -     -     -
  log                                                 -      -      -         -     -     -
    ata-WDC_WDS250G1B0A-00H9H0_164304A00904-part1 4.97G  1.38M  4.97G         -   43%    0%
  cache                                               -      -      -         -     -     -
    ata-WDC_WDS250G1B0A-00H9H0_164304A00904-part2  228G   697M   227G         -    0%    0%
webssdpool                                         464G  36.7G   427G         -   33%    7%  1.00x  ONLINE  -
  mirror                                           464G  36.7G   427G         -   33%    7%
    ata-WDC_WDS500G1B0A-00H9H0_164501A01151           -      -      -         -     -     -
    ata-WDC_WDS500G1B0A-00H9H0_164401A02B23           -      -      -         -     -     -
```
