Hi there,

I'm not sure if I'm misunderstanding how ZFS works, or if this is even related to ZFS. I'd love it if someone could explain exactly what's going on, or help figure out whether it's a bug.

Latest version, up to date:

proxmox-ve : 7.3-1

pve-manager: 7.3-4

I have five 8TB HDDs set up as a RAIDZ2, so I have 23.53 TB of available space on this ZFS pool.

On this zpool I have 5 VM disks and 1 CT volume. Based on the sizes shown in the VM Disks and CT Volumes menus, they take up 14211GB in total, or 14.2TB.

The summary shows I am using 19.06TB of 23.53 TB.

Where is the other 4.85TB or so? What is that being used for?

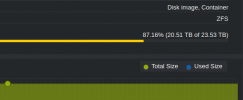

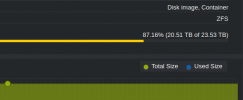

If I create a new VM with a 1TB (1000GB) disk, my space usage goes from 19.06TB / 23.53TB to 20.51TB / 23.53TB. The 1000GB disk shows as 1.07TB, but going by the change in pool usage it is consuming 1.45TB. That's about 35% more than the 1.07TB it should be using. What am I not understanding?

After creating a 1000GB drive: a 1.45TB increase, instead of 1TB or 1.07TB.
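
To make the arithmetic explicit, here is a quick Python sketch of the numbers above. The variable names are just mine for illustration, and the figures are simply what the GUI displays, so rounding in the GUI may shift the results slightly.

```python
# Figures copied from the Proxmox GUI, all in TB as displayed.
used_before = 19.06   # pool usage before creating the new disk
used_after = 20.51    # pool usage after creating the new disk
disk_shown = 1.07     # size the GUI shows for the new 1000GB disk
vm_ct_total = 14.211  # sum of the 5 VM disks and 1 CT volume

# How much the pool usage actually grew when the disk was created.
growth = used_after - used_before

# Extra space consumed relative to the size shown for the disk.
overhead_pct = (growth / disk_shown - 1) * 100

# Pool usage that the listed VM/CT volumes do not account for.
unaccounted = used_before - vm_ct_total

print(f"pool usage grew by {growth:.2f} TB")
print(f"overhead vs. displayed disk size: {overhead_pct:.1f}%")
print(f"usage not accounted for by VM/CT volumes: {unaccounted:.2f} TB")
```
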

As a side note, pve > Disks > ZFS shows 14.01 TB free space and 26TB allotted. This is entirely different from what the pool summary shows.

Thank you in advance. I hope to at least understand whether there's an issue with what is being reported.
