Hi, I'm new to the boards and am finally joining after getting thoroughly stuck on my homelab build. I've been researching this for a couple of days now and keep finding partial answers, but never exactly what (I think) I'm after. I'm trying to understand why I lose roughly 45% of a host ZFS pool's available space when assigning it to a VM. Here's a breakdown of what I'm seeing.

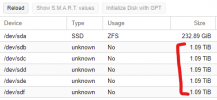

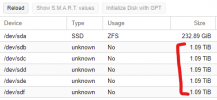

I have 5 x 1.2 TB drives unused on the host:

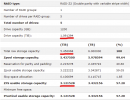

I create a ZFS pool (RAIDZ2, compression = lz4, ashift = 12) which reads as 5.45 TiB:

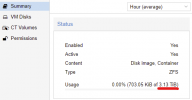

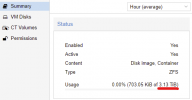

The Storage summary page shows 3.13 TiB:

This all totally makes sense so far, based on the ZFS calculator I used. I get that you lose space to parity, slop, etc.
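For reference, the raw and post-parity figures can be roughly reproduced with a quick calculation. This is only a back-of-the-envelope sketch, not how ZFS or Proxmox actually derive their numbers: it assumes the drives are sized in decimal TB, treats RAIDZ2 as simply setting aside two drives' worth of parity, and ignores slop space, padding, and metadata, so it lands somewhat above the 3.13 TiB shown. The function names are made up for illustration.

```python
TIB = 2**40  # bytes in one tebibyte

def raw_tib(drive_count: int, drive_tb: float) -> float:
    """Raw pool size in TiB, assuming drives are sold in decimal TB (10**12 bytes)."""
    return drive_count * drive_tb * 10**12 / TIB

def raidz_data_tib(drive_count: int, drive_tb: float, parity: int) -> float:
    """Idealised capacity left after parity; ignores slop, padding, and metadata."""
    return raw_tib(drive_count, drive_tb) * (drive_count - parity) / drive_count

# 5 x 1.2 TB drives in RAIDZ2 (two parity drives' worth of space)
print(f"raw:          {raw_tib(5, 1.2):.2f} TiB")
print(f"after parity: {raidz_data_tib(5, 1.2, 2):.2f} TiB")
```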

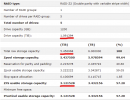

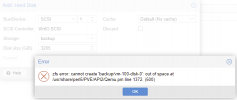

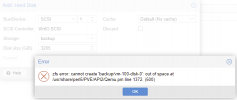

But when I try to add 3205 GiB to my VM (which displays as 3.13 TiB available), it throws an out-of-space error:

Through trial and error, I found that I could only use 1769 GiB, which is about 55% of what is shown to be available (roughly 45% less). The Storage summary screen now shows 99.97% usage.
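To put a number on the gap, here is a small sketch comparing the largest size that worked with the size shown as available. Both figures are the ones quoted above; the variable names are just for illustration.

```python
available_gib = 3205   # size shown as available (3.13 TiB)
usable_gib = 1769      # largest size that was accepted

usable_share = usable_gib / available_gib
shortfall_gib = available_gib - usable_gib

print(f"usable share: {usable_share:.1%}")
print(f"shortfall:    {1 - usable_share:.1%} ({shortfall_gib} GiB)")
```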

So yeah, I'm super confused and assuming there's just something fundamental with how Proxmox handles ZFS storage I'm not grasping. Sorry if this has been addressed before, and thanks in advance for any help!
