Dear Members,

I have a freshly installed Proxmox 8 with two ZFS.

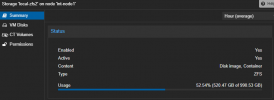

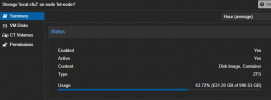

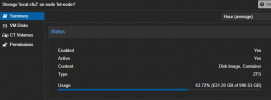

Current disk usage (btw, the reported usage is not the real usage):

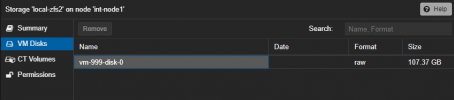

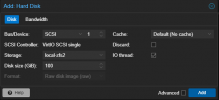

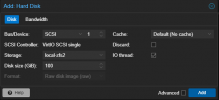

I'll add a 100GB VM disk (completely empty, I'm just adding it):

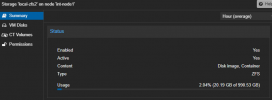

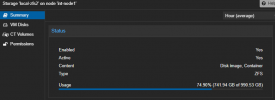

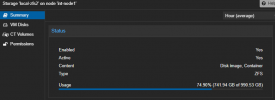

Current usage:

Why does my ZFS work like this?

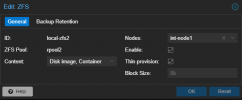

ZFS details:

Code:

root@int-node1:~# zpool get all

NAME PROPERTY VALUE SOURCE

rpool2 size 952G -

rpool2 capacity 29% -

rpool2 altroot - default

rpool2 health ONLINE -

rpool2 guid 17368552471780374021 -

rpool2 version - default

rpool2 bootfs - default

rpool2 delegation on default

rpool2 autoreplace off default

rpool2 cachefile - default

rpool2 failmode wait default

rpool2 listsnapshots off default

rpool2 autoexpand off default

rpool2 dedupratio 1.00x -

rpool2 free 672G -

rpool2 allocated 280G -

rpool2 readonly off -

rpool2 ashift 12 local

rpool2 comment - default

rpool2 expandsize - -

rpool2 freeing 0 -

rpool2 fragmentation 0% -

rpool2 leaked 0 -

rpool2 multihost off default

rpool2 checkpoint - -

rpool2 load_guid 2149096279672757092 -

rpool2 autotrim off default

rpool2 compatibility off default

rpool2 feature@async_destroy enabled local

rpool2 feature@empty_bpobj active local

rpool2 feature@lz4_compress active local

rpool2 feature@multi_vdev_crash_dump enabled local

rpool2 feature@spacemap_histogram active local

rpool2 feature@enabled_txg active local

rpool2 feature@hole_birth active local

rpool2 feature@extensible_dataset active local

rpool2 feature@embedded_data active local

rpool2 feature@bookmarks enabled local

rpool2 feature@filesystem_limits enabled local

rpool2 feature@large_blocks enabled local

rpool2 feature@large_dnode enabled local

rpool2 feature@sha512 enabled local

rpool2 feature@skein enabled local

rpool2 feature@edonr enabled local

rpool2 feature@userobj_accounting active local

rpool2 feature@encryption enabled local

rpool2 feature@project_quota active local

rpool2 feature@device_removal enabled local

rpool2 feature@obsolete_counts enabled local

rpool2 feature@zpool_checkpoint enabled local

rpool2 feature@spacemap_v2 active local

rpool2 feature@allocation_classes enabled local

rpool2 feature@resilver_defer enabled local

rpool2 feature@bookmark_v2 enabled local

rpool2 feature@redaction_bookmarks enabled local

rpool2 feature@redacted_datasets enabled local

rpool2 feature@bookmark_written enabled local

rpool2 feature@log_spacemap active local

rpool2 feature@livelist enabled local

rpool2 feature@device_rebuild enabled local

rpool2 feature@zstd_compress enabled local

rpool2 feature@draid enabled local

root@int-node1:~#
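
As a quick sanity check on the pool-level numbers above, here is a minimal sketch that recomputes the capacity from the size, allocated and free values. The figures are copied by hand from the output; the helper name `cap_percent` is only for illustration, and truncating to an integer percentage is my assumption about how the value is displayed.

```shell
#!/bin/sh
# Values copied from the `zpool get all` output above (unit: G as printed).
size=952
allocated=280
free=672

# Integer percentage of the pool that is allocated.
# Assumption: the result is truncated, not rounded.
cap_percent() {
    awk -v a="$1" -v s="$2" 'BEGIN { printf "%d\n", (a / s) * 100 }'
}

# allocated + free should add up to the pool size.
echo "allocated + free = $((allocated + free))G (size is ${size}G)"

# Should match the capacity value reported by zpool.
echo "capacity = $(cap_percent "$allocated" "$size")%"
```

So the pool-level figures agree with each other (280G + 672G = 952G, and 280/952 is about 29%). What I don't understand is why adding an empty disk changes the usage at all.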

Code:

root@int-node1:~# zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

rpool2 952G 280G 672G - - 0% 29% 1.00x ONLINE -

root@int-node1:~#

What can I do to enable thin provisioning?