[SOLVED] zero-out data on image when disk is being removed takes a long time

- Thread starter: Appollonius
- Tags: storage


What disk do you use?

These are SSDs (Samsung 860 EVO), if I am not mistaken. I create the VMs with Terraform, and with version 6.4 the disks would normally disappear instantly. But since Proxmox 7.0 there have been issues with removing a VM: it was not removed entirely because the VMID's disk still existed, so I appended 'saferemove' to the /etc/pve/storage.cfg file, and now this. Removing the VM disk alone takes about 10 to 30 minutes. This has always been a matter of seconds here...

Are there other VM images running on the disk when you do that? If so, how many?

2 other VM disk images. I have the feeling that it has something to do with the 'saferemove' parameter in the /etc/pve/storage.cfg file. Before, it would remove the image without any issue, but after the image had been removed there would be some leftovers (which, as I have read elsewhere on this forum, happens since Proxmox 7.0) that block reuse of the same VMID.

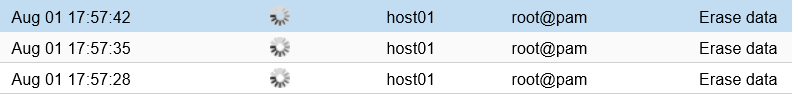

Also, at this moment Proxmox is still busy 'Erasing the data' of these images; it is about 1 hour in now.


Hm okay, three operations in parallel should indeed not be an issue.

Well, I don't know if you can do anything with it or suggest a better approach, but here is the /etc/pve/storage.cfg file:

```
lvm: SSD02-HOST01
    vgname SSD02-HOST01
    content images,rootdir
    nodes host01
    shared 0
    saferemove 1

lvm: SSD02-HOST02
    vgname SSD02-HOST02
    content images
    nodes host02
    shared 0
    saferemove 1

lvm: SSD03-HOST02
    vgname SSD03-HOST02
    content images
    nodes host02
    shared 0
    saferemove 1

lvmthin: SSD01-HOST01
    thinpool data
    vgname pve
    content images
    nodes host01

lvmthin: SSD01-HOST02
    thinpool data
    vgname pve
    content images
    nodes host02
```

EDIT: I have a 2-node cluster.
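To see at a glance which storages have saferemove switched on, a config like the one above can be scanned with a few lines of Python. This is a minimal sketch that assumes the simple layout shown in this thread (an unindented `type: id` header line followed by indented `key value` option lines). It is not Proxmox's own parser, and the function name `saferemove_storages` is hypothetical.

```python
def saferemove_storages(cfg_text: str) -> list[str]:
    """Return the IDs of storages whose section sets 'saferemove 1'.

    Hypothetical helper: assumes each section starts with an unindented
    'type: id' line and that options are indented 'key value' lines.
    """
    enabled = []
    current = None  # storage ID of the section currently being read
    for raw in cfg_text.splitlines():
        if not raw.strip():
            continue  # blank lines only separate sections
        if not raw[0].isspace():
            # Section header, e.g. "lvm: SSD02-HOST01"
            _stype, _, sid = raw.partition(":")
            current = sid.strip()
            continue
        # Indented option line, e.g. "    saferemove 1"
        key, _, value = raw.strip().partition(" ")
        if key == "saferemove" and value.strip() == "1" and current:
            enabled.append(current)
    return enabled


example = """\
lvm: SSD02-HOST01
    vgname SSD02-HOST01
    content images,rootdir
    saferemove 1

lvmthin: SSD01-HOST01
    thinpool data
    vgname pve
"""

print(saferemove_storages(example))
```

Fed the full config from this post, it would list the three `lvm` storages, which are the ones where removal runs the zeroing step.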

There are lots of guys with more experience in the hardware section. My guess would have been excessive IO, but that doesn't seem to be the case.

Yeah, well, I think it is the saferemove option, as it zeroes out the disk. But without the saferemove option, the VM image disks are not wiped completely, which prevents reuse of that VMID.
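Why does zeroing take so long? If saferemove overwrites the removed volume with zeros, the wall-clock time grows roughly linearly with the image size divided by the effective write rate. The sketch below does that back-of-the-envelope arithmetic. The 32 GiB size and 20 MiB/s rate are made-up example values, not measurements from this thread, and the real rate depends on the disk, any throttling, and concurrent I/O.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def zero_out_seconds(image_bytes: int, write_bytes_per_s: float) -> float:
    """Rough time to overwrite a whole volume with zeros at a steady rate."""
    if write_bytes_per_s <= 0:
        raise ValueError("write rate must be positive")
    return image_bytes / write_bytes_per_s


# Hypothetical example: a 32 GiB image wiped at an effective 20 MiB/s.
secs = zero_out_seconds(32 * GIB, 20 * MIB)
print(f"{secs:.0f} s (~{secs / 60:.1f} min)")
```

Doubling the image size doubles the estimate, so a wipe that feels instant on a small test disk can take tens of minutes on a large one.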

Hi,

you shouldn't need saferemove anymore if you are using the latest version. It's true there was an issue, because the newer LVM version seems to complain more often about signatures, but the issue in Proxmox VE was fixed. Make sure you have at least libpve-storage-perl >= 7.0-8.

Yep, I believe it is solved now. I run libpve-storage-perl 7.0.9 and removed 'saferemove' from /etc/pve/storage.cfg. It removes the disk image instantly now. Thanks a lot for the heads-up!
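A note on checking the "libpve-storage-perl >= 7.0-8" requirement: Debian version strings must not be compared as plain text, because "7.0-10" sorts before "7.0-8" lexically even though it is newer. On the host itself, `dpkg --compare-versions 7.0-10 ge 7.0-8 && echo ok` does the comparison properly. The Python sketch below is a simplified stand-in that only handles purely numeric versions like the ones in this thread (no epochs, tildes or letters). The function names are hypothetical.

```python
import re
from typing import Optional


def numeric_version_key(version: str) -> tuple[int, ...]:
    """Split a purely numeric version like '7.0-10' into (7, 0, 10).

    Simplified: ignores epochs, '~' and letters, which real Debian
    comparison (dpkg --compare-versions) handles.
    """
    return tuple(int(part) for part in re.split(r"[.\-]", version))


def package_version(pveversion_output: str, package: str) -> Optional[str]:
    """Find '<package>: <version>' in `pveversion -v` style output."""
    for line in pveversion_output.splitlines():
        name, sep, rest = line.partition(":")
        if sep and name.strip() == package:
            fields = rest.split()
            return fields[0] if fields else None
    return None


output = """\
pve-manager: 7.0-11 (running version: 7.0-11/63d82f4e)
libpve-storage-perl: 7.0-10
lvm2: 2.03.11-2.1
"""

installed = package_version(output, "libpve-storage-perl")
print(installed, "7.0-10" < "7.0-8")  # plain string comparison gets this wrong
print(numeric_version_key(installed) >= numeric_version_key("7.0-8"))
```

Comparing tuples of integers makes 10 > 8 hold per component, which is exactly where the string comparison goes wrong.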

Sorry to grab this thread again. I have the same problem. Saferemove is not activated but erasing (and pruning) takes forever. Deleting the backup dump files manually over the shell works in <1s per dump. Any ideas?

P.S. I am using the latest Proxmox 7.

P.P.S. Even stopping the action using the GUI does not work ...


Hi,

please share the output of pveversion -v and cat /etc/pve/storage.cfg and tell us which storage has the issues. Are there any errors/warnings in the Erase data task log (double-click on the task to get the log) or in /var/log/syslog?

No errors in syslog. Strangely, the problem seems to be resolved now that I have freed up some space by deleting backups manually. The storage was full (98%) when it did not work, while it is only 60% occupied now that it works. I also observed high I/O delay while the storage was full. Is that the reason the delete action was so slow?

The backups are stored on tank-300g.


pveversion -v

```
root@pve:~# pveversion -v
proxmox-ve: 7.0-2 (running kernel: 5.11.22-3-pve)
pve-manager: 7.0-11 (running version: 7.0-11/63d82f4e)
pve-kernel-5.11: 7.0-6
pve-kernel-helper: 7.0-6
pve-kernel-5.4: 6.4-4
pve-kernel-5.11.22-3-pve: 5.11.22-6
pve-kernel-5.11.22-2-pve: 5.11.22-4
pve-kernel-5.11.22-1-pve: 5.11.22-2
pve-kernel-5.4.124-1-pve: 5.4.124-1
pve-kernel-5.4.119-1-pve: 5.4.119-1
pve-kernel-5.4.114-1-pve: 5.4.114-1
pve-kernel-5.4.106-1-pve: 5.4.106-1
pve-kernel-5.4.73-1-pve: 5.4.73-1
ceph-fuse: 14.2.21-1
corosync: 3.1.2-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: 0.8.36
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.21-pve1
libproxmox-acme-perl: 1.2.0
libproxmox-backup-qemu0: 1.2.0-1
libpve-access-control: 7.0-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.0-5
libpve-guest-common-perl: 4.0-2
libpve-http-server-perl: 4.0-2
libpve-storage-perl: 7.0-10
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.9-4
lxcfs: 4.0.8-pve2
novnc-pve: 1.2.0-3
proxmox-backup-client: 2.0.8-1
proxmox-backup-file-restore: 2.0.8-1
proxmox-mini-journalreader: 1.2-1
proxmox-widget-toolkit: 3.3-6
pve-cluster: 7.0-3
pve-container: 4.0-9
pve-docs: 7.0-5
pve-edk2-firmware: 3.20200531-1
pve-firewall: 4.2-2
pve-firmware: 3.2-4
pve-ha-manager: 3.3-1
pve-i18n: 2.4-1
pve-qemu-kvm: 6.0.0-3
pve-xtermjs: 4.12.0-1
pve-zsync: 2.2
qemu-server: 7.0-13
smartmontools: 7.2-pve2
spiceterm: 3.2-2
vncterm: 1.7-1
zfsutils-linux: 2.0.5-pve1
```

```
root@pve:~# cat /etc/pve/storage.cfg
dir: local
    path /var/lib/vz
    content iso,backup,vztmpl

lvmthin: local-lvm
    thinpool data
    vgname pve
    content images,rootdir

dir: vm-backups
    path /tank-300g/vm-backups
    content backup
    prune-backups keep-all=1
    shared 0

pbs: pbs
    disable
    datastore pool
    server X.X.X.X
    content backup
    encryption-key XXX
    fingerprint XXX
    prune-backups keep-all=1
    username XXX
```

Sorry, it does not fully work after all. Here's the deal: it worked for all backups from August, but not for the ones from July. I deleted some of the August backups manually. The directory now contains lots of *.log files without their respective dump files. Is that part of the problem, and should I delete the *.log files in the "dump" directory? Could they break something?

Regarding the slow deletion on the nearly full storage: assuming the directory is on top of ZFS, I think that's likely the reason. One shouldn't let ZFS get too full, as it's copy-on-write.
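Since a nearly full copy-on-write pool is the suspected cause here, a simple usage check can warn before it gets that far. This is a minimal Python sketch using the standard library's `shutil.disk_usage`. The 80% threshold is an arbitrary example value, not an official limit. On ZFS, the capacity column of `zpool list` is the more authoritative figure, since the free space reported through a dataset may differ from the pool's.

```python
import shutil


def usage_percent(used: int, total: int) -> float:
    """Percentage of capacity in use."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * used / total


def check_path(path: str, threshold: float = 80.0) -> str:
    """Report usage of the filesystem holding `path` (threshold is an example)."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    pct = usage_percent(usage.used, usage.total)
    status = "WARNING" if pct >= threshold else "ok"
    return f"{path}: {pct:.0f}% used ({status})"


# "/tank-300g/vm-backups" is the backup directory from this thread;
# "/" is used here so the sketch runs on any machine.
print(check_path("/"))
```

With the figures from this thread, 98% would trip the example threshold, while 60% would not.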

The log files can be safely removed if no backups for them exist anymore.
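To find such leftover logs before deleting anything, one can list the *.log files in the dump directory that have no backup archive with the same base name. This sketch assumes vzdump's usual naming, where an archive like vzdump-qemu-100-2021_07_01-00_00_01.vma.zst sits next to vzdump-qemu-100-2021_07_01-00_00_01.log. The suffix list, the function name `orphaned_logs` and the sample file names are hypothetical. The sketch only reports candidates and deletes nothing.

```python
# Assumed vzdump archive suffixes (VMs: .vma*, containers: .tar*).
ARCHIVE_SUFFIXES = (".vma", ".vma.zst", ".vma.gz", ".vma.lzo",
                    ".tar", ".tar.zst", ".tar.gz", ".tar.lzo", ".tgz")


def orphaned_logs(filenames: list[str]) -> list[str]:
    """Return *.log names that have no archive with the same base name."""
    stems_with_archive = set()
    for name in filenames:
        for suffix in ARCHIVE_SUFFIXES:
            if name.endswith(suffix):
                stems_with_archive.add(name[: -len(suffix)])
                break
    return sorted(
        name for name in filenames
        if name.endswith(".log") and name[: -len(".log")] not in stems_with_archive
    )


files = [
    "vzdump-qemu-100-2021_07_01-00_00_01.vma.zst",
    "vzdump-qemu-100-2021_07_01-00_00_01.log",
    "vzdump-qemu-100-2021_08_01-00_00_01.log",  # archive already deleted
]
print(orphaned_logs(files))

# On the host, a directory listing could be fed in instead, e.g.
# orphaned_logs(os.listdir("/tank-300g/vm-backups/dump"))
```

Matching on the full base name, not just the VMID, keeps a log from one backup run from being "covered" by an archive from a different date.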
