I had some trouble getting it to work with my configuration. The solution I found to be reliable (after a lot of restarts and trial and error) is to unbind the GPU from vfio-pci and bind it to amdgpu after the VM (with the GPU passed through) has stopped.

If I run that before the VM starts, the GPU gets stuck and only a system reboot helps.
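To see which driver currently owns the card before and after the VM runs, something like the following can help. It is only a sketch: the function name pci_driver is made up for illustration, and 0000:03:00.0 is the address from my setup, so adjust it to yours.

```shell
#!/bin/sh
# Print the kernel driver a PCI device is currently bound to, or "none".
# $1: PCI address (0000:03:00.0 is the GPU address used in this post)
# $2: sysfs root, defaults to /sys (only overridden for testing)
pci_driver() {
    link="${2:-/sys}/bus/pci/devices/$1/driver"
    if [ -L "$link" ]; then
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}

pci_driver 0000:03:00.0
```

I would expect vfio-pci while the card is reserved for the VM and amdgpu once the host has it back. `lspci -nnk -s 03:00.0` shows the same information under "Kernel driver in use".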

I also noticed that I no longer have problems using 256MB for BAR2. Previously it seemed to work with Windows only when BAR2 was 8MB, but that is no longer the case (at least for me).

Everything is working so far and I successfully tested my config with multiple reboots of the Windows VM.

It might be that I had trouble because my iGPU (Intel) is available to Proxmox at first, but as soon as my media VM starts it gets passed through and Proxmox only has the 9070 XT (amdgpu) left to use.

Here are the steps that give me a reliable 9070 XT passthrough:

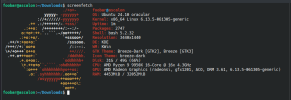

Update Kernel

I updated my kernel to 6.11 (the latest kernel version for Proxmox at the moment). To do that, go to the linked post and follow the steps:

Opt-in Linux 6.11 Kernel for Proxmox VE 8 available on test & no-subscription

Edit /etc/modules file

Since I had an AMD GPU before this upgrade (an old Vega 64), I removed the kernel modules that were no longer needed. The only kernel modules I have now in /etc/modules are the following:

code_language.shell:

# /etc/modules: kernel modules to load at boot time.

#

# This file contains the names of kernel modules that should be loaded

# at boot time, one per line. Lines beginning with "#" are ignored.

# Parameters can be specified after the module name.

vfio

vfio_iommu_type1

vfio_pci

If you had to change your /etc/modules file, you need to run the following:

code_language.shell:

update-initramfs -u -k all

Reboot proxmox

If you updated the kernel or changed the /etc/modules file you need to perform a reboot.
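After the reboot it is worth checking that the new kernel is running and the three modules are loaded. A small sketch, assuming they are built as loadable modules and therefore show up in /proc/modules (the helper name missing_vfio_modules is my own):

```shell
#!/bin/sh
# Print every expected VFIO module that is NOT listed as loaded.
# $1: module list file, defaults to /proc/modules (overridden only for testing)
missing_vfio_modules() {
    for m in vfio vfio_iommu_type1 vfio_pci; do
        grep -q "^$m " "${1:-/proc/modules}" 2>/dev/null || echo "$m"
    done
}

uname -r                 # running kernel version
missing_vfio_modules     # no output means all three modules are loaded
```

If a module is printed, double-check /etc/modules and whether update-initramfs ran without errors.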

Download and use the GPU ROM file

The following example is for my PowerColor RX 9070 XT Reaper. Head over to TechPowerUp (for XT) or TechPowerUp (for non-XT) and copy the download link of your GPU model's ROM file.

SSH into your Proxmox host and cd into the kvm folder (/usr/share/kvm, where Proxmox looks for romfile entries).

Download the copied ROM file of your GPU model into the folder:

code_language.shell:

# this would be for my PowerColor RX 9070 XT Reaper model

# wget https://www.techpowerup.com/vgabios/274342/Powercolor.RX9070XT.16384.241204_1.rom

wget REPLACE_YOUR_COPIED_DOWNLOAD_URL_HERE

Rename the downloaded file to 9070xt.rom or 9070.rom.

code_language.shell:

# example for my PowerColor RX 9070 XT Reaper model

mv Powercolor.RX9070XT.16384.241204_1.rom 9070xt.rom
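If wget was given the wrong link, it may save an HTML page instead of the ROM. A quick sanity check, as a sketch: PCI option ROMs begin with the two signature bytes 55 aa, so the first two bytes of the file can be inspected. The function name rom_has_signature is hypothetical, and the path assumes the file was renamed as above.

```shell
#!/bin/sh
# Return success if the file starts with the PCI option ROM signature 0x55 0xAA.
rom_has_signature() {
    [ "$(od -An -tx1 -N2 "$1" 2>/dev/null | tr -d ' \n')" = "55aa" ]
}

rom=/usr/share/kvm/9070xt.rom
if [ -f "$rom" ]; then
    if rom_has_signature "$rom"; then
        echo "$rom looks like a PCI option ROM"
    else
        echo "$rom does not start with 55 aa, check the download"
    fi
fi
```

This only checks the header, not whether the ROM matches your exact card.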

Edit your VM's .conf file (located in /etc/pve/qemu-server/). The name of the .conf file is the ID of your VM; mine, for example, is 200.

code_language.shell:

nano /etc/pve/qemu-server/200.conf

Add your ROM file to the configuration. Since mine is a 9070 XT I will use the renamed .rom filename "9070xt.rom".

Locate the hostpci entry of your GPU and append "pcie=1,x-vga=1,romfile=9070xt.rom". A complete example of this line for my configuration, with the GPU at PCI address 0000:03:00, is below:

code_language.shell:

hostpci1: 0000:03:00,pcie=1,x-vga=1,romfile=9070xt.rom
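A typo in the romfile name is easy to miss, so here is a rough check that every romfile referenced in the config actually exists in /usr/share/kvm. The helper name conf_romfiles is made up, and 200.conf is my VM ID, so replace it with yours.

```shell
#!/bin/sh
# Print the romfile= value of every hostpci entry in a VM config file.
# $1: path to the VM .conf file
conf_romfiles() {
    sed -n 's/^hostpci[0-9]*:.*romfile=\([^,]*\).*/\1/p' "$1"
}

conf=/etc/pve/qemu-server/200.conf
if [ -f "$conf" ]; then
    for rom in $(conf_romfiles "$conf"); do
        [ -f "/usr/share/kvm/$rom" ] || echo "missing: /usr/share/kvm/$rom"
    done
fi
```

No output means every referenced ROM file was found.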

Create / edit and add your hookscript

I created a hookscript for the GPU and placed the following script in /var/lib/vz/snippets (I called it vmGPU.sh):

code_language.shell:

#!/bin/bash

# Proxmox calls the hookscript with the VM ID as $1 and the phase as $2

phase="$2"

if [ "$phase" == "post-stop" ]; then

# Unbind gpu from vfio-pci

sleep 5

echo "0000:03:00.0" > /sys/bus/pci/drivers/vfio-pci/unbind 2>/dev/null

sleep 2

# Bind amdgpu

echo "0000:03:00.0" > /sys/bus/pci/drivers/amdgpu/bind 2>/dev/null

sleep 2

fi
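For context, Proxmox calls a hookscript with the VM ID and a phase name, and the phases are pre-start, post-start, pre-stop and post-stop. The sketch below only prints what would happen in each phase and changes nothing on the system. The function name describe_phase and the messages are my own illustration, not part of the script above.

```shell
#!/bin/sh
# Describe what a hookscript would do in each phase (prints only, changes nothing).
# $1: VM ID, $2: phase, the same two arguments Proxmox passes to a hookscript
describe_phase() {
    case "$2" in
        pre-start)  echo "VM $1: about to start, leave the GPU alone" ;;
        post-start) echo "VM $1: running with the GPU passed through" ;;
        pre-stop)   echo "VM $1: about to stop, nothing to do yet" ;;
        post-stop)  echo "VM $1: stopped, rebind the GPU to amdgpu here" ;;
        *)          echo "VM $1: unknown phase '$2'" >&2; return 1 ;;
    esac
}

describe_phase 200 post-stop
```

I only act on post-stop because, as described at the top, rebinding before the VM starts left my GPU stuck.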

Now we need to add it to our VM configuration. As described in the previous step, open the .conf file of your VM (mine is /etc/pve/qemu-server/200.conf) and add the following line:

code_language.shell:

hookscript: local:snippets/vmGPU.sh
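The script has to be executable, otherwise Proxmox cannot run it. Below is a small check as a sketch: it assumes the default "local" storage, where local:snippets/ maps to /var/lib/vz/snippets, and uses my VM ID 200. The helper name hook_is_ready is hypothetical.

```shell
#!/bin/sh
# Return success if the script is executable and the VM config references a hookscript.
# $1: hookscript path, $2: VM .conf path
hook_is_ready() {
    [ -x "$1" ] && grep -q '^hookscript:' "$2" 2>/dev/null
}

if hook_is_ready /var/lib/vz/snippets/vmGPU.sh /etc/pve/qemu-server/200.conf; then
    echo "hookscript is executable and registered"
else
    echo "run chmod +x on the script and check the hookscript line"
fi
```

Instead of editing the file by hand, `qm set 200 --hookscript local:snippets/vmGPU.sh` should write the same line.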

I hope this helps someone else get the 9070 (XT / non-XT) working. Thanks for the previous comments in this thread, otherwise I wouldn't have gotten it to work!