I am trying to automate the creation of my Ubuntu VM in Proxmox using Ansible, proxmoxer, and cloud-init.

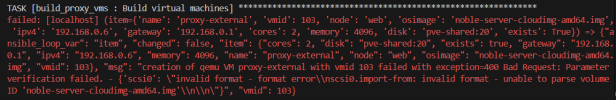

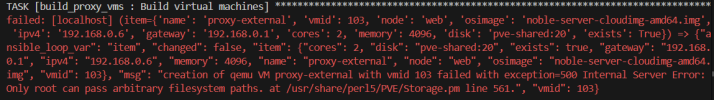

I've seen (old) tutorial videos download the Ubuntu cloud-init IMG file to the `/var/lib/vz/images` path and then successfully use the image file by using the volume name `import-from=local:images/noble-server-cloudimg-amd64.img` to initialize the attached hard drive. However, when I try this myself I get `500 Internal Server Error: unable to parse directory volume name 'images/noble-server-cloudimg-amd64.img'`.

What am I doing wrong? I know it has something to do with the right way of referring to the `/var/lib/vz/images` path, but documentation on this is pretty sparse and I've tried multiple ways of reaching this folder without any success.
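
To make the parameter in question concrete, here is a minimal sketch of how the disk string could be assembled before it is passed to proxmoxer or Ansible. The helper `build_import_disk` and the target storage name `local-lvm` are hypothetical and only there for illustration. The second variant rests on an assumption: the Proxmox documentation describes `import-from` as accepting either a volume ID or an absolute path, and as far as I understand the absolute-path form may be restricted to `root@pam`, so it may or may not apply to this setup.

```python
def build_import_disk(target_storage: str, source: str) -> str:
    """Return a disk spec of the form '<storage>:0,import-from=<source>'.

    Hypothetical helper: it only formats the string and does not talk to Proxmox.
    """
    # A size of 0 combined with import-from asks Proxmox to create the disk
    # from the given source image rather than allocating an empty one.
    return f"{target_storage}:0,import-from={source}"


IMAGE = "noble-server-cloudimg-amd64.img"

# Form taken from the tutorials; this is the one that produces
# "unable to parse directory volume name" for me.
volume_form = build_import_disk("local-lvm", f"local:images/{IMAGE}")

# Assumed alternative: reference the same file by its absolute path
# instead of a storage volume ID.
path_form = build_import_disk("local-lvm", f"/var/lib/vz/images/{IMAGE}")

print(volume_form)
print(path_form)
```

Either string would then be used as the value of the disk option (for example `scsi0`) in the VM configuration call.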