Ergo, is it pretty safe to assume this issue is fixed if we start upgrading next week?

Well, we're quite positive about that, but as Windows is weird and such big threads definitely attract many posts with different issues, there's hardly anything we can guarantee 100% in such a general way in a "monster thread" like this.

Windows VMs stuck on boot after Proxmox Upgrade to 7.0

- Thread starter lolomat

Hi everyone!

The fix included in QEMU 7.0 seems to work fine.

None of the VMs from Windows 2012 to 2022 has problems on reboot anymore.

Windows 2008 VMs never had them.

One small problem remains which, in my opinion, is related, at least in that it is of the timer / long-uptime type.

All Windows 7 VMs (and only those) are affected. I'm talking about a dozen VMs with different histories (imported from VMware, imported from bare metal, etc.), all working perfectly (within Windows limits). When they are restarted after a prolonged uptime (more than 15-20 days), typically when installing updates (yes, we will use them until 12/31 with extended security support), they hang in the reboot phase. They all fail the same way, but BEFORE the actual reboot: the screen shows that Windows is restarting and they stay there (a stop/start solves the problem, as usual). If I restart the VM after a shorter uptime, there is no problem.

It looks like something related to the problem just solved, but it shows up in the pre-reboot phase rather than immediately after the reboot.

I forgot: the problem also occurred with QEMU 6.x.

Note that the bug fixed in QEMU 7.0 is a clock counter bug that occurs mainly 1 or 2 months after the start of the VM.
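Two reports in this thread tie the hang to long uptime: the Windows 7 VMs above fail after 15-20 days, and the QEMU bug is described as appearing 1 or 2 months after VM start. Until every VM runs a fixed QEMU, one way to act on that is to plan a host-side stop+start (which cleared the hang for posters here) instead of an in-guest reboot for long-running VMs. The Python sketch below does only that bookkeeping; the 15-day threshold and the `VmStatus` / `plan_reboots` names are hypothetical, and where the uptime comes from (for example the `uptime` value shown by `qm status <vmid> --verbose`) is an assumption to verify on your setup.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple

SECONDS_PER_DAY = 86_400

# Hypothetical threshold, taken from the 15-20 day reports in this thread.
DEFAULT_THRESHOLD_DAYS = 15.0


@dataclass
class VmStatus:
    vmid: int
    uptime_seconds: int  # uptime of a running VM in seconds (data source is assumed)


def needs_cold_restart(vm: VmStatus, threshold_days: float = DEFAULT_THRESHOLD_DAYS) -> bool:
    """True if the VM has been up for at least `threshold_days` days."""
    return vm.uptime_seconds >= threshold_days * SECONDS_PER_DAY


def plan_reboots(
    vms: Iterable[VmStatus], threshold_days: float = DEFAULT_THRESHOLD_DAYS
) -> Tuple[List[int], List[int]]:
    """Split VM IDs into (stop+start from the host, in-guest reboot)."""
    cold: List[int] = []
    warm: List[int] = []
    for vm in vms:
        if needs_cold_restart(vm, threshold_days):
            cold.append(vm.vmid)
        else:
            warm.append(vm.vmid)
    return cold, warm


if __name__ == "__main__":
    vms = [VmStatus(101, 7 * SECONDS_PER_DAY), VmStatus(102, 40 * SECONDS_PER_DAY)]
    print(plan_reboots(vms))  # ([102], [101])
```

A host-side stop+start also makes the VM load the currently installed QEMU binary, which later posts in this thread say is required for the fix to apply at all.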

Note that we just moved QEMU 7.0 to the enterprise repository. It has been running well on all of our internal infrastructure for about two months, and on no-subscription for well over a week with quite a bit of positive feedback.

I already looked a few pages back in this thread, but what exactly is the mentioned fix included in QEMU 7.0 for?

Does it fix the "Guest has not initialized the display (yet)" error that appears while booting OVMF VMs?

I am asking because the error just appeared again on my Home Assistant OVMF VM.

Code:

proxmox-ve: 7.2-1 (running kernel: 5.15.53-1-pve)
pve-manager: 7.2-11 (running version: 7.2-11/b76d3178)
pve-kernel-helper: 7.2-12
pve-kernel-5.15: 7.2-10
pve-kernel-5.15.53-1-pve: 5.15.53-1
pve-kernel-5.15.39-3-pve: 5.15.39-3
pve-kernel-5.15.30-2-pve: 5.15.30-3
ceph-fuse: 15.2.16-pve1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve1
libproxmox-acme-perl: 1.4.2
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.2-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.2-2
libpve-guest-common-perl: 4.1-2
libpve-http-server-perl: 4.1-3
libpve-storage-perl: 7.2-8
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.0-3
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.2.6-1
proxmox-backup-file-restore: 2.2.6-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.1
pve-cluster: 7.2-2
pve-container: 4.2-2
pve-docs: 7.2-2
pve-edk2-firmware: 3.20220526-1
pve-firewall: 4.2-6
pve-firmware: 3.5-1
pve-ha-manager: 3.4.0
pve-i18n: 2.7-2
pve-qemu-kvm: 7.0.0-3
pve-xtermjs: 4.16.0-1
qemu-server: 7.2-4
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.7.1~bpo11+1
vncterm: 1.7-1
zfsutils-linux: 2.1.5-pve1

After trying for the whole day, the only way I found to get that VM to start again is to remove the EFI disk.

Yes, sadly for Ubuntu VMs too (3 VMs) with the current QEMU on our Ceph cluster. All the Windows errors are definitely gone.

Thank you for confirming that the error is still present for Linux and BSD guests.

Sorry... I use 28 FreeBSD VMs distributed across 6 Proxmox clusters and I have never had problems. OK, I don't use Ubuntu, only Debian, but regarding FreeBSD...?

I also experienced the issue with my OPNsense VM (FreeBSD), and because of that I reinstalled it in a SeaBIOS VM.

The thing is that the issue doesn't appear directly after VM creation and guest OS installation. It starts after some time, which can be days, weeks or even months.

Yes, I run 28 pfSense (FreeBSD) instances on similar VMs with SeaBIOS... never a problem, even after 180+ days of runtime.

Ahh, well, SeaBIOS is also fine for me! Only OVMF (UEFI) VMs are affected!
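Since several posters here report that only OVMF (UEFI) VMs show this boot/display problem while SeaBIOS VMs are fine, it helps to know which firmware each VM uses. That is recorded in the `bios` key of the VM config: SeaBIOS is the default when the key is absent, and OVMF VMs normally also carry an `efidisk0` entry (the EFI disk one poster had to remove). The Python sketch below parses the `key: value` lines printed by `qm config <vmid>`; the function names are hypothetical, and it deliberately ignores snapshot sections.

```python
from typing import Dict


def parse_vm_config(text: str) -> Dict[str, str]:
    """Parse 'key: value' lines of a VM config into a dict.

    Stops at the first '[snapshot]' section header, so only the current
    configuration is returned.
    """
    config: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and '#' description/comment lines
        if line.startswith("["):
            break  # snapshot sections start with [name]
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            config[key.strip()] = value.strip()
    return config


def firmware(config: Dict[str, str]) -> str:
    """Return the VM firmware; 'seabios' is the default when 'bios' is unset."""
    return config.get("bios", "seabios")


def has_efi_disk(config: Dict[str, str]) -> bool:
    """True if the config defines an EFI disk."""
    return "efidisk0" in config


if __name__ == "__main__":
    sample = """\
agent: 1
bootdisk: ide0
ide0: local-lvm:vm-101-disk-0,size=100G,ssd=1
ostype: win10
vga: vmware
"""
    cfg = parse_vm_config(sample)
    print(firmware(cfg), has_efi_disk(cfg))  # seabios False
```

Splitting on the first colon only keeps values such as MAC addresses (`net0: e1000=DA:EC:...`) and storage paths (`local-lvm:vm-101-disk-0`) intact.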

My Windows 10 VM hung last night after automatic updates, so I don't think the problem is fixed. It had an uptime of 7 days. I restarted the VM on the 24th of September because it was stuck on that day too. I updated to QEMU 7 before that day, but I don't remember whether my VM was restarted after that. This last time, however, it was definitely rebooted while running version 7. I am not running the Enterprise version.

Here is my pveversion output:

Code:

proxmox-ve: 7.2-1 (running kernel: 5.15.53-1-pve)
pve-manager: 7.2-11 (running version: 7.2-11/b76d3178)
pve-kernel-helper: 7.2-12
pve-kernel-5.15: 7.2-11
pve-kernel-5.13: 7.1-9
pve-kernel-5.11: 7.0-10
pve-kernel-5.0: 6.0-11
pve-kernel-5.15.60-1-pve: 5.15.60-1
pve-kernel-5.15.53-1-pve: 5.15.53-1
pve-kernel-5.15.39-3-pve: 5.15.39-3
pve-kernel-5.15.39-1-pve: 5.15.39-1
pve-kernel-5.15.35-2-pve: 5.15.35-5
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-4-pve: 5.13.19-9
pve-kernel-5.13.19-3-pve: 5.13.19-7
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.13.19-1-pve: 5.13.19-3
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.0.21-5-pve: 5.0.21-10
pve-kernel-5.0.15-1-pve: 5.0.15-1
ceph-fuse: 14.2.21-1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown: 0.8.36+pve1
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve1
libproxmox-acme-perl: 1.4.2
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.2-4
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.2-2
libpve-guest-common-perl: 4.1-2
libpve-http-server-perl: 4.1-3
libpve-storage-perl: 7.2-9
libqb0: 1.0.5-1
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.0-3
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.2.6-1
proxmox-backup-file-restore: 2.2.6-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.1
pve-cluster: 7.2-2
pve-container: 4.2-2
pve-docs: 7.2-2
pve-edk2-firmware: 3.20220526-1
pve-firewall: 4.2-6
pve-firmware: 3.5-3
pve-ha-manager: 3.4.0
pve-i18n: 2.7-2
pve-qemu-kvm: 7.0.0-3
pve-xtermjs: 4.16.0-1
qemu-server: 7.2-4
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.7.1~bpo11+1
vncterm: 1.7-1
zfsutils-linux: 2.1.5-pve1

And this is the VM config:

Code:

agent: 1
bootdisk: ide0
cores: 2
ide0: local-lvm:vm-101-disk-0,size=100G,ssd=1
ide2: none,media=cdrom
memory: 4096
name: beheer02
net0: e1000=DA:EC:F1:D0:41:2F,bridge=vmbr0,firewall=1
numa: 0
onboot: 1
ostype: win10
parent: Clean_install
scsihw: virtio-scsi-pci
smbios1: uuid=87f8a0a5-af7b-4e94-8041-fa798ae8f79e
sockets: 1
startup: order=6
usb0: host=10c4:ea60
vga: vmware
vmgenid: 9dfcbb19-38a7-4f8b-bcba-faef38f85d1a

Hi all

I have a customer with Proxmox VE 7.2-7 and a Windows Server VM. After an update the VM got stuck on reboot, so the bug is not fixed. Any update?

7.2-7? Does it use QEMU 7.0?

Hi,

How long was the VM running, approximately? Are you sure the VM was shut down and started again after installing QEMU 7.0, or migrated to a host with QEMU 7.0 installed? Where exactly did it get stuck?

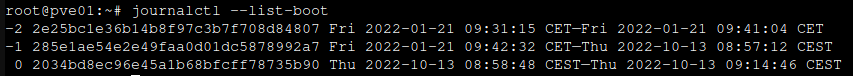

The guest needs at least a power off/power on for the fix to actually take effect. If this VM was running while you updated QEMU/PVE, it will hang at least one more time on reboot unless you stopped/started or migrated the VMs.

Hi, I upgraded Proxmox on 23rd August, but I checked now:

And as you can see, after upgrading Proxmox I didn't reboot the server; the last reboot, excluding today, was on 21 Jan 2022. Maybe the problem is because I didn't reboot the server?

OK, now I've updated Proxmox to the latest version and rebooted the server. I'll post an update if the problem remains, thanks.

Hi,

Was there any conclusion to this? Is this resolved with a specific QEMU or kernel version?

Hi,

yes, the most common issue mentioned in this thread was solved with pve-qemu-kvm >= 7.0.0-1. But it is important that the VM actually runs with a new enough version, i.e. it has been stopped+started or migrated to a host with the update. There were some other, less common issues mentioned too, and I'm not sure all of them are resolved. It might be better to open a new thread and provide details there if you are still experiencing issues.
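The answer above boils down to two checks: the installed pve-qemu-kvm package must be at least 7.0.0-1, and each VM must actually be running that new binary (it was stopped+started or migrated after the update). The Python sketch below encodes both comparisons under stated assumptions: the helper names are hypothetical, the version parser is a simplified stand-in for real Debian version comparison, and you are assumed to obtain a VM's running QEMU version yourself (recent PVE releases appear to report it as `running-qemu` in `qm status <vmid> --verbose`, but verify that on your version).

```python
from typing import Optional, Tuple

Version = Tuple[Tuple[int, ...], int]


def parse_version(version: str) -> Version:
    """Split '7.0.0-3' into ((7, 0, 0), 3).

    Simplified: handles only dotted numeric versions with an optional numeric
    Debian revision, not full Debian version syntax (epochs, '~', '+pve').
    """
    upstream, _, revision = version.partition("-")
    return tuple(int(part) for part in upstream.split(".")), int(revision or 0)


# Version named in this thread as containing the fix.
FIXED = parse_version("7.0.0-1")


def package_version(pveversion_output: str, package: str) -> Optional[str]:
    """Return the version of `package` from `pveversion -v`-style output."""
    for line in pveversion_output.splitlines():
        name, sep, rest = line.partition(":")
        if sep and name.strip() == package and rest.strip():
            return rest.split()[0]  # drop trailing notes like '(running ...)'
    return None


def installed_has_fix(version: str) -> bool:
    """True if the installed pve-qemu-kvm package is >= 7.0.0-1."""
    return parse_version(version) >= FIXED


def running_has_fix(running_qemu: str) -> bool:
    """True if a running VM's QEMU version (upstream only, e.g. '7.0.0') is >= 7.0.0."""
    return parse_version(running_qemu)[0] >= FIXED[0]


if __name__ == "__main__":
    output = "pve-manager: 7.2-11 (running version: 7.2-11/b76d3178)\npve-qemu-kvm: 7.0.0-3\n"
    installed = package_version(output, "pve-qemu-kvm")
    print(installed, installed is not None and installed_has_fix(installed))  # 7.0.0-3 True
    # A VM started before the upgrade keeps the old binary until stop+start or migration.
    print(running_has_fix("6.2.0"))  # False
```

Checking only the installed package is not enough: a VM that has not been cold-restarted or migrated since the upgrade still runs the old QEMU process, so `running_has_fix` is the check that matters per VM.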