Could be, but since we have never seen this issue on our 6.4 cluster and have only seen it on our 7.1 cluster, I'm not so sure.

A real annoying problem with Windows.

Microsoft have this problem on their own systems too. So either they run Proxmox VE on Azure (I assume not, but who knows ...) or the issue is probably not related to the Proxmox stack.

Read more on https://docs.microsoft.com/en-us/tr...achines/troubleshoot-vm-boot-configure-update

Windows VMs stuck on boot after Proxmox Upgrade to 7.0

- Thread starter lolomat


No, I'm 99% sure that can't be the problem. The reasons are in my previous posts. I don't value Windows (and I never did), just as I don't value Microsoft's policies. But in this case a correlation of low-level events is evident. With the latest Proxmox 6.4, Windows 2012, 2016 and 2019 work and update perfectly and never miss a beat. With Proxmox 7.x there are always problems, and they are perfectly repeatable. I may be wrong, but there must be a combination of low-level factors: kernel 5.13 and 5.15 and/or QEMU 6.x, along with Windows (which plays its own part).


@tom No one that we are aware of has reported "Getting Windows Ready". That is a different problem.

Has anyone reviewed the information we provided that was requested by @Moayad ?

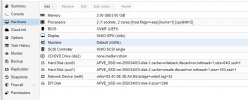

@Moayad Here is the same data from another hung VM. It is the "classic" black screen, Windows logo and endlessly spinning balls.

Power off (Stop) the VM, power on and it started normally.
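For anyone doing this from the host shell instead of the GUI, here is a minimal sketch of the same Stop/Start recovery. It assumes the standard `qm` CLI on the Proxmox node. The `power_cycle_vm` helper and the `QM` override variable are names made up for this example, not part of Proxmox.

```shell
# Hard power-cycle a hung VM: the CLI equivalent of Stop followed by Start.
# QM is a hypothetical override so the function can be dry-run (e.g. QM=echo);
# it defaults to the real Proxmox `qm` tool.
QM="${QM:-qm}"

power_cycle_vm() {
    vmid="$1"
    # Accept only a numeric VM ID.
    case "$vmid" in
        ''|*[!0-9]*)
            echo "usage: power_cycle_vm <vmid>" >&2
            return 2
            ;;
    esac
    # `qm stop` is a hard stop (like pulling the plug), not a guest shutdown.
    $QM stop "$vmid" || return 1
    $QM start "$vmid"
}
```

Running it with `QM=echo` first (for example `QM=echo; power_cycle_vm 101`) only prints the commands it would run, which is a cheap way to check the VM ID before touching a production guest.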


Hi,

Thank you for the output!


I have already reviewed the provided information and am still troubleshooting.

@Moayad if you need anything from us to investigate the issue, please let us know.

We are going crazy managing dozens and dozens of Windows VMs that get stuck after each update.


We can confirm we are having these same issues on 4 of our 6 clusters, all running Proxmox 7 with kernels 5.15.30 or newer. The ones running Proxmox 6 do not have this issue. The VMs lock up at varying stages of preboot (black screen, guest init, Proxmox spinning wheel). Since it's inconsistent we haven't been able to nail down reproducibility, but for us it does seem that reboots only lock up after a Proxmox backup (sometimes), and more likely if you reboot a bunch of VMs around the same time (IO/CPU load?). Having virtio drivers installed/not installed or the qemu agent installed/not installed has not made a difference for us, fwiw.

... for this reason I am reasonably convinced that the problem is QEMU 6.x.

@Moayad, is it possible to create a pure testing package with QEMU 7.0, or with a 5.x version? That way we could change one variable at a time to identify the problem (if debugging fails to identify it). IMHO.

We have the same problem on clusters running pve6 and pve7, never on pve5.

@andrewrf for all the VMs that have hung, are you seeing the following:

- Only Windows VMs, Server 2012 or later

- Windows does not report anything in the boot log (ntbtlog.txt), meaning it had not got far enough in booting to start that logging

- Only hangs on a reboot and only if the VM has been running for a while (i.e. not on a full power off and power back on)

- To resolve it you have to hard power off the VM and power it back on. VMs always boot up fine after the hard power off.

- You are using one of these storage types for the VM: Ceph, ZFS or NFS

- All the VMs were built before and upgraded from 6.x to 7.x

Have you had any of your VMs do this twice? We have not had the problem more than once with any one VM.

You may also want to get on the CC for the bug report: https://bugzilla.proxmox.com/show_bug.cgi?id=3933
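To make the storage question in the checklist above quicker to answer, here is a small sketch that pulls the storage IDs out of `qm config` output. `vm_storages` is a hypothetical helper name. It assumes disk lines look like `scsi0: <storage>:<volume>,...`, so check it against your own output before relying on it.

```shell
# Print the unique storage IDs used by a VM's disks.
# Reads `qm config <vmid>` output on stdin.
# Assumption: disk entries have the form "<bus><n>: <storage>:<volume>,options".
# Entries without a storage (e.g. "ide2: none,media=cdrom") are skipped;
# an ISO attached from a storage is listed as well.
vm_storages() {
    sed -n -E 's/^(scsi|virtio|sata|ide|efidisk)[0-9]+: ([^:,]+):.*/\2/p' | sort -u
}
```

Typical use on the node would be `qm config 101 | vm_storages`. Comparing the result with `pvesm status` shows which storage type (Ceph, ZFS, NFS, ...) each ID is, and `pveversion -v` plus `uname -r` give the package and kernel versions worth adding to a bug report.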

For all the VMs that have hung, are you seeing the following:

- Only Windows VMs, Server 2012 or later
  - YES, 2016 or later, and Win10 or later

- Windows does not report anything in bootlog (ntbtlog.txt) -- meaning it had not got far enough in booting to start that logging
  - UNKNOWN (I think that file only gets created if it tries to boot to safe mode, which ours don't)

- Only hangs on a reboot and only if the VM has been running for a while (ie not on full power off and power back on)
  - YES

- To resolve you have to hard power off VM and power back on. VMs always boots up fine after the hard power off.
  - YES

- You are using one of these storage types for the VM: Ceph, ZFS and NFS
  - YES, CEPH

- All the VMs were built before and upgraded from 6.x to 7.x
  - NO, we have this issue on VMs that were built in 7.x as well, and some are under 2 months old

- Have you had any of your VMs do this twice? We have not had the problem more than once with any one VM.
  - YES, we have had this issue on the same VM more than once


Yes, I can confirm exactly every step!

We also see this sometimes on Ubuntu 20.04 VMs when they do a scheduled reboot for updates.

@itNGO It is interesting that you are seeing it on Ubuntu 20.04. Not many people are seeing this. Looking back over previous posts, you just get a black screen. What is happening with your VMs seems to be slightly different. I wonder if there is a clue in that.

Would you share some details about the VMs that hang? Perhaps anything that you do that others may not be doing? Anything that might contribute to the different behaviour you are seeing.

Deleted member 116138

I'm experiencing more than boot problems on existing VMs. Even setting up new VMs is painfully slow or doesn't work.

On an AMD Threadripper Pro system with 256GB ECC RAM and 6x Enterprise NVMe in ZFS RAID1+0, setting up a simple Debian 11 VM takes 20-30 minutes with the netinstaller. Windows 11 and Windows 10 get stuck on the initial UEFI boot screen after the "Press any key to boot from DVD..." prompt has passed.

@Huch this thread is about VMs getting stuck when rebooting (mostly Windows). No one else has reported slowness related to this issue. You might have a different issue or two separate problems.

Deleted member 116138

No, I have the same problem with Windows VMs and reboots. The other problem showed up at the same time.

It is just the MetricVM with InfluxDB for Proxmox-Metric-Collection.

It auto-updates security patches and then reboots once a week, and guess what... it hangs once a week....

Please open a new thread... I believe this is not the same issue discussed here....

On a hunch, can anybody try the 5.15.35 kernel while turning some Spectre/Meltdown security mitigations off? (This shouldn't be done if you host for untrusted and/or third parties.)

You'd need to add `mitigations=off` to your kernel boot command line: https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysboot_edit_kernel_cmdline

Then don't forget to run `update-grub` or `proxmox-boot-tool refresh` and also do a full host reboot.

I ask because I could reproduce a Windows issue on an older host with outdated firmware that gets fixed by that (not all of the Spectre/Meltdown patches are at fault, just some extra ones that came in with 5.15.27-1-pve, which I'm still investigating more closely). While the symptoms are partially the same as described in this (and other similar) threads, it's a bit hard to tell for sure that this is the exact same problem most of you are facing, which may also be more than one issue.

Note that in our case we have an identical host with a newer, up-to-date BIOS/firmware, which also fixes this particular issue on this particular hardware.
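For GRUB-booted hosts, the edit could be scripted roughly as below. This is only a sketch under assumptions: it expects the parameter to go into the `GRUB_CMDLINE_LINUX_DEFAULT="..."` line of `/etc/default/grub`, and `add_kernel_param` is a made-up helper name. Hosts booted via `proxmox-boot-tool` with systemd-boot keep their command line in `/etc/kernel/cmdline` instead, which this sketch does not handle.

```shell
# Append a kernel parameter to GRUB_CMDLINE_LINUX_DEFAULT in a GRUB defaults file.
# $1 = path to the file (on a real host: /etc/default/grub), $2 = parameter.
# A backup is written to "<file>.bak" before the file is changed.
add_kernel_param() {
    grub_file="$1"
    param="$2"
    # Idempotent: leave the file alone if the parameter is already there.
    if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=.*${param}" "$grub_file"; then
        return 0
    fi
    cp "$grub_file" "${grub_file}.bak" || return 1
    # Insert the parameter just before the closing quote of the value.
    sed -E "s|^(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*)\"|\1 ${param}\"|" \
        "${grub_file}.bak" > "$grub_file"
}
```

On the host this would be called as `add_kernel_param /etc/default/grub mitigations=off`, followed by `update-grub` and a full reboot. After the reboot, `cat /proc/cmdline` should show the parameter, and `grep . /sys/devices/system/cpu/vulnerabilities/*` lists the mitigation state the running kernel reports.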
