Hello,

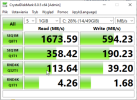

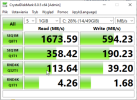

I am looking for a way to improve the performance of random reads and writes on a virtual machine running Windows Server 2022. VM configuration:

```
agent: 1
boot: order=virtio0;ide2;net0;ide0
cores: 6
cpu: qemu64
machine: pc-i440fx-9.0
memory: 16384
meta: creation-qemu=9.0.0,ctime=1724249118
name: win2022-virtio
numa: 1
ostype: win11
scsihw: virtio-scsi-single
smbios1: uuid=23024246-a995-44cc-a67d-d2af956fec5f
sockets: 2
virtio0: vms:vm-101-disk-0,discard=on,iothread=1,size=50G
vmgenid: ab52be2a-c650-4af6-b1e4-e035fb838120
```

The machine runs on a single node that also hosts Ceph, which uses the default configuration:

```
[global]
	auth_client_required = cephx
	auth_cluster_required = cephx
	auth_service_required = cephx
	cluster_network = 172.16.18.1/24
	fsid = 17f1b434-e301-4db9-b5dc-7645f8cbbb3
	mon_allow_pool_delete = true
	mon_host = 172.16.17.1
	ms_bind_ipv4 = true
	ms_bind_ipv6 = false
	osd_pool_default_min_size = 2
	osd_pool_default_size = 2
	public_network = 172.16.17.1/24

[client]
	keyring = /etc/pve/priv/$cluster.$name.keyring

[client.crash]
	keyring = /etc/pve/ceph/$cluster.$name.keyring

[mon.cf01]
	public_addr = 172.16.17.1
```

Switching the disk between VirtIO Block and SCSI does not make any meaningful difference.
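To put a number on the comparison between disk types, the same measurement can be repeated for each variant. Below is a rough sketch in Python, assuming Python is installed in the guest. The function name `random_sync_write_iops`, the test file location, the file size and the operation count are all hypothetical choices. It times queue-depth-1 random 4 KiB writes, each followed by `os.fsync()`, so every write has to reach the virtual disk before the next one starts.

```python
import os
import random
import tempfile
import time

BLOCK_SIZE = 4096  # 4 KiB, a common block size for random-I/O tests


def random_sync_write_iops(path: str, file_size: int, ops: int, seed: int = 0) -> float:
    """Time `ops` random 4 KiB writes to `path` at queue depth 1 and return IOPS.

    Every write is followed by os.fsync(), so it has to reach the virtual disk
    before the next one is issued.
    """
    blocks = file_size // BLOCK_SIZE
    if blocks < 1 or ops < 1:
        raise ValueError("need at least one block and one operation")
    rng = random.Random(seed)
    payload = os.urandom(BLOCK_SIZE)

    # Fill the whole test file first so the timed writes overwrite existing
    # data instead of allocating new (possibly sparse) regions.
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(payload)
        f.flush()
        os.fsync(f.fileno())

    with open(path, "r+b", buffering=0) as f:
        start = time.perf_counter()
        for _ in range(ops):
            f.seek(rng.randrange(blocks) * BLOCK_SIZE)  # random block-aligned offset
            f.write(payload)
            os.fsync(f.fileno())  # wait until this block is flushed to the disk
        elapsed = time.perf_counter() - start
    return ops / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    # Hypothetical test file location; the temp dir is assumed to be on the virtio0 disk.
    test_path = os.path.join(tempfile.gettempdir(), "randwrite-test.bin")
    try:
        iops = random_sync_write_iops(test_path, file_size=64 * 1024 * 1024, ops=500)
        print(f"QD1 random 4 KiB sync writes: {iops:.0f} IOPS")
    finally:
        if os.path.exists(test_path):
            os.remove(test_path)
```

Running it once with the disk attached as VirtIO Block and once as SCSI gives two directly comparable numbers. It only covers writes. Reads issued the same way would mostly be served from the guest's page cache, so random-read numbers need a tool that can bypass that cache.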

Is there any option in the configuration to improve the performance of random reads and writes?