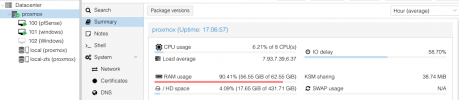

I've just installed Proxmox on my mini PC. Something looks odd: according to the Proxmox dashboard, CPU usage is very low (around 1%), but RAM usage is around 18 GB with nothing but Windows running. I allocated 20 GB of RAM to the VM, thinking that would be overkill, but it seems it's not.

Proxmox host:

- CPU: i7-1165G7
- RAM: 64 GB
- Storage: ZFS RAID 1

Windows 11 VM config:

```
root@proxmox:~# qm config 101
perl: warning: Setting locale failed.
perl: warning: Please check that your locale settings:
    LANGUAGE = (unset),
    LC_ALL = (unset),
    LC_TERMINAL = "iTerm2",
    LC_CTYPE = "UTF-8",
    LANG = "pl_PL.UTF-8"
    are supported and installed on your system.
perl: warning: Falling back to a fallback locale ("pl_PL.UTF-8").
agent: 1
bios: ovmf
boot: order=scsi0;ide2;net0;ide0
cores: 8
efidisk0: local-zfs:vm-101-disk-0,efitype=4m,pre-enrolled-keys=1,size=1M
ide0: local:iso/virtio-win-0.1.221.iso,media=cdrom,size=519030K
ide2: local:iso/Win11_Polish_x64v1.iso,media=cdrom,size=5332826K
machine: pc-q35-6.2
memory: 20480
meta: creation-qemu=6.2.0,ctime=1659462817
name: windows
net0: virtio=06:0E:8A:4F:F6:B6,bridge=vmbr0,firewall=1
numa: 0
ostype: win11
scsi0: local-zfs:vm-101-disk-1,size=300G
scsihw: virtio-scsi-pci
smbios1: uuid=bb72fafe-d117-45e2-b8aa-9fc76442af63
sockets: 1
tpmstate0: local-zfs:vm-101-disk-2,size=4M,version=v2.0
vmgenid: 7e5a0975-bae9-4985-8afe-2a23c05d2ce8
```

I would appreciate any hints on how to troubleshoot this.
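As a first troubleshooting step, it can help to read the numbers straight out of the `qm config 101` output rather than the dashboard. Below is a minimal Python sketch, assuming the output has been pasted into a string: it skips the perl locale warnings, parses the `key: value` lines, and converts `memory` (which Proxmox expresses in MiB, so `20480` is 20 GiB) to GiB for comparison with the ~18 GB the dashboard shows. It also reports whether a `balloon` key is set; `balloon` is a real `qm` option, but whether it explains the usage here is an open question. The helper names `parse_qm_config` and `memory_report` are hypothetical.

```python
import re

# Config lines look like "key: value" with lowercase alphanumeric keys
# (e.g. "memory", "scsi0", "tpmstate0"). The indented locale details and
# the shell prompt line do not match this pattern.
CONFIG_LINE = re.compile(r"^([a-z][a-z0-9]*): (.*)$")


def parse_qm_config(text: str) -> dict[str, str]:
    """Parse `qm config` output into {key: raw value} (hypothetical helper)."""
    config = {}
    for raw in text.splitlines():
        line = raw.strip()
        # "perl: warning: ..." would otherwise match the key pattern.
        if line.startswith("perl:"):
            continue
        match = CONFIG_LINE.match(line)
        if match:
            config[match.group(1)] = match.group(2)
    return config


def memory_report(config: dict[str, str]) -> dict:
    """Summarize the VM's configured memory (hypothetical helper)."""
    memory_mib = int(config["memory"])  # Proxmox stores this value in MiB
    return {
        "memory_mib": memory_mib,
        "memory_gib": memory_mib / 1024,
        "balloon_set": "balloon" in config,
    }


# Excerpt of the output posted above.
SAMPLE = """\
root@proxmox:~# qm config 101
perl: warning: Setting locale failed.
    LANGUAGE = (unset),
agent: 1
memory: 20480
name: windows
"""

if __name__ == "__main__":
    cfg = parse_qm_config(SAMPLE)
    print(memory_report(cfg))
    # prints {'memory_mib': 20480, 'memory_gib': 20.0, 'balloon_set': False}
```

With 20 GiB configured, the observed ~18 GB is roughly 90% of the VM's allocation, which is the figure worth explaining.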
