As the title says, the machine was running fine, but at some point I was no longer able to boot it. It gets stuck on a blue screen saying "Automatic Repair mode" and does not get past it.

I'm really stuck here. I can get into Windows Safe Mode, and I found the Memory.dmp, but unfortunately I had not installed the Windows debugging tools, so I cannot view it with dumpchk.

I have read several threads, but nothing helped. I have two backups, but restoring them seems to lead to the same problem.

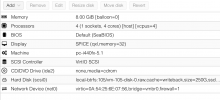

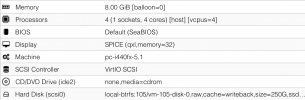

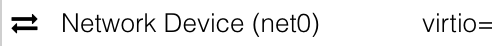

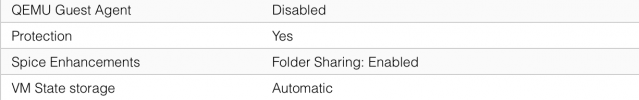

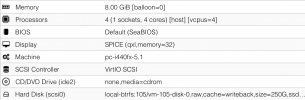

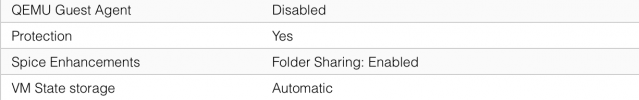

I think some setting in Proxmox could solve this problem, but I really do not know which one, or where the problem comes from.