Hi all,

I have a cluster of 4 Proxmox servers, all running version 6.2-4, a version I would prefer not to upgrade unless I absolutely have to.

I have a preinstalled Windows 10 VM template (4 CPU cores, 6 GB RAM, minimal hardware) from which I create linked clones.

When I create ~30 linked clones at once (programmatically through the API, of course) and start them all together, one or two of those 30 VMs hang during boot. It is not necessarily the first boot of that clone. However, if I power the VM off and on again, it boots normally.
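For anyone trying to reproduce this, here is a rough CLI sketch of the scenario. It is not the exact API script I use: it assumes it runs on the node holding the template, the template VMID and the new VMID range are hypothetical arguments, and the clone names (`win10-clone-<id>`) are made up. `qm clone ... --full 0` and `qm start` are the CLI counterparts of the `POST /nodes/{node}/qemu/{vmid}/clone` and `POST /nodes/{node}/qemu/{vmid}/status/start` API calls. `DRY_RUN` defaults to 1, so it only prints the commands.

```shell
#!/bin/sh
# Rough reproduction sketch: N linked clones of one template, all started at once.
# Assumptions: run on the node that holds the template; template VMID and the
# new VMID range are hypothetical arguments. DRY_RUN defaults to 1, which only
# prints the qm commands; set DRY_RUN=0 to actually execute them.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Create COUNT linked clones of TEMPLATE with VMIDs FIRST .. FIRST+COUNT-1.
# Clones are created one after another to keep the sketch simple.
make_linked_clones() {
    template=$1 first=$2 count=$3
    i=0
    while [ "$i" -lt "$count" ]; do
        id=$((first + i))
        # --full 0 asks for a linked clone (the source must be a template)
        run qm clone "$template" "$id" --name "win10-clone-$id" --full 0
        i=$((i + 1))
    done
}

# Fire off all starts in parallel, then wait for every background job.
start_all_at_once() {
    first=$1 count=$2
    i=0
    while [ "$i" -lt "$count" ]; do
        run qm start "$((first + i))" &
        i=$((i + 1))
    done
    wait
}
```

For example, `DRY_RUN=1 make_linked_clones 9000 200 30` prints the 30 clone commands, and `DRY_RUN=0 start_all_at_once 200 30` would actually start all 30 VMs in parallel. Only the starts run concurrently here.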

If I take a snapshot including RAM while the VM is hung and then roll back to it, it continues to hang.

If I edit the VM's `<vmid>.conf` to change the CPU count or RAM, the restored system continues to boot (lol, wut?).

Usually the console shows a black screen with "Guest has not initialized the display (yet)." Sometimes it is stuck on the Windows logo with the spinner frozen.

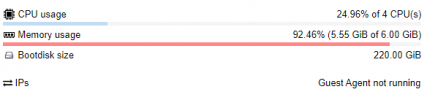

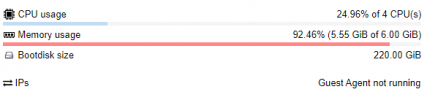

One CPU core is fully utilized, so the Proxmox UI shows a constant 25% CPU usage (one of the four cores). RAM usage is also constant: sometimes high, as shown, sometimes low (15%).
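The 25% figure is what one saturated vCPU out of four looks like, assuming the summary reports usage relative to the VM's allocated cores. A trivial helper (hypothetical name) to sanity-check the number for other core counts:

```shell
#!/bin/sh
# Share of a VM's total CPU capacity consumed by BUSY saturated vCPUs out of
# VCPUS allocated, as an integer percentage (integer division truncates).
# Assumes usage is reported relative to the VM's allocated vCPUs.
cpu_share_pct() {
    busy=$1 vcpus=$2
    echo $((100 * busy / vcpus))
}
```

`cpu_share_pct 1 4` prints 25; with 8 cores, a single stuck core would show up as about 12%.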


It still seems somewhat interactive through `qm monitor`, although some commands fail.

Configuration changes I tried, to no avail:

- Increasing and decreasing the CPU core count

- Increasing and decreasing the RAM

- Trying both Windows 10 1909 and 22H2

Have you guys ever encountered this kind of behavior? I searched around, but it doesn't seem like anyone else has run into this.

```shell
~# pveversion -v
proxmox-ve: 6.2-1 (running kernel: 5.4.34-1-pve)
pve-manager: 6.2-4 (running version: 6.2-4/9824574a)
pve-kernel-5.4: 6.2-1
pve-kernel-helper: 6.2-1
pve-kernel-5.4.34-1-pve: 5.4.34-2
ceph-fuse: 12.2.11+dfsg1-2.1+b1
corosync: 3.0.3-pve1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: 0.8.35+pve1
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.15-pve1
libproxmox-acme-perl: 1.0.3
libpve-access-control: 6.1-1
libpve-apiclient-perl: 3.0-3
libpve-common-perl: 6.1-2
libpve-guest-common-perl: 3.0-10
libpve-http-server-perl: 3.0-5
libpve-storage-perl: 6.1-7
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.2-1
lxcfs: 4.0.3-pve2
novnc-pve: 1.1.0-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.2-1
pve-cluster: 6.1-8
pve-container: 3.1-5
pve-docs: 6.2-4
pve-edk2-firmware: 2.20200229-1
pve-firewall: 4.1-2
pve-firmware: 3.1-1
pve-ha-manager: 3.0-9
pve-i18n: 2.1-2
pve-qemu-kvm: 5.0.0-2
pve-xtermjs: 4.3.0-1
qemu-server: 6.2-2
smartmontools: 7.1-pve2
spiceterm: 3.1-1
vncterm: 1.6-1
zfsutils-linux: 0.8.3-pve1
```
