I have a freshly installed Proxmox server 6.3.3 with 2x WD Red 4 TB drives.

Previously the drives ran in the same configuration, as a mirror, under PVE 6.1.2 for over 12 months with good speed.

Now everything is extremely slow. The backup of the VM to disk is only at 52% after 10 hours. Previously the backup took at most 4 hours.

The setup is:

- Proxmox server with 32 GB RAM
- 2x Western Digital 4 TB as a ZFS mirror
- 1 VM with:
  - ZVOL vm-100-disk-0 (Windows Server 2019) -> zpool datastore
  - ZVOL vm-301-disk-1 (Oracle SQL database) -> zpool datastore

Updated to the "virt-IO 0.1.190" drivers -> no improvement

smartctl: OK

scrub: OK

Rolled back to PVE 6.1.2 -> no improvement

Reads are very, very slow!

- A backup with the built-in Proxmox backup (GUI) took 10 hours to reach 52%; previously it completed 100% in 4 hours. Incredible!

- The VM starts and runs extremely slowly.
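To put the slowdown into numbers, here is a rough calculation from the two backup figures above. It is only a sketch and assumes the backup progresses at a constant rate, which the GUI percentage does not guarantee.

```python
# Rough backup-throughput comparison.
# Assumption: progress is roughly linear in time (not guaranteed by the GUI).

def rate_percent_per_hour(percent_done: float, hours: float) -> float:
    """Average backup progress in percent per hour."""
    return percent_done / hours

old_rate = rate_percent_per_hour(100.0, 4.0)   # earlier: 100 % in (at most) 4 h
new_rate = rate_percent_per_hour(52.0, 10.0)   # now: 52 % after 10 h

slowdown = old_rate / new_rate                 # how many times slower it is now
projected_hours = 100.0 / new_rate             # full backup at the current pace

print(f"old rate:  {old_rate:.1f} %/h")
print(f"new rate:  {new_rate:.1f} %/h")
print(f"slowdown:  {slowdown:.1f}x")
print(f"projected: {projected_hours:.1f} h for 100 %")
```

Since 4 hours was the maximum earlier, the backup is now at least about 4.8x slower, and a full run at the current pace would take roughly 19 hours.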

Is this a Proxmox bug, or can someone tell me what is going wrong? Perhaps a problem with the "blocksize"?

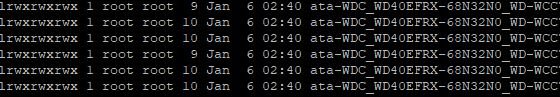

2x WD Red EFRC, each with 2 partitions:

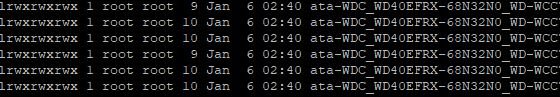

`ls -l /dev/disk/by-id`:

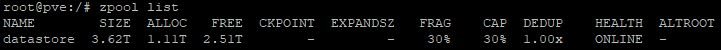

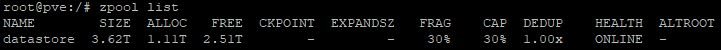

`zpool list`:

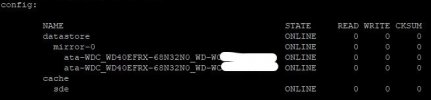

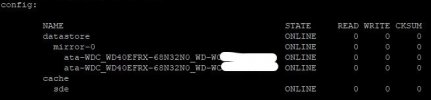

`zpool status`:

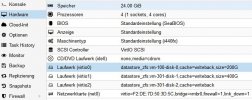

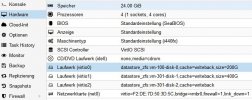

VM configuration:
