FYI: I found the problematic commit. Sadly, it is not a real bug but a correction to the ACPI device UIDs that makes QEMU behave in a more standards-conformant way. As it's late on a Saturday, and this is not something that can be decided alone, I cannot provide a final solution yet. Instead, I've informed the original author of the patch and some other QEMU and EDK2 maintainers with good in-depth knowledge of all the pieces involved (mostly firmware like SeaBIOS/OVMF, and QEMU itself), so that we can get to a sane, future-proof solution, if there is one, or at least a workaround or a better-defined upgrade path for this behaviour.

Until then, if you want to stay on QEMU 5.2, you can use the following package I built. It has only that commit reverted and makes my reproducer happy again (note: the other new "ghost" device won't go away, but the original Ethernet adapter will be in use again from Windows' point of view).

You can download and install that build by doing:

Bash:

wget http://download.proxmox.com/temp/pve-qemu-5.2-with-acpi-af1b80ae56-reverted/pve-qemu-kvm_5.2.0-2%2B1~windevfix_amd64.deb

# verify the checksum; the output should match the line below:

sha256sum pve-qemu-kvm_5.2.0-2+1~windevfix_amd64.deb

# 33e8ce10b5a4005160c68f79c979d53b1a84a1d79436adbd00c48ec93d3bf1de  pve-qemu-kvm_5.2.0-2+1~windevfix_amd64.deb

# install it

apt install ./pve-qemu-kvm_5.2.0-2+1~windevfix_amd64.deb
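If you would rather not compare the checksum by eye, the check can be scripted. The following is only a minimal sketch: `verify_sha256` is a hypothetical helper name I made up for illustration, and it assumes GNU coreutils `sha256sum` is available (as it is on a standard Proxmox VE install).

```shell
#!/usr/bin/env bash
# Sketch: verify a file against an expected SHA-256 digest.
# verify_sha256 is a hypothetical helper, not part of any package.
verify_sha256() {
    local file="$1" expected="$2"
    # sha256sum --check reads "DIGEST  FILENAME" lines (two spaces) from
    # stdin and exits non-zero if the digest does not match.
    printf '%s  %s\n' "$expected" "$file" | sha256sum --check --quiet -
}
```

With that defined, `verify_sha256 pve-qemu-kvm_5.2.0-2+1~windevfix_amd64.deb 33e8ce10b5a4005160c68f79c979d53b1a84a1d79436adbd00c48ec93d3bf1de && apt install ./pve-qemu-kvm_5.2.0-2+1~windevfix_amd64.deb` would only install the package if the digest matches.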

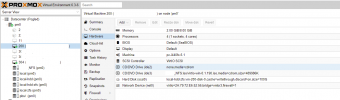

After installation, do a fresh boot of the VM. If unsure, shut it down completely and then start it again through the Proxmox VE web interface.
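The same full stop and start can also be done from the host shell with the `qm` tool. This is just a sketch: `fresh_boot_vm` is a hypothetical wrapper name, and the VMID `100` in the usage comment is a placeholder for your own VM's ID. The point is that the VM process has to be stopped and started anew; a reboot triggered from inside the guest may keep the already running QEMU process, and with it the old binary.

```shell
#!/usr/bin/env bash
# Sketch: fully stop a VM and start it again so that it runs on the
# newly installed QEMU binary. fresh_boot_vm is a hypothetical helper.
fresh_boot_vm() {
    local vmid="$1"
    # Cleanly shut the guest down, then start a new VM process;
    # the start is skipped if the shutdown fails.
    qm shutdown "$vmid" && qm start "$vmid"
}

# Usage (100 is a placeholder VMID):
#   fresh_boot_vm 100
```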