POST NETWORK BONDING UPDATE

As you know, I was going to make some major changes to the Proxmox/CEPH cluster to increase network bandwidth. I am happy to say all the changes seem to be working fine, although there were some unforeseen glitches which resulted in server reboots and interrupted access to all VMs. Below are the main changes that took place:

1. Each Proxmox node has been upgraded to 3 Gbps of network bandwidth using NIC bonding.

2. Addition of a 3rd CEPH node.

3. Rearrangement of the Proxmox network bridges.

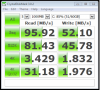

This is what CrystalDiskMark reported before any changes:

This is what it reported after the changes took place:

This is how the cluster looked while CrystalDiskMark was running:

I ran the benchmark multiple times on different VMs to make sure the results were consistent. They came within a few decimal points of each other. Benchmarks were done on Windows 7 Pro virtual machines with writeback cache enabled. Could some of you please run some benchmarks and see how the results stack up? I would like to know how realistic these new numbers are.
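For anyone comparing their own numbers, a rough ceiling can help judge sequential results. The sketch below is only arithmetic under stated assumptions: it treats the 3 Gbps bond as one ideal pipe, uses decimal units, and ignores protocol and CEPH replication overhead. The helper name `link_ceiling_mb_per_s` is made up for illustration. Results well above this ceiling may be coming from the writeback cache rather than from the network.

```python
def link_ceiling_mb_per_s(gbps: float) -> float:
    """Convert a link rate in Gbit/s to MB/s.

    Assumptions (not measured on the cluster): decimal units,
    no protocol overhead, and the bond behaving as one ideal link.
    """
    bits_per_second = gbps * 1_000_000_000
    bytes_per_second = bits_per_second / 8
    return bytes_per_second / 1_000_000


if __name__ == "__main__":
    # 3 Gbps bonded link from the upgrade described above
    print(link_ceiling_mb_per_s(3))  # prints 375.0
```

A single 1 Gbps link works out to 125 MB/s by the same arithmetic, so that is a handy reference point for the "before" numbers.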

A new 3rd node was added to the CEPH cluster with three 2TB SATA HDDs. The 3rd image shows the cluster stats while one of the benchmarks was running. nload and htop were used to show the stats.

This one is purely for testing. I configured one of the Windows 7 VMs with 16 cores to see how it performs under Proxmox. What CPU scores are you guys getting for your machines?