When I migrate an Ubuntu VM from pve0 to pve1 or pve2 in the cluster, I lose VNC access. When I move the VM back to pve0, I regain VNC access.

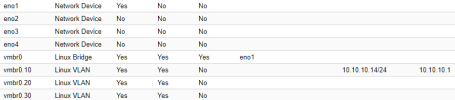

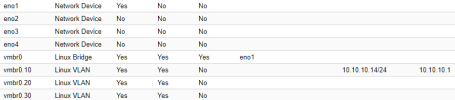

Environment:

- 3 identical hosts in a Proxmox cluster.

- Ubuntu 23.04.3 Minimal with qemu-guest-agent

The VM lives on VLAN 20. I don't want the VMs to have access to the hosts, which is why the hosts have no IP address on VLAN 20.

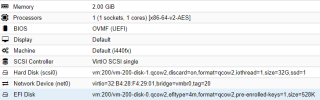

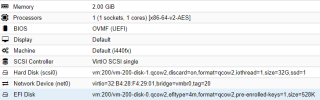

VM structure.

Migration logs

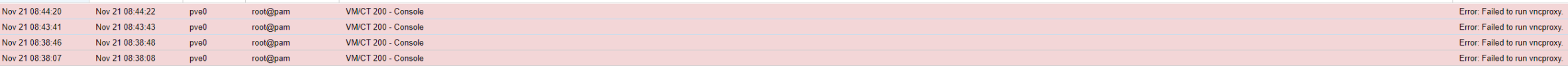

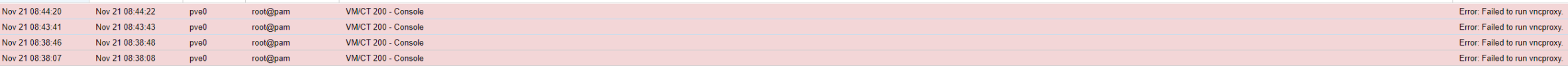

Error.

One thing I find very odd: the errors still show pve0, not pve1, where the VM now is.

Thanks for your help,

Brad

Migration log:

```
2023-11-21 08:37:37 starting migration of VM 200 to node 'pve2' (10.10.10.16)
2023-11-21 08:37:37 found local disk 'vm:200/vm-200-disk-0.qcow2' (attached)
2023-11-21 08:37:37 found local disk 'vm:200/vm-200-disk-1.qcow2' (attached)
2023-11-21 08:37:37 starting VM 200 on remote node 'pve2'
2023-11-21 08:37:41 volume 'vm:200/vm-200-disk-0.qcow2' is 'vm:200/vm-200-disk-0.qcow2' on the target
2023-11-21 08:37:41 volume 'vm:200/vm-200-disk-1.qcow2' is 'vm:200/vm-200-disk-1.qcow2' on the target
2023-11-21 08:37:41 start remote tunnel
2023-11-21 08:37:42 ssh tunnel ver 1
2023-11-21 08:37:42 starting storage migration
2023-11-21 08:37:42 scsi0: start migration to nbd:unix:/run/qemu-server/200_nbd.migrate:exportname=drive-scsi0
drive mirror is starting for drive-scsi0
drive-scsi0: transferred 2.9 GiB of 32.0 GiB (8.94%) in 1s
drive-scsi0: transferred 3.2 GiB of 32.0 GiB (9.89%) in 2s
drive-scsi0: transferred 5.3 GiB of 32.0 GiB (16.48%) in 3s
drive-scsi0: transferred 5.6 GiB of 32.0 GiB (17.57%) in 4s
drive-scsi0: transferred 6.0 GiB of 32.0 GiB (18.84%) in 5s
drive-scsi0: transferred 6.5 GiB of 32.0 GiB (20.33%) in 6s
drive-scsi0: transferred 6.9 GiB of 32.0 GiB (21.60%) in 7s
drive-scsi0: transferred 7.3 GiB of 32.0 GiB (22.95%) in 8s
drive-scsi0: transferred 7.6 GiB of 32.0 GiB (23.88%) in 9s
drive-scsi0: transferred 9.0 GiB of 32.0 GiB (28.25%) in 11s
drive-scsi0: transferred 13.0 GiB of 32.0 GiB (40.69%) in 12s
drive-scsi0: transferred 15.3 GiB of 32.0 GiB (47.74%) in 13s
drive-scsi0: transferred 15.6 GiB of 32.0 GiB (48.74%) in 14s
drive-scsi0: transferred 17.4 GiB of 32.0 GiB (54.36%) in 15s
drive-scsi0: transferred 32.0 GiB of 32.0 GiB (100.00%) in 16s, ready
all 'mirror' jobs are ready
2023-11-21 08:37:58 efidisk0: start migration to nbd:unix:/run/qemu-server/200_nbd.migrate:exportname=drive-efidisk0
drive mirror is starting for drive-efidisk0
drive-efidisk0: transferred 0.0 B of 528.0 KiB (0.00%) in 0s
drive-efidisk0: transferred 528.0 KiB of 528.0 KiB (100.00%) in 1s, ready
all 'mirror' jobs are ready
2023-11-21 08:37:59 starting online/live migration on unix:/run/qemu-server/200.migrate
2023-11-21 08:37:59 set migration capabilities
2023-11-21 08:37:59 migration downtime limit: 100 ms
2023-11-21 08:37:59 migration cachesize: 256.0 MiB
2023-11-21 08:37:59 set migration parameters
2023-11-21 08:37:59 start migrate command to unix:/run/qemu-server/200.migrate
2023-11-21 08:38:00 migration active, transferred 100.7 MiB of 2.0 GiB VM-state, 310.8 MiB/s
2023-11-21 08:38:01 migration active, transferred 267.6 MiB of 2.0 GiB VM-state, 2.3 GiB/s
2023-11-21 08:38:02 migration active, transferred 450.5 MiB of 2.0 GiB VM-state, 972.0 MiB/s
2023-11-21 08:38:03 average migration speed: 517.2 MiB/s - downtime 424 ms
2023-11-21 08:38:03 migration status: completed
all 'mirror' jobs are ready
drive-efidisk0: Completing block job_id...
drive-efidisk0: Completed successfully.
drive-scsi0: Completing block job_id...
drive-scsi0: Completed successfully.
drive-efidisk0: mirror-job finished
drive-scsi0: mirror-job finished
2023-11-21 08:38:04 stopping NBD storage migration server on target.
2023-11-21 08:38:11 migration finished successfully (duration 00:00:35)
TASK OK
```

Error:

```
LC_PVE_TICKET not set, VNC proxy without password is forbidden
TASK ERROR: Failed to run vncproxy.
```