That article was updated yesterday to include that information, as you can see in the history: https://pve.proxmox.com/mediawiki/index.php?title=Upgrade_from_6.x_to_7.0&action=history

VM shutdown, KVM: entry failed, hardware error 0x80000021

- Thread starter tzzz90


After 2 weeks, my Windows Server 2019 VM was shut down again:

```
proxmox-ve: 7.2-1 (running kernel: 5.15.35-2-pve)
pve-manager: 7.2-4 (running version: 7.2-4/ca9d43cc)
pve-kernel-5.15: 7.2-4
pve-kernel-helper: 7.2-4
pve-kernel-5.13: 7.1-9
pve-kernel-5.11: 7.0-10
pve-kernel-5.15.35-2-pve: 5.15.35-5
pve-kernel-5.15.35-1-pve: 5.15.35-3
pve-kernel-5.15.30-2-pve: 5.15.30-3
pve-kernel-5.13.19-6-pve: 5.13.19-15
pve-kernel-5.13.19-2-pve: 5.13.19-4
pve-kernel-5.11.22-7-pve: 5.11.22-12
pve-kernel-5.11.22-4-pve: 5.11.22-9
ceph-fuse: 15.2.14-pve1
corosync: 3.1.5-pve2
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve1
libproxmox-acme-perl: 1.4.2
libproxmox-backup-qemu0: 1.3.1-1
libpve-access-control: 7.2-2
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.2-2
libpve-guest-common-perl: 4.1-2
libpve-http-server-perl: 4.1-2
libpve-storage-perl: 7.2-4
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 4.0.12-1
lxcfs: 4.0.12-pve1
novnc-pve: 1.3.0-3
proxmox-backup-client: 2.2.3-1
proxmox-backup-file-restore: 2.2.3-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.5.1
pve-cluster: 7.2-1
pve-container: 4.2-1
pve-docs: 7.2-2
pve-edk2-firmware: 3.20210831-2
pve-firewall: 4.2-5
pve-firmware: 3.4-2
pve-ha-manager: 3.3-4
pve-i18n: 2.7-2
pve-qemu-kvm: 6.2.0-10
pve-xtermjs: 4.16.0-1
qemu-server: 7.2-3
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.7.1~bpo11+1
vncterm: 1.7-1
zfsutils-linux: 2.1.4-pve1
```

And did you follow the guide? https://pve.proxmox.com/mediawiki/index.php?title=Upgrade_from_6.x_to_7.0&action=history
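Since several posters compare which kernel they are actually booted into (5.13 versus 5.15 builds), a small parser for `pveversion -v` output can help answer that quickly. This is a hypothetical Python sketch, assuming the usual one-package-per-line `name: version` layout; `running_kernel`, `installed_packages`, and `installed_kernels` are illustrative names, not part of any Proxmox API.

```python
import re
from typing import Dict, List, Optional

# Matches the "(running kernel: X)" note printed on the proxmox-ve line.
RUNNING_KERNEL_RE = re.compile(r"\(running kernel:\s*([^)\s]+)\)")
# Matches "package-name: version" at the start of each line; trailing notes are ignored.
PACKAGE_LINE_RE = re.compile(r"^([\w.+~-]+):\s+(\S+)", re.MULTILINE)


def running_kernel(output: str) -> Optional[str]:
    """Return the running kernel ABI string, e.g. '5.15.35-2-pve', or None."""
    match = RUNNING_KERNEL_RE.search(output)
    return match.group(1) if match else None


def installed_packages(output: str) -> Dict[str, str]:
    """Map each package name to its version string."""
    return {name: version for name, version in PACKAGE_LINE_RE.findall(output)}


def installed_kernels(packages: Dict[str, str]) -> List[str]:
    """List kernel ABI names from per-ABI packages such as 'pve-kernel-5.13.19-6-pve'.

    Meta-packages like 'pve-kernel-5.15' or 'pve-kernel-helper' are skipped
    because they do not end in '-pve'. The sort is plain string order.
    """
    prefix = "pve-kernel-"
    return sorted(
        name[len(prefix):]
        for name in packages
        if name.startswith(prefix) and name.endswith("-pve")
    )
```

On a host, the input text could come from `subprocess.run(["pveversion", "-v"], capture_output=True, text=True).stdout`. Note that the sort is lexicographic, not version-aware, which is fine for listing but not for deciding which kernel is "newer".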

@Stoiko Ivanov Giving it a go... Unpinned kernel 5.13.19-6-pve and set kvm.tdp_mmu=N.

I don't have a reliable way to recreate the issue, but my Windows 11 VM would usually dump on me within a few days' time. I'll report back!
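For readers wondering how to "set kvm.tdp_mmu=N": it is a parameter of the kvm kernel module, so it is usually added to the kernel command line, either in `GRUB_CMDLINE_LINUX_DEFAULT` in `/etc/default/grub` followed by `update-grub`, or in `/etc/kernel/cmdline` followed by `proxmox-boot-tool refresh` on systemd-boot hosts, and it takes effect after a reboot. The Python sketch below is a hypothetical helper, not a Proxmox tool: `with_kernel_param` sets the option in a command-line string without duplicating it, and `tdp_mmu_enabled` reads the live value from `/sys/module/kvm/parameters/tdp_mmu`, assuming the running kernel exposes that parameter (boolean module parameters read back as `Y` or `N`).

```python
from pathlib import Path
from typing import Optional

# Standard sysfs location of kvm module parameters. It only exists when the kvm
# module is loaded and the running kernel provides the tdp_mmu parameter.
TDP_MMU_SYSFS = Path("/sys/module/kvm/parameters/tdp_mmu")


def with_kernel_param(cmdline: str, key: str, value: str) -> str:
    """Return cmdline with key=value present exactly once.

    Existing bare or key=... tokens are dropped first, so running it
    repeatedly does not duplicate the option.
    """
    tokens = [t for t in cmdline.split() if t != key and not t.startswith(key + "=")]
    tokens.append(f"{key}={value}")
    return " ".join(tokens)


def tdp_mmu_enabled(path: Path = TDP_MMU_SYSFS) -> Optional[bool]:
    """Return True for 'Y', False for anything else, None if unreadable."""
    try:
        raw = path.read_text().strip()
    except OSError:
        return None
    return raw == "Y"


if __name__ == "__main__":
    # Hypothetical current command line, as it might appear in /etc/kernel/cmdline.
    current = "root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet"
    print(with_kernel_param(current, "kvm.tdp_mmu", "N"))
    # -> root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet kvm.tdp_mmu=N
    print("TDP MMU enabled:", tdp_mmu_enabled())
```

After rebooting with the new command line, `tdp_mmu_enabled()` should return `False` if the workaround is active.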

It's been about a week and I haven't had any problems with normal usage after applying the workaround.

Here's a breakdown of my setup:

- ASUS ROG Strix Z490-E Gaming Motherboard (BIOS 2403 - 2021/12/16)

- Intel Core i9-10850K Processor

- G.Skill Ripjaws V 64 GB (4 x 16 GB) DDR4-3600 CL16 Memory

- EVGA GeForce RTX 3060 Ti 8 GB XC GAMING Video Card

- intel-microcode is installed

- Latest updates from enterprise repository

- Windows 11 VM with GPU passthrough used as "daily driver"

- Mix of Ubuntu VMs and LXC containers

Thanks to all those who provided the fix and confirmed it's working for them. After repeated random shutdowns myself, the kvm.tdp_mmu=N setting worked for me.

Question - Does it mean this isn't an issue for anyone who's set up a new Proxmox 7.2 install, and only a problem for those who've upgraded from 6.x to 7?


I did not upgrade from 6.x to 7 and I had this issue. Now after applying the change kvm.tdp_mmu=N I have been stable for over 48 hours which is the longest yet for me.

Can confirm: the newest kernel plus kvm.tdp_mmu=N for older CPUs finally helps.

I didn't realize 10th Gen Intel chips were considered old. They were available in pre-built systems on Newegg about a year ago lol


What's considered an older CPU? Also out of curiosity what does that setting do?

I think the statement about older CPUs is misleading. I have an 11th-gen Intel host with this issue, yet another system with a 9th-gen Intel CPU is fine.

kvm.tdp_mmu=N seems to have helped though. It's been 2 days now.

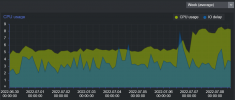

Somehow CPU usage went up a little after applying kvm.tdp_mmu=N, though. Not sure if it's related, but it happened right when I went back from the 5.13 kernel to 5.15 and applied the kvm.tdp_mmu=N workaround.



Interesting, good catch on the higher CPU usage. I didn't pay attention to whether there's a difference.


This setting seems to prevent Windows Server 2019 and 2022 VMs from randomly crashing on Proxmox 7.0.


What a weird setting, surely you wouldn't want your Windows VMs to crash.

I checked... and I also see a slight bump in CPU usage. Nothing too drastic, just a few percent higher on average.

From kernel PVE 5.15.35-6 (Fri, 17 Jun 2022 13:42:35 +0200) on, no more crashes have happened.

With kvm.tdp_mmu set as well, or only the new kernel and nothing else?

Only with the new kernel.

My machine type is pc-q35-5.2; I don't know whether version 6 works too.

I saw a fix for io_uring in backups in the changelogs of the kernel and backup packages, and I think it may have fixed the bug.

It used to crash every day, and now it has been running for 10 days.

Still stable... so it looks like this, or PVE 5.15.35-6 (Fri, 17 Jun 2022 13:42:35 +0200), finally fixes it.

I updated to the latest kernel, PVE 5.15.39-1, and it was unstable until I applied the kvm.tdp_mmu=N option. Since applying that option it has been stable.

I also have this issue with two up-to-date Dell R720s in a cluster. Occasionally some VMs fail with that error.

This only happens to certain VMs, not all Windows VMs. I'm running Xeon E5-2640 and E5-2643 CPUs in a cluster with Ceph.

I will try applying the kvm.tdp_mmu=N option and see if that fixes it.
