VM migration to HA

- Thread starter frankz

Hello everyone, some time ago I set up VM migration with the policy defined in the "migration" menu. I noticed that, although I did not define any node priority, the migration only ever targets 2 of the nodes. Can anyone give me an explanation?


Hi and thank you for replying.

Could you include the output of pveversion -v? There was an issue in pve-ha-manager where resource distribution didn't work properly in some edge cases, and it got fixed recently.

Code:

proxmox-ve: 7.2-1 (running kernel: 5.15.39-3-pve)

pve-manager: 7.2-7 (running version: 7.2-7/d0dd0e85)

pve-kernel-5.15: 7.2-8

pve-kernel-helper: 7.2-8

pve-kernel-5.13: 7.1-9

pve-kernel-5.0: 6.0-11

pve-kernel-5.15.39-3-pve: 5.15.39-3

pve-kernel-5.15.39-2-pve: 5.15.39-2

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-2-pve: 5.13.19-4

pve-kernel-5.0.21-5-pve: 5.0.21-10

pve-kernel-5.0.15-1-pve: 5.0.15-1

ceph-fuse: 14.2.21-1

corosync: 3.1.5-pve2

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: 0.8.36+pve1

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve1

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-4

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.2-2

libpve-guest-common-perl: 4.1-2

libpve-http-server-perl: 4.1-3

libpve-storage-perl: 7.2-7

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.2.5-1

proxmox-backup-file-restore: 2.2.5-1

proxmox-mini-journalreader: 1.3-1

proxmox-widget-toolkit: 3.5.1

pve-cluster: 7.2-2

pve-container: 4.2-2

pve-docs: 7.2-2

pve-edk2-firmware: 3.20210831-2

pve-firewall: 4.2-5

pve-firmware: 3.5-1

pve-ha-manager: 3.4.0

pve-i18n: 2.7-2

pve-qemu-kvm: 6.2.0-11

pve-xtermjs: 4.16.0-1

qemu-server: 7.2-3

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.7.1~bpo11+1

vncterm: 1.7-1

zfsutils-linux: 2.1.5-pve1

Yes Fabian, what I wrote is that whenever I reboot one of the 3 nodes, the VMs are always migrated to pve4. The cluster consists of 3 nodes: pve, pve3 and pve4. If I reboot pve4, the VMs are migrated to pve. In short, it seems that pve3 is ignored...

Okay, that's already the newest version. Could you describe in detail what you are seeing and what you are expecting, and include all the relevant config files/logs? Thanks!

How many resources are there, and how many of them are on each node prior to the reboot?

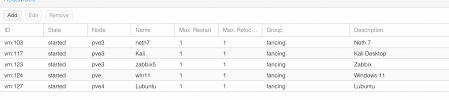


Could you post the output of ha-manager status?

Code:

root@pve:~# ha-manager status

quorum OK

master pve (active, Wed Aug 17 15:58:22 2022)

lrm pve (active, Wed Aug 17 15:58:18 2022)

lrm pve3 (active, Wed Aug 17 15:58:26 2022)

lrm pve4 (idle, Wed Aug 17 15:58:24 2022)

service vm:103 (pve3, started)

service vm:117 (pve3, started)

service vm:123 (pve3, started)

service vm:124 (pve, started)

service vm:127 (pve3, started)

root@pve:~# ha-manager status

quorum OK

master pve (active, Wed Aug 17 16:00:12 2022)

lrm pve (active, Wed Aug 17 16:00:08 2022)

lrm pve3 (active, Wed Aug 17 16:00:11 2022)

lrm pve4 (wait_for_agent_lock, Wed Aug 17 16:00:09 2022)

service vm:103 (pve3, started)

service vm:117 (pve3, started)

service vm:123 (pve3, started)

service vm:124 (pve, started)

service vm:127 (pve4, starting)

root@pve:~#
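To answer how many HA resources sit on each node before a reboot, the service lines of ha-manager status can simply be tallied. Below is a minimal Python sketch, assuming the line format shown in the output above (service <sid> (<node>, <state>)); the function name services_per_node is hypothetical and not part of any Proxmox tool.

```python
import re
from collections import Counter

# Matches lines such as "service vm:103 (pve3, started)" as printed by
# `ha-manager status` in the output above (format assumed from this thread).
SERVICE_RE = re.compile(r"^service (\S+) \(([^,]+), ([^)]+)\)$")


def services_per_node(status_text: str) -> Counter:
    """Count HA services per node in one `ha-manager status` snapshot."""
    counts = Counter()
    for line in status_text.splitlines():
        match = SERVICE_RE.match(line.strip())
        if match:
            _sid, node, _state = match.groups()
            counts[node] += 1
    return counts


if __name__ == "__main__":
    snapshot = """\
quorum OK
master pve (active, Wed Aug 17 15:58:22 2022)
lrm pve (active, Wed Aug 17 15:58:18 2022)
lrm pve3 (active, Wed Aug 17 15:58:26 2022)
lrm pve4 (idle, Wed Aug 17 15:58:24 2022)
service vm:103 (pve3, started)
service vm:117 (pve3, started)
service vm:123 (pve3, started)
service vm:124 (pve, started)
service vm:127 (pve3, started)
"""
    print(dict(services_per_node(snapshot)))  # {'pve3': 4, 'pve': 1}
```

Running it on a "before" and an "after" snapshot makes it easy to compare the per-node load the HA manager had to work with.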


I also wanted to let you know that even if I distribute the HA VMs evenly across the 3 nodes, the same thing I described before happens when I reboot.

No Fabian, I had changed the real situation. However, even if I currently distribute the VMs evenly, as I wrote earlier, the VMs are migrated between pve and pve4. If pve3 restarts, the VMs almost always migrate to pve4.

But in your example it's clearly visible that VM 127 moves from pve3 (back?) to pve4?

If you want, I can arrange the HA VMs however you like so you can run tests.


It's still really hard to determine what is going on. Please provide the following:

- HA config files, /etc/pve/datacenter.cfg

- ha-manager status before the node reboot

- journalctl -f -u pve-ha-lrm -u pve-ha-crm of all nodes, starting before the reboot

- ha-manager status after the node reboot

Code:

email_from: pve@internal2.lan

ha: shutdown_policy=migrate

keyboard: it

migration: secure,network=192.168.9.34/24

Code:

ha-manager status

quorum OK

master pve (active, Thu Aug 18 11:25:54 2022)

lrm pve (active, Thu Aug 18 11:25:55 2022)

lrm pve3 (active, Thu Aug 18 11:25:56 2022)

lrm pve4 (active, Thu Aug 18 11:26:04 2022)

service vm:103 (pve3, started)

service vm:117 (pve3, started)

service vm:123 (pve3, started)

service vm:124 (pve, started)

service vm:127 (pve4, started)

Code:

Aug 17 15:52:23 pve pve-ha-lrm[440455]: Task 'UPID:pve:0006B889:0121B3EF:62FCF27E:qmigrate:103:root@pam:' still active, waiting

Aug 17 15:52:28 pve pve-ha-lrm[440455]: Task 'UPID:pve:0006B889:0121B3EF:62FCF27E:qmigrate:103:root@pam:' still active, waiting

Aug 17 15:52:33 pve pve-ha-lrm[440455]: Task 'UPID:pve:0006B889:0121B3EF:62FCF27E:qmigrate:103:root@pam:' still active, waiting

Aug 17 15:52:38 pve pve-ha-lrm[440455]: Task 'UPID:pve:0006B889:0121B3EF:62FCF27E:qmigrate:103:root@pam:' still active, waiting

Aug 17 15:52:39 pve pve-ha-lrm[440455]: <root@pam> end task UPID:pve:0006B889:0121B3EF:62FCF27E:qmigrate:103:root@pam: OK

Aug 17 15:52:42 pve pve-ha-crm[2936]: service 'vm:103': state changed from 'migrate' to 'started' (node = pve3)

Aug 17 15:59:42 pve pve-ha-crm[2936]: got crm command: migrate vm:127 pve4

Aug 17 15:59:42 pve pve-ha-crm[2936]: migrate service 'vm:127' to node 'pve4'

Aug 17 15:59:42 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve3, target = pve4)

Aug 17 16:00:12 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'migrate' to 'started' (node = pve4)

After rebooting only pve4, the VM is migrated to pve:

Code:

Aug 18 11:31:14 pve pve-ha-crm[2936]: node 'pve4': state changed from 'online' => 'maintenance'

Aug 18 11:31:14 pve pve-ha-crm[2936]: migrate service 'vm:127' to node 'pve' (running)

Aug 18 11:31:14 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve4, target = pve)

Aug 18 11:31:44 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'migrate' to 'started' (node = pve)

Aug 18 11:33:14 pve pve-ha-crm[2936]: node 'pve4': state changed from 'maintenance' => 'online'

Aug 18 11:33:14 pve pve-ha-crm[2936]: moving service 'vm:127' back to 'pve4', node came back from maintenance.

Aug 18 11:33:14 pve pve-ha-crm[2936]: migrate service 'vm:127' to node 'pve4' (running)

Aug 18 11:33:14 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve, target = pve4)

Aug 18 11:33:15 pve pve-ha-lrm[2090643]: <root@pam> starting task UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:

Aug 18 11:33:20 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:25 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:30 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:35 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Code:

Aug 18 11:33:40 pve pve-ha-lrm[2090643]: <root@pam> end task UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam: OK

Aug 18 11:33:44 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'migrate' to 'started' (node = pve4)

Code:

journalctl -f -u pve-ha-lrm -u pve-ha-crm

-- Journal begins at Thu 2021-07-22 20:18:28 CEST. --

Aug 16 11:21:42 pve3 pve-ha-lrm[570977]: <root@pam> starting task UPID:pve3:0008B664:00847E14:62FB61A6:qmstart:117:root@pam:

Aug 16 11:21:45 pve3 pve-ha-lrm[570977]: <root@pam> end task UPID:pve3:0008B664:00847E14:62FB61A6:qmstart:117:root@pam: OK

Aug 16 11:21:45 pve3 pve-ha-lrm[570977]: service status vm:117 started

Aug 17 15:59:46 pve3 pve-ha-lrm[1234110]: <root@pam> starting task UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:

Aug 17 15:59:51 pve3 pve-ha-lrm[1234110]: Task 'UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:' still active, waiting

Aug 17 15:59:56 pve3 pve-ha-lrm[1234110]: Task 'UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:' still active, waiting

Aug 17 16:00:01 pve3 pve-ha-lrm[1234110]: Task 'UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:' still active, waiting

Aug 17 16:00:06 pve3 pve-ha-lrm[1234110]: Task 'UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:' still active, waiting

Aug 17 16:00:11 pve3 pve-ha-lrm[1234110]: Task 'UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam:' still active, waiting

Aug 17 16:00:11 pve3 pve-ha-lrm[1234110]: <root@pam> end task UPID:pve3:0012D4BF:0121C8FC:62FCF452:qmigrate:127:root@pam: OK

journalctl -f -u pve-ha-lrm -u pve-ha-crm

-- Journal begins at Mon 2022-08-15 19:14:13 CEST. --

Aug 18 11:33:13 pve4 pve-ha-crm[2269]: status change startup => wait_for_quorum

Aug 18 11:33:13 pve4 systemd[1]: Started PVE Cluster HA Resource Manager Daemon.

Aug 18 11:33:13 pve4 systemd[1]: Starting PVE Local HA Resource Manager Daemon...

Aug 18 11:33:14 pve4 pve-ha-lrm[2280]: starting server

Aug 18 11:33:14 pve4 pve-ha-lrm[2280]: status change startup => wait_for_agent_lock

Aug 18 11:33:14 pve4 systemd[1]: Started PVE Local HA Resource Manager Daemon.

Aug 18 11:33:23 pve4 pve-ha-crm[2269]: status change wait_for_quorum => slave

Aug 18 11:33:49 pve4 pve-ha-lrm[2280]: successfully acquired lock 'ha_agent_pve4_lock'

Aug 18 11:33:49 pve4 pve-ha-lrm[2280]: watchdog active

Aug 18 11:33:49 pve4 pve-ha-lrm[2280]: status change wait_for_agent_lock => active

journalctl -f -u pve-ha-lrm -u pve-ha-crm

-- Journal begins at Sat 2021-07-24 16:23:42 CEST. --

Aug 18 11:33:14 pve pve-ha-crm[2936]: moving service 'vm:127' back to 'pve4', node came back from maintenance.

Aug 18 11:33:14 pve pve-ha-crm[2936]: migrate service 'vm:127' to node 'pve4' (running)

Aug 18 11:33:14 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve, target = pve4)

Aug 18 11:33:15 pve pve-ha-lrm[2090643]: <root@pam> starting task UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:

Aug 18 11:33:20 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:25 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:30 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:35 pve pve-ha-lrm[2090643]: Task 'UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam:' still active, waiting

Aug 18 11:33:40 pve pve-ha-lrm[2090643]: <root@pam> end task UPID:pve:001FE694:018DDA1E:62FE075B:qmigrate:127:root@pam: OK

Aug 18 11:33:44 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'migrate' to 'started' (node = pve4)
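The CRM journal lines above record every placement decision as a state change to 'migrate' with a source node and a target. A minimal Python sketch for pulling those decisions out of journalctl output, assuming the exact message wording seen in these logs (it may differ in other pve-ha-manager versions); migrations and Migration are hypothetical names used only for illustration:

```python
import re
from typing import List, NamedTuple

# Matches CRM lines such as (wording assumed from the logs in this thread):
# "service 'vm:127': state changed from 'started' to 'migrate' (node = pve4, target = pve)"
MIGRATE_RE = re.compile(
    r"service '(?P<sid>[^']+)': state changed from '[^']+' to 'migrate' "
    r"\(node = (?P<source>[^,]+), target = (?P<target>[^)]+)\)"
)


class Migration(NamedTuple):
    timestamp: str  # syslog-style prefix, e.g. "Aug 18 11:31:14"
    sid: str
    source: str
    target: str


def migrations(journal_text: str) -> List[Migration]:
    """Extract HA migration decisions from pve-ha-crm journal lines."""
    found = []
    for line in journal_text.splitlines():
        match = MIGRATE_RE.search(line)
        if match:
            # The first 15 characters hold the syslog timestamp.
            found.append(Migration(line[:15], match["sid"], match["source"], match["target"]))
    return found


if __name__ == "__main__":
    log = """\
Aug 18 11:31:14 pve pve-ha-crm[2936]: node 'pve4': state changed from 'online' => 'maintenance'
Aug 18 11:31:14 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve4, target = pve)
Aug 18 11:31:44 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'migrate' to 'started' (node = pve)
Aug 18 11:33:14 pve pve-ha-crm[2936]: service 'vm:127': state changed from 'started' to 'migrate' (node = pve, target = pve4)
"""
    for m in migrations(log):
        print(f"{m.timestamp}  {m.sid}: {m.source} -> {m.target}")
```

Feeding it the CRM journal from the master node over several reboots gives a compact history of which node each guest was sent to.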


First of all, thank you. Now I'll try starting all the VMs and look at the memory and CPU load. Then I'll write back to you.

This is completely expected behaviour: you start off with 3 guests on pve3 and 1 each on pve4 and pve. When you reboot pve4, its guest is migrated to the least-used host (pve), and then back again once pve4 comes up again.

Hi Fabian, sorry, but the translation is not clear.

Note that the distribution is pretty simple (it just looks at the number of HA resources, IIRC).
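The behaviour described above (send a guest to the node running the fewest HA resources, then move it back once its node leaves maintenance) can be modelled in a few lines. This is a simplified Python sketch, not the pve-ha-manager implementation: it assumes no HA groups or node priorities, counts every service as one unit of load, and breaks ties by node name; all function names are hypothetical.

```python
from typing import Dict, List

# Hypothetical, simplified model of the behaviour described in this thread;
# it is NOT the pve-ha-manager source. Assumptions: no HA groups, each service
# counts as 1 unit of load, ties are broken by node name.


def pick_target(services: Dict[str, str], candidates: List[str]) -> str:
    """Return the candidate node currently running the fewest HA services."""
    usage = {node: 0 for node in candidates}
    for node in services.values():
        if node in usage:
            usage[node] += 1
    return min(usage, key=lambda node: (usage[node], node))


def enter_maintenance(services: Dict[str, str], nodes: List[str], node: str) -> Dict[str, str]:
    """Move every service off `node`; return {service: original node}."""
    candidates = [n for n in nodes if n != node]
    origin = {}
    for sid in sorted(services):
        if services[sid] == node:
            origin[sid] = node
            # Usage is recounted on every call, so earlier moves are included.
            services[sid] = pick_target(services, candidates)
    return origin


def leave_maintenance(services: Dict[str, str], origin: Dict[str, str]) -> None:
    """Move services back to the node they were evacuated from."""
    for sid, node in origin.items():
        services[sid] = node


if __name__ == "__main__":
    nodes = ["pve", "pve3", "pve4"]
    # Placement from the ha-manager status taken before the pve4 reboot.
    services = {
        "vm:103": "pve3", "vm:117": "pve3", "vm:123": "pve3",
        "vm:124": "pve", "vm:127": "pve4",
    }
    origin = enter_maintenance(services, nodes, "pve4")
    print(services["vm:127"])  # pve (1 service) beats pve3 (3 services)
    leave_maintenance(services, origin)
    print(services["vm:127"])  # back on pve4
```

Run against the placement taken before the pve4 reboot, the model sends vm:127 to pve rather than pve3 and then back to pve4, which matches the logs above. Because the model recounts after each move, several evacuated services spread over the remaining nodes; whether the real CRM behaves exactly this way when many services move at once is not confirmed by this thread.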