that's a question you'd have to ask nvidia. i'm not sure what their current policy on lts + newer kernels is, but only they can update the older branch of the driver for newer kernels...

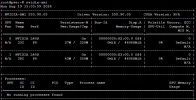

As of v16.6 (or possibly v16.5), the kernel module for the R535 drivers does build correctly against the 6.8 kernels. I was asking specifically about this new way of handling vGPUs. I recently found that booting into the 6.8 kernel with the v16.6 kernel module seems to work, except that no mdevs show up under mdevctl.
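To look at the "no mdevs under mdevctl" symptom directly, one option is to read the sysfs entries of the kernel's VFIO mediated-device framework: parent devices appear under `/sys/class/mdev_bus` (each with an `mdev_supported_types` directory), and created mdevs appear under `/sys/bus/mdev/devices`. The Python below is a minimal sketch assuming those standard mdev sysfs paths; the function name `list_mdev_state` is hypothetical, and `sysfs_root` is a parameter only so the function can be pointed at a test directory.

```python
from pathlib import Path


def list_mdev_state(sysfs_root="/sys"):
    """Summarize mediated-device (mdev) state as exposed in sysfs.

    Hypothetical helper. Returns a dict with:
      "parents": {parent device name: [supported mdev type directory names]}
      "active":  [names (UUIDs) of mdevs that currently exist]
    """
    root = Path(sysfs_root)

    # Parent devices that registered with the mdev framework.
    parents = {}
    mdev_bus = root / "class" / "mdev_bus"
    if mdev_bus.is_dir():
        for parent in sorted(mdev_bus.iterdir()):
            types_dir = parent / "mdev_supported_types"
            types = []
            if types_dir.is_dir():
                types = sorted(t.name for t in types_dir.iterdir())
            parents[parent.name] = types

    # mdevs that have actually been created.
    active = []
    devices = root / "bus" / "mdev" / "devices"
    if devices.is_dir():
        active = sorted(d.name for d in devices.iterdir())

    return {"parents": parents, "active": active}


if __name__ == "__main__":
    print(list_mdev_state())
```

On an affected 6.8 host, an empty `parents` dict would be consistent with "no mdevs show up under mdevctl", but it would not by itself explain why the driver isn't registering any mdev types.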

@AbsolutelyFree: https://docs.nvidia.com/vgpu/index.html - the R470 and R535 branches are still available. I don't know whether they've been updated for 6.8 yet, since Ubuntu LTS is still on 6.5.

It is "safe" to pin 6.5 for a while, depending on your security profile and which features you want from the 6.8 kernel. Not sure whether Canonical backports things into 6.5 for LTS.
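If you do pin 6.5, a small guard can catch an accidental boot into a newer series before you rely on the vGPU module. This is a minimal Python sketch, assuming the usual Ubuntu release string format such as `6.5.0-35-generic`; the helper names are hypothetical, and the pinning itself is still done through your package manager, not by this code.

```python
import platform
import re


def kernel_series(release=None):
    """Return (major, minor) from a kernel release string like '6.5.0-35-generic'.

    Defaults to the running kernel (platform.release(), i.e. `uname -r`).
    """
    if release is None:
        release = platform.release()
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def is_on_pinned_series(pinned=(6, 5), release=None):
    """True if the (running or given) kernel belongs to the pinned series."""
    return kernel_series(release) == pinned


if __name__ == "__main__":
    if not is_on_pinned_series():
        print(f"warning: running {platform.release()}, not the pinned 6.5 series")
```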

In your OP, you said that this new way of handling vGPUs requires the R550 drivers. However, the relevant section of the documentation for the R535 drivers ( https://docs.nvidia.com/vgpu/16.0/pdf/grid-vgpu-user-guide.pdf#page71 ) seems quite similar to the documentation for the R550 drivers, which implies that this new method of vGPU handling works with the R535 drivers as well. There was also a recent v16.7 update, which I haven't had a chance to install yet. AFAIK the R535 drivers are supported until 2026, so I believe 6.8 kernel support is being provided for the R535 drivers as well, but I haven't had time to try this out yet.