Hi I've got the same problem. Very slow restore process, it's take almost 11 hours. Please suggest.

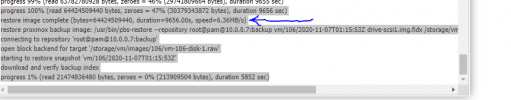

restore vma archive: zstd -q -d -c /var/lib/vz/dump/vzdump-qemu-100-2020_09_21-20_16_08.vma.zst | vma extract -v -r /var/tmp/vzdumptmp5015.fifo - /var/tmp/vzdumptmp5015

CFG: size: 577 name: qemu-server.conf

DEV: dev_id=1 size: 214748364800 devname: drive-scsi0

DEV: dev_id=2 size: 322122547200 devname: drive-scsi1

CTIME: Mon Sep 21 20:16:09 2020

Logical volume "vm-100-disk-0" created.

new volume ID is 'local-lvm:vm-100-disk-0'

Logical volume "vm-100-disk-1" created.

new volume ID is 'local-lvm:vm-100-disk-1'

map 'drive-scsi0' to '/dev/pve/vm-100-disk-0' (write zeros = 0)

map 'drive-scsi1' to '/dev/pve/vm-100-disk-1' (write zeros = 0)

progress 1% (read 5368709120 bytes, duration 589 sec)

progress 2% (read 10737418240 bytes, duration 2100 sec)

progress 3% (read 16106127360 bytes, duration 2761 sec)

progress 4% (read 21474836480 bytes, duration 2811 sec)

progress 5% (read 26843545600 bytes, duration 2811 sec)

progress 6% (read 32212254720 bytes, duration 2812 sec)

progress 7% (read 37580963840 bytes, duration 2812 sec)

progress 8% (read 42949672960 bytes, duration 2833 sec)

progress 9% (read 48318382080 bytes, duration 2949 sec)

progress 10% (read 53687091200 bytes, duration 2949 sec)

progress 11% (read 59055800320 bytes, duration 2984 sec)

progress 12% (read 64424509440 bytes, duration 2984 sec)

progress 13% (read 69793218560 bytes, duration 2984 sec)

progress 14% (read 75161927680 bytes, duration 2984 sec)

progress 15% (read 80530636800 bytes, duration 2985 sec)

progress 16% (read 85899345920 bytes, duration 2985 sec)

progress 17% (read 91268055040 bytes, duration 2985 sec)

progress 18% (read 96636764160 bytes, duration 2985 sec)

progress 19% (read 102005473280 bytes, duration 2986 sec)

progress 20% (read 107374182400 bytes, duration 3473 sec)

progress 21% (read 112742891520 bytes, duration 3674 sec)

progress 22% (read 118111600640 bytes, duration 3675 sec)

progress 23% (read 123480309760 bytes, duration 3675 sec)

progress 24% (read 128849018880 bytes, duration 3675 sec)

progress 25% (read 134217728000 bytes, duration 3927 sec)

progress 26% (read 139586437120 bytes, duration 6700 sec)

progress 27% (read 144955146240 bytes, duration 9380 sec)

progress 28% (read 150323855360 bytes, duration 12137 sec)

progress 29% (read 155692564480 bytes, duration 14945 sec)

progress 30% (read 161061273600 bytes, duration 16850 sec)

progress 31% (read 166429982720 bytes, duration 16850 sec)

progress 32% (read 171798691840 bytes, duration 16850 sec)

progress 33% (read 177167400960 bytes, duration 16994 sec)

progress 34% (read 182536110080 bytes, duration 18629 sec)

progress 35% (read 187904819200 bytes, duration 19253 sec)

progress 36% (read 193273528320 bytes, duration 19390 sec)

progress 37% (read 198642237440 bytes, duration 19662 sec)

progress 38% (read 204010946560 bytes, duration 19817 sec)

progress 39% (read 209379655680 bytes, duration 20304 sec)

progress 40% (read 214748364800 bytes, duration 20476 sec)

progress 41% (read 220117073920 bytes, duration 23254 sec)

progress 42% (read 225485783040 bytes, duration 25749 sec)

progress 43% (read 230854492160 bytes, duration 27863 sec)

progress 44% (read 236223201280 bytes, duration 30329 sec)

progress 45% (read 241591910400 bytes, duration 31937 sec)

progress 46% (read 246960619520 bytes, duration 32278 sec)

progress 47% (read 252329328640 bytes, duration 32696 sec)

progress 48% (read 257698037760 bytes, duration 32696 sec)

progress 49% (read 263066746880 bytes, duration 32696 sec)

progress 50% (read 268435456000 bytes, duration 32704 sec)

progress 51% (read 273804165120 bytes, duration 32749 sec)

progress 52% (read 279172874240 bytes, duration 32749 sec)

progress 53% (read 284541583360 bytes, duration 32750 sec)

progress 54% (read 289910292480 bytes, duration 32750 sec)

progress 55% (read 295279001600 bytes, duration 32750 sec)

progress 56% (read 300647710720 bytes, duration 32750 sec)

progress 57% (read 306016419840 bytes, duration 32751 sec)

progress 58% (read 311385128960 bytes, duration 32751 sec)

progress 59% (read 316753838080 bytes, duration 32824 sec)

progress 60% (read 322122547200 bytes, duration 32863 sec)

progress 61% (read 327491256320 bytes, duration 32908 sec)

progress 62% (read 332859965440 bytes, duration 33216 sec)

progress 63% (read 338228674560 bytes, duration 33354 sec)

progress 64% (read 343597383680 bytes, duration 33375 sec)

progress 65% (read 348966092800 bytes, duration 33376 sec)

progress 66% (read 354334801920 bytes, duration 33376 sec)

progress 67% (read 359703511040 bytes, duration 33376 sec)

progress 68% (read 365072220160 bytes, duration 33376 sec)

progress 69% (read 370440929280 bytes, duration 33377 sec)

progress 70% (read 375809638400 bytes, duration 33429 sec)

progress 71% (read 381178347520 bytes, duration 33894 sec)

progress 72% (read 386547056640 bytes, duration 33911 sec)

progress 73% (read 391915765760 bytes, duration 33917 sec)

progress 74% (read 397284474880 bytes, duration 33917 sec)

progress 75% (read 402653184000 bytes, duration 34058 sec)

progress 76% (read 408021893120 bytes, duration 34090 sec)

progress 77% (read 413390602240 bytes, duration 34090 sec)

progress 78% (read 418759311360 bytes, duration 34091 sec)

progress 79% (read 424128020480 bytes, duration 34091 sec)

progress 80% (read 429496729600 bytes, duration 34091 sec)

progress 81% (read 434865438720 bytes, duration 34091 sec)

progress 82% (read 440234147840 bytes, duration 34091 sec)

progress 83% (read 445602856960 bytes, duration 34092 sec)

progress 84% (read 450971566080 bytes, duration 34092 sec)

progress 85% (read 456340275200 bytes, duration 34092 sec)

progress 86% (read 461708984320 bytes, duration 34093 sec)

progress 87% (read 467077693440 bytes, duration 34129 sec)

progress 88% (read 472446402560 bytes, duration 34130 sec)

progress 89% (read 477815111680 bytes, duration 34130 sec)

progress 90% (read 483183820800 bytes, duration 34130 sec)

progress 91% (read 488552529920 bytes, duration 34290 sec)

progress 92% (read 493921239040 bytes, duration 34295 sec)

progress 93% (read 499289948160 bytes, duration 34295 sec)

progress 94% (read 504658657280 bytes, duration 34295 sec)

progress 95% (read 510027366400 bytes, duration 34296 sec)

progress 96% (read 515396075520 bytes, duration 34296 sec)

progress 97% (read 520764784640 bytes, duration 34319 sec)

progress 98% (read 526133493760 bytes, duration 34319 sec)

progress 99% (read 531502202880 bytes, duration 34319 sec)

progress 100% (read 536870912000 bytes, duration 34319 sec)

total bytes read 536870912000, sparse bytes 468128428032 (87.2%)

space reduction due to 4K zero blocks 1.33%

rescan volumes...

TASK OK