Hi guys,

A few days ago, I ran a backup import onto the HDD pool on a server that also has an SSD pool.

After a few minutes, all guest VMs (they run only on the SSD pool) started reporting problems with hung tasks etc., and the services on them stopped working.

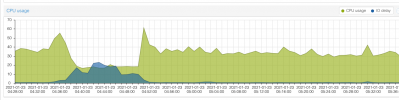

The host had high IOWait and low CPU usage.

I canceled the import and after a few minutes, everything went back to normal.

(Graphs, which I can attach later, show VM read/write speeds of 40 to 80 Gigabytes/s during that time.)

Most of the writing was done by some [vma] process.

Outside of this incident, the storage system is never under any real load, and iowait is under 1 most of the time.

To be frank, there is an NVMe device shared as a special mirror for the HDDs and as slog for the SSDs, but it is plenty fast.

The source of the backup can do 100 - 200 Mbps over a 10Gb line.
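To put the source rate in perspective, here is a quick conversion sketch. It assumes "Mbps" and "10Gb" mean megabits and gigabits per second; if megabytes were meant, the source figures would be 8 times higher. The helper name `mbit_to_mbyte` is just for illustration.

```python
def mbit_to_mbyte(mbit_per_s):
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbit_per_s / 8


# Assumption: the backup source rate is given in megabits per second.
source_low = mbit_to_mbyte(100)
source_high = mbit_to_mbyte(200)

# Assumption: the 10Gb line is 10 gigabits per second = 10,000 megabits per second.
link_max = mbit_to_mbyte(10_000)

print(f"source: {source_low} to {source_high} MB/s, link ceiling: {link_max} MB/s")
```

Under those assumptions, the incoming backup stream is at most a few tens of MB/s, which is far below what the graphs report for the VMs.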

The disks are on SATA ports on a Supermicro board.

Why would I experience a slowdown of all SSD-backed VMs when using the HDD pool?

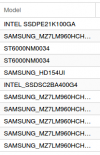

Code:

      pool: hddpool
     state: ONLINE
      scan: scrub repaired 0B in 0 days 01:46:41 with 0 errors on Sun Jan 10 02:10:42 2021
    remove: Removal of vdev 2 copied 180K in 0h0m, completed on Mon Oct 26 23:46:17 2020
            192 memory used for removed device mappings
    config:

        NAME                                                     STATE     READ WRITE CKSUM
        hddpool                                                  ONLINE       0     0     0
          mirror-0                                               ONLINE       0     0     0
            scsi-35000c5008455beff                               ONLINE       0     0     0
            scsi-35000c50084560403                               ONLINE       0     0     0
        special
          mirror-3                                               ONLINE       0     0     0
            nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part4    ONLINE       0     0     0
            ata-INTEL_SSDSC2BA400G4_BTHV513606WT400NGN-part1     ONLINE       0     0     0
        logs
          nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part2      ONLINE       0     0     0

    errors: No known data errors

      pool: rpool
     state: ONLINE
      scan: scrub repaired 0B in 0 days 01:42:59 with 0 errors on Sun Jan 10 02:07:02 2021
    config:

        NAME                                                     STATE     READ WRITE CKSUM
        rpool                                                    ONLINE       0     0     0
          mirror-0                                               ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324668-part3  ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324365-part3  ONLINE       0     0     0
          mirror-1                                               ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324419        ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324096        ONLINE       0     0     0
          mirror-2                                               ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324415        ONLINE       0     0     0
            ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324414        ONLINE       0     0     0
        logs
          nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part1      ONLINE       0     0     0

    errors: No known data errors
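
To make the sharing visible, here is a minimal sketch that groups the device names from the pool listing above by physical disk and reports any disk used by more than one pool. The names are copied by hand from the output; the helpers `physical_device` and `shared_devices` are hypothetical, and the sketch assumes that a trailing `-partN` suffix is the only thing distinguishing partitions of the same disk.

```python
import re
from collections import defaultdict

# Member devices per pool, copied from the zpool status output above.
POOL_DEVICES = {
    "hddpool": [
        "scsi-35000c5008455beff",
        "scsi-35000c50084560403",
        "nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part4",  # special
        "ata-INTEL_SSDSC2BA400G4_BTHV513606WT400NGN-part1",   # special
        "nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part2",  # logs
    ],
    "rpool": [
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324668-part3",
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324365-part3",
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324419",
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324096",
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324415",
        "ata-SAMSUNG_MZ7LM960HCHP-000MV_S2LBNXAH324414",
        "nvme-INTEL_SSDPE21K100GA_PHKE831500DJ100EGN-part1",  # logs
    ],
}


def physical_device(name):
    """Strip a trailing -partN suffix to get the whole-disk identifier."""
    return re.sub(r"-part\d+$", "", name)


def shared_devices(pool_devices):
    """Map each physical disk used by more than one pool to the sorted pool names."""
    users = defaultdict(set)
    for pool, devices in pool_devices.items():
        for dev in devices:
            users[physical_device(dev)].add(pool)
    return {dev: sorted(pools) for dev, pools in users.items() if len(pools) > 1}


for dev, pools in shared_devices(POOL_DEVICES).items():
    print(f"{dev} is used by: {', '.join(pools)}")
```

With the listing above, the only disk that shows up in both pools is the Intel NVMe device (partitions 1, 2 and 4), so it is the one piece of hardware that both the HDD pool and the SSD pool write to.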