URGENT: Local Storage Full Without Any Reason

- Thread starter an0n_anzerone



Hi Fabian,

thanks for the reply.

Output of mount:

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,relatime)

udev on /dev type devtmpfs (rw,nosuid,relatime,size=263985620k,nr_inodes=65996405,mode=755)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,noexec,relatime,size=52802496k,mode=755)

/dev/mapper/pve-root on / type ext4 (rw,relatime,errors=remount-ro,stripe=64)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k)

tmpfs on /sys/fs/cgroup type tmpfs (ro,nosuid,nodev,noexec,mode=755)

cgroup2 on /sys/fs/cgroup/unified type cgroup2 (rw,nosuid,nodev,noexec,relatime)

cgroup on /sys/fs/cgroup/systemd type cgroup (rw,nosuid,nodev,noexec,relatime,xattr,name=systemd)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

cgroup on /sys/fs/cgroup/rdma type cgroup (rw,nosuid,nodev,noexec,relatime,rdma)

cgroup on /sys/fs/cgroup/memory type cgroup (rw,nosuid,nodev,noexec,relatime,memory)

cgroup on /sys/fs/cgroup/hugetlb type cgroup (rw,nosuid,nodev,noexec,relatime,hugetlb)

cgroup on /sys/fs/cgroup/devices type cgroup (rw,nosuid,nodev,noexec,relatime,devices)

cgroup on /sys/fs/cgroup/pids type cgroup (rw,nosuid,nodev,noexec,relatime,pids)

cgroup on /sys/fs/cgroup/perf_event type cgroup (rw,nosuid,nodev,noexec,relatime,perf_event)

cgroup on /sys/fs/cgroup/cpuset type cgroup (rw,nosuid,nodev,noexec,relatime,cpuset)

cgroup on /sys/fs/cgroup/freezer type cgroup (rw,nosuid,nodev,noexec,relatime,freezer)

cgroup on /sys/fs/cgroup/net_cls,net_prio type cgroup (rw,nosuid,nodev,noexec,relatime,net_cls,net_prio)

cgroup on /sys/fs/cgroup/blkio type cgroup (rw,nosuid,nodev,noexec,relatime,blkio)

cgroup on /sys/fs/cgroup/cpu,cpuacct type cgroup (rw,nosuid,nodev,noexec,relatime,cpu,cpuacct)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=33,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=58608)

mqueue on /dev/mqueue type mqueue (rw,relatime)

debugfs on /sys/kernel/debug type debugfs (rw,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,relatime)

configfs on /sys/kernel/config type configfs (rw,relatime)

replication on /replication type zfs (rw,xattr,noacl)

replication/subvol-125-disk-0 on /replication/subvol-125-disk-0 type zfs (rw,xattr,posixacl)

replication/basevol-108-disk-0 on /replication/basevol-108-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-103-disk-0 on /replication/subvol-103-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-101-disk-0 on /replication/subvol-101-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-113-disk-0 on /replication/subvol-113-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-105-disk-0 on /replication/subvol-105-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-106-disk-0 on /replication/subvol-106-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-116-disk-0 on /replication/subvol-116-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-104-disk-0 on /replication/subvol-104-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-127-disk-0 on /replication/subvol-127-disk-0 type zfs (rw,xattr,posixacl)

lxcfs on /var/lib/lxcfs type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,relatime)

/dev/fuse on /etc/pve type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other)

replication/subvol-135-disk-0 on /replication/subvol-135-disk-0 type zfs (rw,xattr,posixacl)

replication/subvol-136-disk-0 on /replication/subvol-136-disk-0 type zfs (rw,xattr,posixacl)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=52802492k,mode=700)

Output of df -h:

Filesystem Size Used Avail Use% Mounted on

udev 252G 0 252G 0% /dev

tmpfs 51G 4.1G 47G 9% /run

/dev/mapper/pve-root 55G 53G 0 100% /

tmpfs 252G 54M 252G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 252G 0 252G 0% /sys/fs/cgroup

replication 4.6T 256K 4.6T 1% /replication

replication/subvol-125-disk-0 16G 1.5G 15G 10% /replication/subvol-125-disk-0

replication/basevol-108-disk-0 16G 964M 16G 6% /replication/basevol-108-disk-0

replication/subvol-103-disk-0 8.0G 4.6G 3.5G 57% /replication/subvol-103-disk-0

replication/subvol-101-disk-0 8.0G 1.2G 6.9G 15% /replication/subvol-101-disk-0

replication/subvol-113-disk-0 16G 4.6G 12G 29% /replication/subvol-113-disk-0

replication/subvol-105-disk-0 32G 12G 21G 35% /replication/subvol-105-disk-0

replication/subvol-106-disk-0 16G 3.2G 13G 20% /replication/subvol-106-disk-0

replication/subvol-116-disk-0 16G 3.1G 13G 20% /replication/subvol-116-disk-0

replication/subvol-104-disk-0 512G 101G 412G 20% /replication/subvol-104-disk-0

replication/subvol-127-disk-0 32G 2.0G 31G 7% /replication/subvol-127-disk-0

/dev/fuse 30M 60K 30M 1% /etc/pve

replication/subvol-135-disk-0 16G 3.0G 14G 19% /replication/subvol-135-disk-0

replication/subvol-136-disk-0 16G 2.3G 14G 15% /replication/subvol-136-disk-0

tmpfs 51G 0 51G 0% /run/user/0

Output of

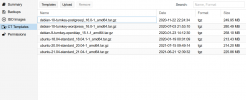

du -sm /var/lib/vz/*:

1 /var/lib/vz/dump

1 /var/lib/vz/images

6962 /var/lib/vz/template

Output of /etc/pve/storage.cfg:

dir: local

path /var/lib/vz

content vztmpl,backup,iso

shared 0

lvmthin: local-lvm

thinpool data

vgname pve

content rootdir,images

zfspool: replication

pool replication

content rootdir,images

mountpoint /replication

sparse 0

dir: backup

path /backup

content backup

prune-backups keep-weekly=100

shared 1

Same problem here. I don't use local for backups, ISOs or anything else, and nothing shows in local from the UI; the output of du -sm /var/lib/vz/* is 1, 1 and 1 for dump, images and template. Yet 94G of the 100G in local is used. I use pve-data for backups and everything else, and that has 1.2T out of 1.7T free.

Wondering if there was a response to the earlier post.


Based on your screenshots, it doesn't look like you have much in /var/lib/vz on that local partition.

However, your output of df -h shows you have 100% use on your root partition... anything that hits 100% usage is usually a problem.

Did data get stored somewhere outside of /var/lib/vz that could be filling up the disk? /home, /root, /mnt?

Please provide the information from my last reply (and if you want to get a head start on tracking down the usage, use du -sm /* to find out where the space is going, repeat the command for the directories taking up lots of space, and report back what you find).
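The drill-down suggested above can be scripted roughly as follows. This is a minimal sketch, not a Proxmox tool: biggest_dirs is a hypothetical helper name, and it adds -x so that each du stays on the filesystem its argument lives on, which keeps a share or dataset mounted further down (for example under /mnt/pve) from being added to its parent's total.

```shell
#!/bin/sh
# Hypothetical helper: print the largest entries directly below DIR, in MiB,
# sorted ascending so the biggest ones end up at the bottom.
# Usage: biggest_dirs [DIR] [COUNT]   (defaults: / and 10)
biggest_dirs() {
    # Strip one trailing slash so "/" (or no argument) expands to "/*".
    dir=${1%/}
    count=${2:-10}
    # -s: one total per entry, -m: MiB, -x: stay on the entry's own filesystem.
    # Errors (vanished /proc entries, unreadable paths) are discarded.
    # Note: the * glob does not match hidden dot-entries.
    du -xsm "$dir"/* 2>/dev/null | sort -n | tail -n "$count"
}
```

For example, run biggest_dirs / 15 first, then biggest_dirs on whichever directory tops the list (say /var, then /var/lib), and so on. Hidden dot-entries are not matched by the glob, so check those separately if the numbers don't add up.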

Output of df -h:

Filesystem Size Used Avail Use% Mounted on

tmpfs 51G 4.1G 47G 9% /run

tmpfs 252G 54M 252G 1% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 252G 0 252G 0% /sys/fs/cgroup

tmpfs 51G 0 51G 0% /run/user/0

These tmpfs mounts reside on local and their sizes are quite large. They are temporary mounts whose contents are volatile until reboot. I would try a reboot and see whether these temporary mounts go away and free up the space. I had this happen when I was transferring a large virtual disk to the wrong path: when that path's root filesystem became full, it started moving data to local. After I deleted the .partial file of the original transfer, a reboot cleared the mounts and freed up that space.
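Before rebooting, it may be worth checking how much the tmpfs mounts actually hold. tmpfs keeps its files in RAM (and swap), and its Size column is only the configured upper limit, so a large Size does not by itself mean disk space is taken. The two standard GNU df calls below show whether the usage sits in the tmpfs mounts or on / itself.

```shell
#!/bin/sh
# List only tmpfs mounts: "Size" is the configured limit, "Used" is what the
# files currently occupy in RAM/swap.
df -h -t tmpfs

# The root filesystem on its own, for comparison.
df -h /
```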


I guess my question, following on from this post, is how to prevent writes to the local directory. I don't need anything other than the Proxmox OS files on it, as I have a ZFS pool for everything else.

I have the same issue, but I've noticed that it's only when the VM is Windows based. None of my Linux VM's cause this issue. I moved the Windows VM to different hosts and the problem followed it. I noticed there is another kernel update today and I'm currently installing it, so once I get machines migrated and the hosts upgraded I am going to fire the Windows VM up and see if the issue persists. If this kernel upgrade fixes it I will post back.Hello,

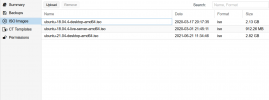

My local storage is full without any proper reason. It does not have so much data on it. Can anyone help me with it?

Is anyone else seeing this issue on non-Windows VM's?

Hi,I have the same issue, but I've noticed that it's only when the VM is Windows based. None of my Linux VM's cause this issue. I moved the Windows VM to different hosts and the problem followed it. I noticed there is another kernel update today and I'm currently installing it, so once I get machines migrated and the hosts upgraded I am going to fire the Windows VM up and see if the issue persists. If this kernel upgrade fixes it I will post back.

Is anyone else seeing this issue on non-Windows VM's?

I can fully agree. The problem occurred yesterday after a kernel update on my second/test machine. I moved the Windows VMs to the main Proxmox host, which has PVE 5.13.19-7 installed. I just installed today's kernel update, but the problem still remains on my test host.

Any idea? Here's the output.

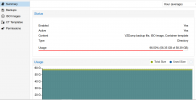

root@proxmox01:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 32G 0 32G 0% /dev

tmpfs 6.3G 1.8M 6.3G 1% /run

rpool/ROOT/pve-1 422G 386G 37G 92% / <--why?

tmpfs 32G 34M 32G 1% /dev/shm

tmpfs 5.0M 4.0K 5.0M 1% /run/lock

rpool 37G 128K 37G 1% /rpool

rpool/ROOT 37G 128K 37G 1% /rpool/ROOT

rpool/data 37G 128K 37G 1% /rpool/data

r1-tosh-3tb 1.6T 128K 1.6T 1% /r1-tosh-3tb

r1-tosh-3tb/data 1.6T 128K 1.6T 1% /r1-tosh-3tb/data

r1-tosh-3tb/vm 1.6T 128K 1.6T 1% /r1-tosh-3tb/vm

r1-tosh-3tb/vm/subvol-112-disk-0 2.0G 677M 1.4G 34% /r1-tosh-3tb/vm/subvol-112-disk-0

/dev/fuse 128M 24K 128M 1% /etc/pve

//nas-backup.stock.local/Proxmox 7.2T 6.6T 638G 92% /mnt/pve/nas-backup-cifs

tmpfs 6.3G 0 6.3G 0% /run/user/0

root@proxmox01:~# mount

sysfs on /sys type sysfs (rw,nosuid,nodev,noexec,relatime)

proc on /proc type proc (rw,relatime)

udev on /dev type devtmpfs (rw,nosuid,relatime,size=32859632k,nr_inodes=8214908,mode=755,inode64)

devpts on /dev/pts type devpts (rw,nosuid,noexec,relatime,gid=5,mode=620,ptmxmode=000)

tmpfs on /run type tmpfs (rw,nosuid,nodev,noexec,relatime,size=6577976k,mode=755,inode64)

rpool/ROOT/pve-1 on / type zfs (rw,relatime,xattr,noacl)

securityfs on /sys/kernel/security type securityfs (rw,nosuid,nodev,noexec,relatime)

tmpfs on /dev/shm type tmpfs (rw,nosuid,nodev,inode64)

tmpfs on /run/lock type tmpfs (rw,nosuid,nodev,noexec,relatime,size=5120k,inode64)

cgroup2 on /sys/fs/cgroup type cgroup2 (rw,nosuid,nodev,noexec,relatime)

pstore on /sys/fs/pstore type pstore (rw,nosuid,nodev,noexec,relatime)

none on /sys/fs/bpf type bpf (rw,nosuid,nodev,noexec,relatime,mode=700)

systemd-1 on /proc/sys/fs/binfmt_misc type autofs (rw,relatime,fd=30,pgrp=1,timeout=0,minproto=5,maxproto=5,direct,pipe_ino=25016)

mqueue on /dev/mqueue type mqueue (rw,nosuid,nodev,noexec,relatime)

hugetlbfs on /dev/hugepages type hugetlbfs (rw,relatime,pagesize=2M)

debugfs on /sys/kernel/debug type debugfs (rw,nosuid,nodev,noexec,relatime)

tracefs on /sys/kernel/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

fusectl on /sys/fs/fuse/connections type fusectl (rw,nosuid,nodev,noexec,relatime)

configfs on /sys/kernel/config type configfs (rw,nosuid,nodev,noexec,relatime)

sunrpc on /run/rpc_pipefs type rpc_pipefs (rw,relatime)

rpool on /rpool type zfs (rw,noatime,xattr,noacl)

rpool/ROOT on /rpool/ROOT type zfs (rw,noatime,xattr,noacl)

rpool/data on /rpool/data type zfs (rw,noatime,xattr,noacl)

r1-tosh-3tb on /r1-tosh-3tb type zfs (rw,noatime,xattr,noacl)

r1-tosh-3tb/data on /r1-tosh-3tb/data type zfs (rw,noatime,xattr,noacl)

r1-tosh-3tb/vm on /r1-tosh-3tb/vm type zfs (rw,noatime,xattr,noacl)

r1-tosh-3tb/vm/subvol-112-disk-0 on /r1-tosh-3tb/vm/subvol-112-disk-0 type zfs (rw,noatime,xattr,posixacl)

lxcfs on /var/lib/lxcfs type fuse.lxcfs (rw,nosuid,nodev,relatime,user_id=0,group_id=0,allow_other)

/dev/fuse on /etc/pve type fuse (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other)

//nas-backup.stock.local/Proxmox on /mnt/pve/nas-backup-cifs type cifs (rw,relatime,vers=3.1.1,cache=strict,username=admin,uid=0,noforceuid,gid=0,noforcegid,addr=10.83.84.102,file_mode=0755,dir_mode=0755,soft,nounix,serverino,mapposix,rsize=4194304,wsize=4194304,bsize=1048576,echo_interval=60,actimeo=1)

tmpfs on /run/user/0 type tmpfs (rw,nosuid,nodev,relatime,size=6577972k,nr_inodes=1644493,mode=700,inode64)

binfmt_misc on /proc/sys/fs/binfmt_misc type binfmt_misc (rw,nosuid,nodev,noexec,relatime)

tracefs on /sys/kernel/debug/tracing type tracefs (rw,nosuid,nodev,noexec,relatime)

root@proxmox01:~# du -sm /var/lib/vz/*

1 /var/lib/vz/dump

1 /var/lib/vz/images

1 /var/lib/vz/private

1 /var/lib/vz/snippets

1 /var/lib/vz/template

It's / that is full, so just looking in /var/lib/vz might not give you the answer. I'd look at things like /var/cache and /var/log next, and if those are okay, start at /* and go from there.

Sorry, my fault, but I already checked "/" too. I'm unable to find the 300+ GB of lost space.


Output:

root@proxmox01:~# du -sm /*

10 /bin

196 /boot

34 /dev

5 /etc

1 /home

620 /lib

1 /lib64

1 /media

2124909 /mnt

1 /opt

du: cannot read directory '/proc/15701/task/15701/net': Invalid argument

du: cannot read directory '/proc/15701/net': Invalid argument

du: cannot access '/proc/396751/task/396751/fd/4': No such file or directory

du: cannot access '/proc/396751/task/396751/fdinfo/4': No such file or directory

du: cannot access '/proc/396751/fd/3': No such file or directory

du: cannot access '/proc/396751/fdinfo/3': No such file or directory

0 /proc

688 /r1-tosh-3tb

1 /root

1 /rpool

2 /run

10 /sbin

1 /srv

0 /sys

1 /tbw_send.sh

1 /tmp

1114 /usr

du: cannot access '/var/lib/lxcfs/cgroup': Input/output error

392857 /var

Maybe it's a ZFS-related problem?
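One way to rule ZFS in or out is to ask ZFS itself where the space is accounted. This sketch assumes the pool is called rpool, as in the df output above. zfs list -o space splits each dataset's usage into live data (USEDDS), snapshots (USEDSNAP), child datasets and reservations. Space held only by snapshots is invisible to du, so a large USEDSNAP would explain a gap between du and df, while a USEDDS close to the du total points back at ordinary files.

```shell
#!/bin/sh
# Assumption: the pool is named "rpool" (as on proxmox01); pass another name
# as the first argument if yours differs.
pool=${1:-rpool}

if ! command -v zfs >/dev/null 2>&1; then
    echo "zfs not found; run this on the Proxmox host itself" >&2
else
    # Per-dataset breakdown: USEDDS = live data, USEDSNAP = held by snapshots,
    # USEDCHILD = child datasets, USEDREFRESERV = reservations.
    zfs list -o space -r "$pool"

    # Snapshots sorted by the space only they hold, largest last.
    zfs list -t snapshot -o name,used -s used -r "$pool"
fi
```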


It's possible the data got written while other mountpoints were not mounted, and it is now hidden by the mounted filesystems/datasets. But in your du output, /var looks rather big, and there are no additional mountpoints below /var in your mount output, so I'd continue looking there first.
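Besides repeating du on the subdirectories of /var, it can help to look for individual oversized files, since a single runaway log or leftover image often accounts for most of the space. big_files is a hypothetical helper built on find; -xdev keeps it from descending into other mounted filesystems.

```shell
#!/bin/sh
# Hypothetical helper: list regular files bigger than MIN_SIZE below DIR,
# without descending into other mounted filesystems (-xdev).
# Usage: big_files [DIR] [MIN_SIZE]   (defaults: /var and +1G)
big_files() {
    # find rounds sizes up to whole units, so "+1G" matches files over 1 GiB.
    find "${1:-/var}" -xdev -type f -size "${2:-+1G}" -exec ls -lh {} + 2>/dev/null
}
```

For example, big_files /var +1G. The systemd journal, normally under /var/log/journal, reports its own size with journalctl --disk-usage, and journalctl --vacuum-size=1G (the size is only an example) trims it if it turns out to be the culprit.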

I had the same symptoms, but in my case it was files disguised as one of my mounts.

Clearly there must have been a time when my NAS drives were unavailable, maybe during a firmware update or something, and Proxmox continued to write files to the directory, but on the local disk instead, using the same file paths.

I unmounted my NAS drives, rebooted Proxmox, and then I could see a directory in /mnt/pve that looked like my NAS drives but wasn't.

I wasn't too precious about the files inside, so I simply deleted the directories, and my local storage usage dropped by about 100GB.
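Unmounting and rebooting works, but the files hidden underneath a mountpoint can also be inspected while everything stays mounted, by bind-mounting / somewhere else and looking through that view. This is a sketch assuming root access; du_under_mounts and the /mnt/rootview scratch directory are hypothetical names chosen for illustration.

```shell
#!/bin/sh
# Hypothetical scratch directory for the bind-mounted view of /.
VIEW=${VIEW:-/mnt/rootview}

# Usage (as root): du_under_mounts [SUBPATH]   (default: /mnt/pve)
# Shows, per entry, how much the root filesystem itself stores below
# SUBPATH, including files normally hidden by mounts on top of it.
du_under_mounts() {
    sub=${1:-/mnt/pve}
    mkdir -p "$VIEW" || return 1
    # A plain --bind (unlike --rbind) does not bring submounts along, so the
    # view contains only what is physically on the root filesystem.
    if ! mount --bind / "$VIEW"; then
        rmdir "$VIEW"
        return 1
    fi
    du -sm "$VIEW$sub"/* 2>/dev/null | sort -n
    umount "$VIEW"
    rmdir "$VIEW"
}
```

For example, du_under_mounts /mnt/pve lists, per directory, what was written to the local disk under /mnt/pve while the shares were not mounted.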
