Hey,

Over the weekend I brought our 2nd server online so that I could redo the first one.

I moved all VMs/CTs to the 2nd server and deleted the first, since everything seemed fine.

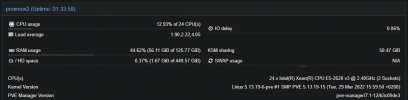

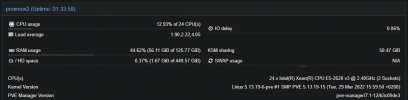

However, as soon as even the slightest IO operation occurs (e.g. opening a browser on a server), the IO delay skyrockets to 90+%. The same happens, of course, when backups are created. It goes back to 0% when the systems are idle.

I really need some idea of what could be causing this: once the IO delay reaches 30+%, websites become unavailable and other services slow to a crawl!

The only real difference between the two servers is that the first one booted via iSCSI rather than from a local disk, and that it didn't use multipath to connect to the main storage (I think).
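In case it helps to reproduce the numbers outside the GUI: below is a minimal sketch that computes the iowait share from two `/proc/stat` samples. It assumes the IO delay graph is derived from the kernel's iowait counter, which I have not verified, and the function names `iowait_pct` and `sample_iowait` are made up for this post.

```shell
#!/bin/sh
# iowait_pct: percentage of CPU time spent in iowait between two aggregate
# "cpu" lines from /proc/stat (assumption: this is what "IO delay" reflects).
# Columns after the "cpu" label: user nice system idle iowait irq softirq steal
iowait_pct() {
    printf '%s\n%s\n' "$1" "$2" | LC_ALL=C awk '
        {
            total[NR] = 0
            # Sum user..steal only; guest time is already included in user.
            for (i = 2; i <= NF && i <= 9; i++) total[NR] += $i
            iow[NR] = $6
        }
        END {
            dt = total[2] - total[1]
            if (dt <= 0) { print "0.0"; exit }
            printf "%.1f\n", 100 * (iow[2] - iow[1]) / dt
        }'
}

# sample_iowait: take two samples N seconds apart (default 5) on the live host.
sample_iowait() {
    first=$(grep '^cpu ' /proc/stat)
    sleep "${1:-5}"
    second=$(grep '^cpu ' /proc/stat)
    iowait_pct "$first" "$second"
}
```

Running `sample_iowait 10` on the host while opening a browser inside a VM should show whether the host-side iowait tracks what the graph reports.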

Configuration:

Hard drives:

- Boot drive: 2x SATA NAS SSDs in RAIDZ1 (nothing besides some ISOs and the OS is stored here)
- VM/CT storage: Fujitsu Eternus DX100 via 2x 10 Gbit/s fiber over iSCSI (multipathed, which I think could be the problem), 3 TB RAID6, LVM-Thin
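To check whether both paths actually carry I/O, the `multipath -ll` output posted further down can be condensed to one line per path. This is a minimal sketch, assuming the output layout matches what my host prints (other multipath-tools versions may format it differently); `summarize_paths` is just a name I picked.

```shell
#!/bin/sh
# summarize_paths: read `multipath -ll` text on stdin and print one line per
# path in the form: <map> prio=<group prio> <group status> <device>
summarize_paths() {
    LC_ALL=C awk '
        # Map header line: alias, WWID in parentheses, dm-N, vendor and product.
        /^[^ |`]/ && / dm-[0-9]+ / { map = $1; next }
        # Path group line: remember its priority and status.
        /policy=/ {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^prio=/)   prio = substr($i, 6)
                if ($i ~ /^status=/) status = substr($i, 8)
            }
            next
        }
        # Path line: the field after the H:C:T:L tuple is the block device.
        /[0-9]+:[0-9]+:[0-9]+:[0-9]+/ {
            for (i = 1; i < NF; i++)
                if ($i ~ /^[0-9]+:[0-9]+:[0-9]+:[0-9]+$/)
                    print map, "prio=" prio, status, $(i + 1)
        }'
}
```

With `multipath -ll | summarize_paths`, each LUN here shows one path in an `active` group and one in an `enabled` group. As far as I understand, only the `active` group is used for I/O while the `enabled` group is standby, which would mean the two links are not load-balanced.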

Code:

    defaults {
        user_friendly_names yes
        polling_interval 2
        path_selector "round-robin 0"
        path_grouping_policy multibus
        path_checker readsector0
        rr_min_io 100
        rr_weight priorities
        failback immediate
        no_path_retry queue
    }

    blacklist {
        devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
        devnode "^hd[a-z][[0-9]*]"
        wwid ".*"
    }

    blacklist_exceptions {
        wwid "3600000e00d28000000281cc800000000"
        wwid "3600000e00d28000000281cc800010000"
    }

    multipaths {
        multipath {
            # id retrieved with the utility /lib/udev/scsi_id
            wwid 3600000e00d28000000281cc800000000
            alias pm2_main_mpath
        }
        multipath {
            wwid 3600000e00d28000000281cc800010000
            alias pm2_ssd_mpath #nothing is on here yet
        }
        # Default from multipath -t
        device {
            vendor "FUJITSU"
            product "ETERNUS_DX(H|L|M|400|8000)"
            path_grouping_policy "group_by_prio"
            prio "alua"
            failback "immediate"
            no_path_retry 10
        }

multipath -ll

Code:

    pm2_main_mpath (3600000e00d28000000281cc800000000) dm-1 FUJITSU,ETERNUS_DXL
    size=3.2T features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    |-+- policy='round-robin 0' prio=50 status=active
    | `- 12:0:0:0 sde 8:64 active ready running
    `-+- policy='round-robin 0' prio=10 status=enabled
      `- 11:0:0:0 sdd 8:48 active ready running
    pm2_ssd_mpath (3600000e00d28000000281cc800010000) dm-0 FUJITSU,ETERNUS_DXL
    size=366G features='1 queue_if_no_path' hwhandler='1 alua' wp=rw
    |-+- policy='round-robin 0' prio=50 status=active
    | `- 11:0:0:1 sdf 8:80 active ready running
    `-+- policy='round-robin 0' prio=10 status=enabled
      `- 12:0:0:1 sdg 8:96 active ready running

LVM creation:

Code:

    pvcreate /dev/mapper/pm2_main_mpath
    vgcreate VMs_PM2 /dev/mapper/pm2_main_mpath
    lvcreate -L 3.5T --thinpool main_thinpl_pm2 VMs_PM2

Standard VM config: (most of the VMs are the same)

Code:

    agent: 1
    bios: ovmf
    boot: order=scsi0
    cores: 10
    cpu: host,flags=+aes
    efidisk0: Thin_2:vm-101-disk-0,efitype=4m,format=raw,pre-enrolled-keys=1,size=528K
    machine: pc-i440fx-6.1
    memory: 16384
    meta: creation-qemu=6.1.0,ctime=1640785552
    name: exchange
    net0: virtio=36:B1:CE:95:59:E3,bridge=vmbr0
    numa: 1
    onboot: 1
    ostype: win10
    scsi0: Thin_2:vm-101-disk-1,cache=writeback,discard=on,format=raw,size=700G
    scsihw: virtio-scsi-pci
    smbios1: uuid=8672338d-9c84-4758-a99c-1ef44c798e4b
    sockets: 1
    startup: order=2
    vga: qxl
    vmgenid: 30360784-fb3d-462d-b814-e3fb088992d1
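Since most VMs share this layout, a quick way to compare disk cache settings across them is to pull the `cache=` option out of each config. This is a minimal sketch, assuming the configs live under `/etc/pve/qemu-server/` (the usual location on Proxmox VE); `disk_cache_modes` is a hypothetical helper name.

```shell
#!/bin/sh
# disk_cache_modes: read a VM config on stdin and print "<disk key> <cache mode>"
# for every scsi/virtio/sata/ide disk line. Disks without an explicit cache=
# option are reported as "default".
disk_cache_modes() {
    LC_ALL=C awk -F': ' '
        $1 ~ /^(scsi|virtio|sata|ide)[0-9]+$/ {
            mode = "default"
            n = split($2, opts, ",")
            for (i = 1; i <= n; i++)
                if (opts[i] ~ /^cache=/) mode = substr(opts[i], 7)
            print $1, mode
        }'
}
```

For example, `for f in /etc/pve/qemu-server/*.conf; do echo "$f"; disk_cache_modes < "$f"; done` lists every disk with its cache mode. For the config above it prints `scsi0 writeback`.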
