"Unfortunately, the attempt did not work. The moment I change the virtual disk from virtio0 to scsi0, the server runs into a Blue Screen with 'inaccessible disk'."

Yeah, the PCI/bus routing changes, and that does mess up an already installed Windows. You should install on virtio-scsi from the get-go, otherwise you'll have that fun.

[SOLVED] Unexplained Storage Growth in Windows VM on ZFS.

- Thread starter taumeister

- Start date


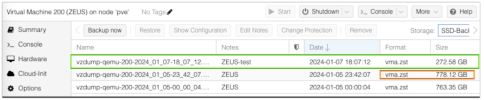

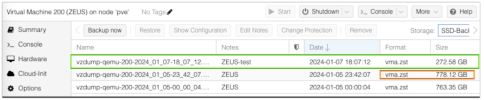

I'm reaching out with an issue I've recently encountered regarding a Windows VM running on ZFS storage. I've noticed that this VM is progressively using more storage space. This became apparent particularly during my daily full backups, where I observed the VM's storage consumption increased by over 100 GB within ~8 weeks.

Interestingly, the VM itself doesn't show any increase in storage usage. In fact, I have deliberately deleted some data, yet both the backup volume and the ZFS store volume continue to grow. This phenomenon is baffling to me.

I read about the Discard option in the VM disk settings in the forum. I've implemented this setting and restarted both the VM and the host, but there has been no noticeable change.

Running zpool trim made no difference either.

Other VMs do not show this phenomenon.

All VMs are stored on a raidz1 pool of 4 NVMe drives.

The guest runs Windows Server 2019.

- Used Space in VM is ~300GB

- Used Space in ZFS is ~700GB (Pictures)

I just saw the email about the successful backup of the VMs, and it's quite evident there. The backup size has increased by over 14GB within two days, but no one is working in the office, no jobs are running, and the internal consumption of the VM remains the same... I'm starting to get a bit worried.

This makes it sound like you might have some snapshots still hanging around of that disk/image.

Please provide the output of `zfs list -t all -r tank | grep -i vm-200-disk-3`. Yes, it may be long, but it would have the info to confirm or deny my suspicions.

a) ALWAYS enable compression on ZFS: at an absolute minimum zle, but lz4 is fast enough in most cases (unless you need more storage, in which case zstd is the better choice and gzip the "more trusted" one). ZFS only stores zeroed blocks as sparse when compression is enabled.

b) The virtio block device can't do TRIM. Yeah, it's a bug IMHO, but the consensus is that the virtio-scsi driver does everything as fast as needed and supports discard. But install it that way from the beginning; don't change it later on a Windows system (Linux systems are more forgiving and I've done it there, but with Windows it has always meant a format c: and reinstall).

c) To "emulate" TRIM once you've ensured compression is enabled for the disk image, I've used the equivalent of `dd if=/dev/zero of=c:/file/bigzero count=##### bs=1024k`, where you substitute ##### with the amount of free space in megabytes that you need to "trim"/zero.
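For anyone without a dd port inside the guest, the zero-fill idea from point c) can be sketched in Python. This is only a minimal illustration: the function name `zero_fill` and the 1 MiB chunk size (mirroring `bs=1024k`) are my own choices, and the demo writes to a temporary file rather than a real volume.

```python
import os
import tempfile

CHUNK = 1024 * 1024  # 1 MiB per write, mirroring bs=1024k in the dd command


def zero_fill(path: str, megabytes: int) -> int:
    """Write `megabytes` MiB of zeros to `path` and return the bytes written."""
    zeros = bytes(CHUNK)  # a buffer of CHUNK zero bytes
    written = 0
    with open(path, "wb") as f:
        for _ in range(megabytes):
            f.write(zeros)
            written += CHUNK
        f.flush()
        os.fsync(f.fileno())  # make sure the zeros actually reach the disk
    return written


if __name__ == "__main__":
    # Demo on a small temporary file. On a real guest you would point this at
    # the volume to be zeroed and delete the file again afterwards.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "bigzero")
        print(zero_fill(target, 4), "bytes of zeros written")
```

As with the dd variant, leave some free space on the volume while the file exists, and remove the file once it has been written.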

Hi hvisage,

thanks for your answer.

There is no snapshot hanging around.


Code:

root@pve:~# echo . && zfs list -t all | grep -i 'vm-200-disk'

.

tank/vm-200-disk-0 533K 1.90T 533K -

tank/vm-200-disk-2 90.6K 1.90T 90.6K -

tank/vm-200-disk-3 687G 1.90T 687G -

The thing is, I did not have this problem before; it started roughly 8 weeks ago.
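The snapshot check can also be done mechanically: in `zfs list -t all` output, snapshot names contain an `@`. Here is a small sketch that filters pasted output for snapshots of a given disk. The helper name `snapshot_names` is made up for illustration; the sample is the output from this thread.

```python
from typing import List


def snapshot_names(zfs_list_output: str, needle: str) -> List[str]:
    """Return snapshot names (entries containing '@') whose name matches needle."""
    names = []
    for line in zfs_list_output.splitlines():
        fields = line.split()
        if not fields:
            continue  # skip blank lines and the lone '.' is harmless too
        name = fields[0]
        if "@" in name and needle.lower() in name.lower():
            names.append(name)
    return names


# Output as posted above: three zvols, no snapshots.
SAMPLE = """\
tank/vm-200-disk-0 533K 1.90T 533K -
tank/vm-200-disk-2 90.6K 1.90T 90.6K -
tank/vm-200-disk-3 687G 1.90T 687G -
"""

print(snapshot_names(SAMPLE, "vm-200-disk"))  # []
```

An empty list agrees with the `usedbysnapshots 0B` value that `zfs get all` reports for this volume.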

If you get a 1 back from the command `fsutil behavior query DisableDeleteNotify`, then set it to 0 with `fsutil behavior set DisableDeleteNotify 0`.

Then you could try `defrag /o` and/or `Optimize-Volume -DriveLetter C -ReTrim -Verbose`.

You could then use `sdelete.exe -z c:` to see if it helps.

=> https://learn.microsoft.com/en-us/sysinternals/downloads/sdelete

You could also try moving the image to another storage and then back to the original one. During import, the image is usually optimized accordingly, which could reduce its size again. I think there is a very good chance that this will work, and with the other optimizations you shouldn't find yourself in such a situation again.

fsutil was set to 0 from the beginning.

`defrag /L` is the retrim command; I have run it twice.

I could move the vDisk to another storage, but the only space for this is my QNAP SMB storage.

I could do this offline. Do you think that would be enough, or at least OK?

So, no snapshots, which leaves the other possibility: the disk layout might be a problem for some reason, perhaps related to ashift and volume settings.

Could you post the output of `zfs get all tank/vm-200-disk-3` and `zpool get all tank`?

Thank you for taking the time, but I find it hard to believe. This was all set up by Proxmox itself through the installer, and I trust that it chooses the best layout. I've been using it for so many years.

But...

Code:

zfs get all tank/vm-200-disk-3

NAME PROPERTY VALUE SOURCE

tank/vm-200-disk-3 type volume -

tank/vm-200-disk-3 creation Sat Dec 2 15:40 2023 -

tank/vm-200-disk-3 used 687G -

tank/vm-200-disk-3 available 1.92T -

tank/vm-200-disk-3 referenced 687G -

tank/vm-200-disk-3 compressratio 1.13x -

tank/vm-200-disk-3 reservation none default

tank/vm-200-disk-3 volsize 1.51T local

tank/vm-200-disk-3 volblocksize 16K default

tank/vm-200-disk-3 checksum on default

tank/vm-200-disk-3 compression on inherited from tank

tank/vm-200-disk-3 readonly off default

tank/vm-200-disk-3 createtxg 4995597 -

tank/vm-200-disk-3 copies 1 default

tank/vm-200-disk-3 refreservation none default

tank/vm-200-disk-3 guid 11572191364924524656 -

tank/vm-200-disk-3 primarycache all default

tank/vm-200-disk-3 secondarycache all default

tank/vm-200-disk-3 usedbysnapshots 0B -

tank/vm-200-disk-3 usedbydataset 687G -

tank/vm-200-disk-3 usedbychildren 0B -

tank/vm-200-disk-3 usedbyrefreservation 0B -

tank/vm-200-disk-3 logbias latency default

tank/vm-200-disk-3 objsetid 159 -

tank/vm-200-disk-3 dedup off default

tank/vm-200-disk-3 mlslabel none default

tank/vm-200-disk-3 sync standard default

tank/vm-200-disk-3 refcompressratio 1.13x -

tank/vm-200-disk-3 written 687G -

tank/vm-200-disk-3 logicalused 746G -

tank/vm-200-disk-3 logicalreferenced 746G -

tank/vm-200-disk-3 volmode default default

tank/vm-200-disk-3 snapshot_limit none default

tank/vm-200-disk-3 snapshot_count none default

tank/vm-200-disk-3 snapdev hidden default

tank/vm-200-disk-3 context none default

tank/vm-200-disk-3 fscontext none default

tank/vm-200-disk-3 defcontext none default

tank/vm-200-disk-3 rootcontext none default

tank/vm-200-disk-3 redundant_metadata all default

tank/vm-200-disk-3 encryption off default

tank/vm-200-disk-3 keylocation none default

tank/vm-200-disk-3 keyformat none default

tank/vm-200-disk-3 pbkdf2iters 0 default

tank/vm-200-disk-3 snapshots_changed Sun Dec 24 13:27:39 2023 -

Code:

tank size 5.45T -

tank capacity 44% -

tank altroot - default

tank health ONLINE -

tank guid 4489099193939180288 -

tank version - default

tank bootfs - default

tank delegation on default

tank autoreplace off default

tank cachefile - default

tank failmode wait default

tank listsnapshots off default

tank autoexpand off default

tank dedupratio 1.00x -

tank free 3.05T -

tank allocated 2.41T -

tank readonly off -

tank ashift 12 local

tank comment - default

tank expandsize - -

tank freeing 0 -

tank fragmentation 16% -

tank leaked 0 -

tank multihost off default

tank checkpoint - -

tank load_guid 5989190827490250731 -

tank autotrim off default

tank compatibility off default

tank bcloneused 0 -

tank bclonesaved 0 -

tank bcloneratio 1.00x -

tank feature@async_destroy enabled local

tank feature@empty_bpobj active local

tank feature@lz4_compress active local

tank feature@multi_vdev_crash_dump enabled local

tank feature@spacemap_histogram active local

tank feature@enabled_txg active local

tank feature@hole_birth active local

tank feature@extensible_dataset active local

tank feature@embedded_data active local

tank feature@bookmarks enabled local

tank feature@filesystem_limits enabled local

tank feature@large_blocks enabled local

tank feature@large_dnode enabled local

tank feature@sha512 enabled local

tank feature@skein enabled local

tank feature@edonr enabled local

tank feature@userobj_accounting active local

tank feature@encryption enabled local

tank feature@project_quota active local

tank feature@device_removal enabled local

tank feature@obsolete_counts enabled local

tank feature@zpool_checkpoint enabled local

tank feature@spacemap_v2 active local

tank feature@allocation_classes enabled local

tank feature@resilver_defer enabled local

tank feature@bookmark_v2 enabled local

tank feature@redaction_bookmarks enabled local

tank feature@redacted_datasets enabled local

tank feature@bookmark_written enabled local

tank feature@log_spacemap active local

tank feature@livelist enabled local

tank feature@device_rebuild enabled local

tank feature@zstd_compress enabled local

tank feature@draid enabled local

tank feature@zilsaxattr disabled local

tank feature@head_errlog disabled local

tank feature@blake3 disabled local

tank feature@block_cloning disabled local

tank feature@vdev_zaps_v2 disabled local


The command that I believe is actually *needed* is this one:

You could then use `sdelete.exe -z c:` to see if it helps.

Reason: what is needed is the writing of ZEROES, not just a TRIM command. The values (especially the 1.13x compressratio) indicate that there is still data in the "empty/deleted" portions of the VM's disk (as happens after a defrag like the one above), and the layers in between (like the virtio block device) don't support TRIM commands.
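To put a rough number on that, compare the `logicalused` value from the `zfs get` output (746G) with the roughly 300 GB the guest reports as used. A minimal sketch follows; the helper names are invented, and it treats the guest's "GB" and ZFS's "G" as the same unit, which is only approximately true.

```python
UNITS = {"B": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def parse_size(text: str) -> float:
    """Convert a ZFS human-readable size such as '687G' or '90.6K' to bytes."""
    text = text.strip()
    return float(text[:-1]) * UNITS[text[-1].upper()]


def stale_estimate_gib(logicalused: str, guest_used: str) -> float:
    """Logical data the zvol still holds beyond what the guest counts as used."""
    return (parse_size(logicalused) - parse_size(guest_used)) / UNITS["G"]


# 746G logicalused (from zfs get) versus ~300G used inside Windows.
print(round(stale_estimate_gib("746G", "300G")))  # 446
```

So on the order of 446G of the zvol's logical content is data the guest already considers free, which is what zeroing or a working TRIM would have to release.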

That said, what cluster size are you using inside Windows (NTFS etc.)? It should be >= 16K (the volblocksize of the volume).

"This was all set up by Proxmox itself through the installer,"

...which is known not to necessarily choose the best options for the underlying hardware, just the most commonly expected values, so you need to know that yourself and check/tune accordingly. Especially for newer SSDs/NVMe drives you want ashift=13 (not 12 as in your case, which is good for 4K drives), otherwise you have read-modify-write (RMW) latency on the storage that you might not even know about.
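The two size relationships mentioned here can be illustrated with a little arithmetic: ashift is the base-2 logarithm of the sector size ZFS assumes, and a write that does not cover whole blocks forces a read-modify-write. This is a simplified sketch with made-up helper names; it ignores caching, compression and raidz layout entirely.

```python
def sector_size(ashift: int) -> int:
    """ashift is the base-2 logarithm of the assumed sector size in bytes."""
    return 1 << ashift


def needs_rmw(offset: int, length: int, blocksize: int) -> bool:
    """True if a write does not cover whole blocks of `blocksize` bytes."""
    return offset % blocksize != 0 or length % blocksize != 0


VOLBLOCKSIZE = 16 * 1024  # volblocksize of tank/vm-200-disk-3

print(sector_size(12), sector_size(13))          # 4096 8192
print(needs_rmw(0, 4096, VOLBLOCKSIZE))          # True: 4K cluster in a 16K block
print(needs_rmw(0, 16 * 1024, VOLBLOCKSIZE))     # False: whole block written
```

This is the reasoning behind asking for an NTFS cluster size of at least the volblocksize: smaller guest writes only touch part of a 16K block.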

Hm, I didn't know that at all. Maybe I'll simply do a last backup, delete the pool, re-create it, and restore my backup. What do you think?

Currently, I am moving the vDisk to another SSD storage and then back again.


What I'd want to do in your situation (having had my share of fun with virtio block devices without TRIM support in the past, and having had to do something similar):

Attach a virtio-scsi device (with SSD emulation and Discard/TRIM enabled on the device) to the Windows VM, copy the files from the current Windows drives, and swap the drives around.

Moving the vDisk around will not help, as I suspect the problem is the data inside the vDisk, not the ZFS side (having eliminated the problems there).

So, as a second-prize "quicker win":

sdelete -z c:

So where are we now:

I have performed all possible trim commands both in the virtual machine and on the ZFS pool, which led to no improvement.

I moved the vDisk to another storage and back again, but that didn't help either.

We can probably assume that there is no problem on the ZFS side.

I ran sdelete on volume C:, which took 1-2 hours.

On drive D:, for some reason the percentage display stayed at 0%, and I aborted after 4 hours.

I think I'll look for a window next week, maybe Friday evening to Sunday evening, hoping that it will go through.

If all this doesn't help, I might even have to plan to set up the server again, unfortunately, there is a lawyer software with an SQL database involved, and that probably also means a migration from the manufacturer's side, I would have to plan that more precisely.

I could consider installing Veeam Backup, creating a backup, and then restoring it. Veeam only copies used blocks.

But honestly, I don't know if that solves the problem in this particular case, because unreleased blocks (that's our theory) will probably also be restored from the backup. I'm not sure about that, but yes, I could test this beforehand.


Consider shutting down all apps, like the SQL server, and retrying `sdelete -z`.

As for the "software with an SQL database involved": things like SQL could be the cause of lots of file writes, which will "consume" storage if the freed blocks aren't trimmed or zeroed.

ok I will try this.

One question remains.

The virtual disk I assigned to my Windows guest is 1550GB.

When I run sdelete, the free space is written with zeros, so will the space used by my thin-provisioned VM grow up to 1550GB?


Since new information has emerged, I will no longer be exploring and testing additional options. We need to set up the Windows Server anew anyway (separating SQL Server and file server, and upgrading from 2019 to 2022), and during this process I'll create a new pool and reinstall the Windows VMs with your new insights.

Greetings

Thomas


In the meantime, I've been experimenting a bit and stumbled upon a solution, or at least one solution.

I intended to clone the single large disk with various volumes into new, smaller virtual disks.

For preparation, I used Windows' defragmentation program.

I am aware that defragmentation isn't particularly useful on SSDs, but while defragmenting each volume, I briefly noticed a 'trim volume...' operation.

Surprisingly, the virtual disk in the ZFS datastore has now shrunk from 770GB to just 270GB, resulting in significantly smaller backup sizes, too.

I just wanted to let you know...


So, I've consolidated the old server and transferred the tasks to others. I've decommissioned the old server. The solution, however, was to defragment the disk and, for the future (as I've done now), to set up the vDisks properly. Bye.