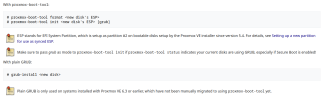

Given that Broadcom is essentially abandoning small businesses, I decided to explore Proxmox for our growing data center.

As a start, I ran a simple test on a Mac Mini that already has ESXi installed, loading Proxmox onto a second drive so the machine can boot either hypervisor.

Both ESXi and Proxmox run from their own Samsung 870 EVO SATA SSD.

I imported a VM (an Ubuntu 20.04 server) from ESXi into Proxmox and ran the same realistic load test on it under each hypervisor.

The test is fairly database intensive. The results are as follows:

ESXi: 16 seconds

Proxmox: 112 seconds

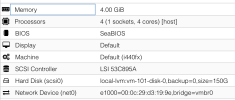

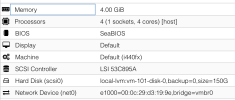

Configuration:

I've tried various caching and controller options with no measurable change.

The ESXi VM has the same amount of resources assigned to it.

Any thoughts on why this would be so slow? In this state it isn't useful.
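Since the load test is database heavy, one way to narrow this down might be to check whether the gap comes from synchronous write latency rather than CPU. Below is a minimal sketch, assuming the workload is dominated by small write+fsync calls (as many databases are); it is not the load test itself. The function name `fsync_latency` and its parameters are hypothetical. It could be run inside the same guest on both hypervisors and the averages compared.

```python
import os
import tempfile
import time


def fsync_latency(num_writes: int = 1000, block_size: int = 4096, directory: str = ".") -> float:
    """Return the average seconds per (write + fsync) of a block_size chunk.

    Hypothetical helper: assumes the database workload is bound by
    synchronous small writes, which is an assumption, not a measurement.
    """
    payload = os.urandom(block_size)
    # Create the temp file on the VM's disk (default: current directory),
    # not in /tmp, which may be a RAM-backed tmpfs on some systems.
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        for _ in range(num_writes):
            os.write(fd, payload)
            os.fsync(fd)  # force each write through to stable storage
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
        os.remove(path)
    return elapsed / num_writes


if __name__ == "__main__":
    avg = fsync_latency()
    print(f"average write+fsync latency: {avg * 1000:.3f} ms")
```

If the per-write latency under Proxmox is several times higher than under ESXi, that would point toward the virtual disk's cache/controller settings or the host's storage path rather than CPU or memory.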