Thanks, I'll take a look at this later. Have to head off to do some other stuff just now....

Hmmm. I kind of wonder what'd happen if you reinitialised your boot partition using the instructions here?

...

Ubuntu 20 VM Much slower than in ESXi

- Thread starter GuyInCorner

Can you run a small 7zip single-thread benchmark within your Ubuntu VM on ESXi, then on PVE?

7zz b -mmt1

Not familiar with 7zz. Looking it up, it looks like a zip utility.

I spun up a new VM to test, rather than using one imported from ESXi, and performed the same test.

Same results. Seems as though Proxmox is significantly slower than ESXi on the same hardware.

I'm going to abandon this path at least for now.

From some discussion about this in a Discord chat a while ago, the difference is most likely ESXi not applying cpu mitigations to VMs by default, whereas Proxmox does. If the cpu mitigations are applied in ESXi, then apparently it has the same "slowdown" effect.

Turning off mitigations did help, but still didn't come close as per reply #17 of this thread so I don't think it's just that.

I recently set up KVM on a server too. A VM there was taking about 2 times as long to do the same task as on ESXi, but I gather that's a tuning issue. It sounds like there's a ton of things to tune to get the correct performance out of KVM.

Any idea if it was cpu bound, io bound, or something else?

Also, did you use the VirtIO drivers for everything?

I tried many drivers including VirtIO. Never figured it out. Finally gave up and concluded that Proxmox is just plain slower than ESXi. Too slow for my uses unfortunately. Proxmox has a lot of great features I'd like to use, and ESXi licensing isn't very friendly for small companies now that it's part of Broadcom.

Again, do you remember if it was cpu bound, io bound, or something else?

No idea. My standard test is a task that is regularly performed by my server. It reads a lot of data from a database, processes it and puts the results back. The task itself is typically cpu limited when using an SSD drive and a reasonable bus, because it's single threaded. I use it as a benchmark test and know how long it should take on different machines.

The fact that it was taking 4 times longer on the same machine says that something was wrong. Maybe there was more than one limitation. Seems like it was cpu but I don't know for sure. I should have been watching the CPU load to see. Normally one CPU is just about pegged from the task and another has a bit of a load from servicing the DB.
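For anyone wanting to answer the cpu bound vs io bound question on a future run, here is a minimal sketch that samples the aggregate `cpu` line of `/proc/stat` inside the guest before and after the workload. The function names are made up for illustration, and it assumes a Linux guest with the usual field order (user, nice, system, idle, iowait, irq, softirq, steal).

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat on Linux.
FIELDS = ["user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal"]

def parse_cpu_line(line):
    """Parse the first line of /proc/stat into a dict of jiffy counters."""
    parts = line.split()
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    return dict(zip(FIELDS, values))

def cpu_breakdown(before, after):
    """Return the percentage of elapsed CPU time spent in each state."""
    deltas = {name: after[name] - before[name] for name in before}
    total = sum(deltas.values()) or 1  # avoid dividing by zero
    return {name: 100.0 * delta / total for name, delta in deltas.items()}

def sample_breakdown(interval_s=5.0, path="/proc/stat"):
    """Sample /proc/stat twice, interval_s apart, and return the breakdown."""
    def read():
        with open(path) as f:
            return parse_cpu_line(f.readline())
    before = read()
    time.sleep(interval_s)  # the workload should be running in this window
    after = read()
    return cpu_breakdown(before, after)
```

Calling `sample_breakdown(10)` while the test is running gives a rough picture: a high `iowait` share points towards storage, a high `user`/`system` share towards cpu, and a noticeable `steal` share suggests the hypervisor is not giving the guest all the CPU time it asks for. The numbers are averaged over all cores, so a single pegged thread on a many-core VM will look small.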

Ahhh well. Yeah it's unfortunate the system isn't still around for looking into this in further detail.

It's possible there was something happening in the system to cause io (or other) problems, which would then lead to this "multiple time slower" problem. What you're describing sounds (to me) like the kind of thing I'd expect from an io problem (ie wrong storage or network driver).

If you do ever want to get around to investigating this in depth please let me know. You'll need a bunch of patience though as it'd be a case of running the test a bunch of times with various settings to figure out exactly wtf is going wrong and how to fix it.

(I'm actually super time limited myself at the moment, but hopefully that'll improve in the new year)
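As a rough sketch of the "run the test a bunch of times with various settings" idea, something like the following could wrap whatever the real task is. `time_runs` and `summarize` are hypothetical helper names, and `task` stands in for the actual workload, which isn't shown in this thread.

```python
import statistics
import time

def time_runs(task, runs=5):
    """Call task() `runs` times and return each wall-clock duration in ms."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        durations.append((time.perf_counter() - start) * 1000.0)
    return durations

def summarize(durations_ms):
    """Return the mean and sample standard deviation of the durations."""
    mean = statistics.mean(durations_ms)
    # stdev needs at least two samples; report 0.0 for a single run.
    stdev = statistics.stdev(durations_ms) if len(durations_ms) > 1 else 0.0
    return {"mean_ms": mean, "stdev_ms": stdev, "runs": len(durations_ms)}
```

Recording the standard deviation alongside the average for each setting (cache mode, CPU type, disk bus and so on) makes it easier to tell a real difference from run-to-run noise.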

I have another server that I've just commissioned but it isn't actually needed until the new year. Maybe next week I'll have time to throw another drive in and load up Proxmox for another test.

I took some time this week and did some more testing.

The test I'm doing isn't some random benchmark. This is a real world task that's performed on our servers frequently. It fetches about 13k records from a database, processes the data, and inserts about 4k records as a result. The database and queries have all been optimized. During this process two threads each vary from 20-50% of a CPU. This exercises CPU speed and I/O throughput.

First I loaded Linux onto the Mac mini where I'd previously been testing Proxmox. The test results there were dismal. Something about that mini will not run Linux very quickly. ESXi (BusyBox) runs great but Linux and Proxmox are slow. Not sure why, so I just abandoned that test mule.

Next I loaded up a server that is currently idle and performed the same tests in Ubuntu 24.04 running on ESXi, Proxmox, and bare metal. Here are the results:

Ubuntu - bare metal

Average Test completion time 9198 ms (baseline)

ESXi

Average Test completion time 9710 ms (5.6% slower than Ubuntu on bare metal which is reasonable)

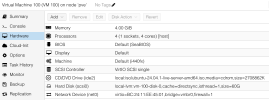

Proxmox - (everything virtio, cpu host, see attached image of VM)

Average Test completion time 11641 ms (26.6% slower than Ubuntu on bare metal which seems slow)
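The relative slowdowns can be recomputed from the three averages above. This is just the arithmetic written out, with `overhead_percent` being a made-up helper name; it also makes it easy to compare Proxmox against ESXi directly rather than only against bare metal.

```python
def overhead_percent(baseline_ms, measured_ms):
    """Percentage by which measured_ms exceeds baseline_ms."""
    return (measured_ms - baseline_ms) / baseline_ms * 100.0

# Average completion times reported in this post, in milliseconds.
results_ms = {"bare metal": 9198, "ESXi": 9710, "Proxmox": 11641}

baseline = results_ms["bare metal"]
for name, ms in results_ms.items():
    print(f"{name:10s} {ms:6d} ms  {overhead_percent(baseline, ms):+5.1f}% vs bare metal")

# Proxmox measured against ESXi instead of bare metal.
vs_esxi = overhead_percent(results_ms["ESXi"], results_ms["Proxmox"])
print(f"Proxmox vs ESXi: {vs_esxi:+.1f}%")
```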

@GuyInCorner Awesome, thanks for getting around to this. Would you also be ok to screenshot the "Options" for the VM as well, as it's probably easier to do that rather than a bunch of back and forth questions to get the info.

With that host box, and the VM running on it, were any kind of cpu mitigations enabled?

If not, what's the approach you used for disabling them and verifying they're disabled? Asking because this thread has a decent chance of becoming solid reference information if we can get a fairly complete set of answers and drill into testing things out to figure what's going wrong.
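One way to verify mitigation state, sketched below, is to read the files the Linux kernel exposes under `/sys/devices/system/cpu/vulnerabilities` (the same data `lscpu` summarises) and to check the kernel command line for `mitigations=off`. The helper names are hypothetical, and this only shows what the running kernel reports; it says nothing about what the hypervisor does underneath a guest.

```python
from pathlib import Path

def read_vulnerabilities(base="/sys/devices/system/cpu/vulnerabilities"):
    """Return {name: status} for each file in the vulnerabilities directory."""
    statuses = {}
    for entry in sorted(Path(base).iterdir()):
        if entry.is_file():
            statuses[entry.name] = entry.read_text().strip()
    return statuses

def still_mitigated(statuses):
    """Names whose status line still reports an active mitigation."""
    return [name for name, status in statuses.items()
            if status.startswith("Mitigation")]

def mitigations_off_requested(cmdline):
    """True if the kernel command line string contains mitigations=off."""
    return "mitigations=off" in cmdline.split()
```

On a real system, `mitigations_off_requested(Path("/proc/cmdline").read_text())` shows whether the switch was passed at boot, and `still_mitigated(read_vulnerabilities())` lists anything the kernel still reports as mitigated. It needs to be run on the host and inside the VM separately, since the two kernels are configured independently.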

afaik, you need to set cache=none in PVE to be closer to baremetal and esxi

A good point to bring up, but depending upon what the VM is doing it can vary. I've tried all caching options and found 'direct sync' to be fastest.

'none' was actually 21% slower.

This round of testing is being done on a Dell R730 with 2 x E5-2630 v3 @ 2.40GHz

Drives are SSD RAID 1 mirrors (which, interestingly, allow things to run slightly faster than RAID 3 or RAID 10)

Attached are the VM options

I haven't changed any mitigation settings. Here are the vulnerabilities listed from lscpu:

r730 - PVE - Host

Code:

Vulnerabilities:

Gather data sampling: Not affected

Itlb multihit: KVM: Mitigation: Split huge pages

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT vulnerable

Reg file data sampling: Not affected

Retbleed: Not affected

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines; IBPB conditional; IBRS_FW; STIBP conditional; RSB filling; PBRSB-eIBRS Not affected; BHI Not affected

Srbds: Not affected

Tsx async abort: Not affected

r730 - PVE - Ubuntu VM

Code:

Vulnerabilities:

Gather data sampling: Not affected

Itlb multihit: Not affected

L1tf: Mitigation; PTE Inversion; VMX flush not necessary, SMT disabled

Mds: Mitigation; Clear CPU buffers; SMT Host state unknown

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT Host state unknown

Reg file data sampling: Not affected

Retbleed: Not affected

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines; IBPB conditional; IBRS_FW; STIBP disabled; RSB filling; PBRSB-eIBRS Not affected; BHI Retpoline

Srbds: Not affected

Tsx async abort: Not affected

r730 - Ubuntu - bare metal

Code:

Vulnerabilities:

Gather data sampling: Not affected

Itlb multihit: KVM: Mitigation: VMX disabled

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT vulnerable

Reg file data sampling: Not affected

Retbleed: Not affected

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines; IBPB conditional; IBRS_FW; STIBP conditional; RSB filling; PBRSB-eIBRS Not affected; BHI Not affected

Srbds: Not affected

Tsx async abort: Not affected

r730 - ESXi - Ubuntu VM

Code:

Vulnerabilities:

Gather data sampling: Not affected

Itlb multihit: KVM: Mitigation: VMX unsupported

L1tf: Mitigation; PTE Inversion

Mds: Mitigation; Clear CPU buffers; SMT Host state unknown

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT Host state unknown

Reg file data sampling: Not affected

Retbleed: Mitigation; IBRS

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; IBRS; IBPB conditional; STIBP disabled; RSB filling; PBRSB-eIBRS Not affected; BHI SW loop, KVM SW loop

Srbds: Not affected

Tsx async abort: Not affected
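Since the four listings are long and mostly identical, a small sketch like this can pull out only the entries that differ between two of them. It assumes each listing has been saved as text in the "Name: status" form shown above; `parse_vulnerabilities` and `diff_listings` are made-up names.

```python
def parse_vulnerabilities(text):
    """Parse 'Name: status' lines from an lscpu Vulnerabilities listing."""
    result = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # blank lines and titles such as "r730 - PVE - Host"
        name, status = line.split(":", 1)
        if not status.strip():
            continue  # section labels such as "Vulnerabilities:" or "Code:"
        result[name.strip()] = status.strip()
    return result

def diff_listings(a, b):
    """Return {name: (status_a, status_b)} for entries that differ."""
    names = sorted(set(a) | set(b))
    return {n: (a.get(n), b.get(n)) for n in names if a.get(n) != b.get(n)}

# Short excerpt from the two guest listings in this post.
pve_vm = parse_vulnerabilities("""Vulnerabilities:
Meltdown: Mitigation; PTI
Retbleed: Not affected
""")
esxi_vm = parse_vulnerabilities("""Vulnerabilities:
Meltdown: Mitigation; PTI
Retbleed: Mitigation; IBRS
""")
for name, (pve, esxi) in diff_listings(pve_vm, esxi_vm).items():
    print(f"{name}: PVE guest = {pve!r}, ESXi guest = {esxi!r}")
```

Feeding it the full PVE guest and ESXi guest listings would narrow the comparison down to the handful of lines where the two guests report different mitigations, which is a reasonable place to start looking. Whether any of those differences explains the timing gap is a separate question that only testing can answer.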