When I first installed Proxmox on a server with 2 x 2TB SATA disks in RAID1, I went with the defaults, but it now looks like I need to change a few things. The system is already running a few LXC containers in production, though.

For one, I need a bigger swap partition, but I don't know much about LVM. Any pointers, or keywords to search for besides "resize LVM"?

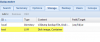

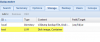

The next issue is that my storage is added as "local". I just noticed that I could also add the same storage (using the volume group pve) as LVM, and I was wondering what the difference is. See screenshot.

Also, given a default configuration with 2 HDs, would it have been advisable to skip the hardware RAID and run ZFS instead?

Code:

    # lsblk /dev/sda
    NAME         MAJ:MIN RM    SIZE RO TYPE MOUNTPOINT
    sda            8:0    0    1.8T  0 disk
    ├─sda1         8:1    0 1004.5K  0 part
    ├─sda2         8:2    0   19.5G  0 part /
    ├─sda3         8:3    0   1023M  0 part [SWAP]
    └─sda4         8:4    0    1.8T  0 part
      └─pve-data 252:0    0    1.8T  0 lvm  /var/lib/vz
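
Looking at that output again, swap sits on the plain partition sda3 rather than on an LVM volume, so as far as I can tell an LVM resize alone would not grow it. One alternative I have come across is adding a swap file on top of the existing swap. Below is a rough, untested sketch of what I think that would look like. The path `/var/lib/vz/swapfile`, the 4 GiB size, and the `run`/`add_swapfile` helper names are my own assumptions, and the script only prints the commands unless `DRY_RUN=0` is set:

```shell
#!/bin/sh
# Sketch: add a swap file instead of resizing the sda3 swap partition.
# SWAPFILE and SIZE_MB are assumed values, not taken from the running system.
SWAPFILE="${SWAPFILE:-/var/lib/vz/swapfile}"
SIZE_MB="${SIZE_MB:-4096}"
DRY_RUN="${DRY_RUN:-1}"   # default: only print the commands, change nothing

run() {
    # Print the command; execute it only when DRY_RUN=0.
    echo "+ $*"
    if [ "$DRY_RUN" = "0" ]; then
        "$@"
    fi
}

add_swapfile() {
    # Allocate the file with dd (avoids sparse files, which swapon rejects).
    run dd if=/dev/zero of="$SWAPFILE" bs=1M count="$SIZE_MB"
    # Swap files should not be readable by other users.
    run chmod 600 "$SWAPFILE"
    # Write the swap signature, then enable the file as additional swap.
    run mkswap "$SWAPFILE"
    run swapon "$SWAPFILE"
}

add_swapfile
```

If this is a sane approach, I assume the file would also need an entry in `/etc/fstab` to survive a reboot, and that the command would have to be run as root with `DRY_RUN=0`. Corrections welcome.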
