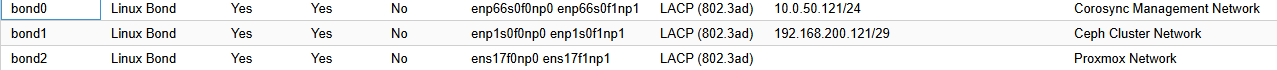

After our new cluster deployment had been running well, we hit the same problem twice within two weeks: the entire cluster rebooted unexpectedly. It reported no errors and did not fence off the node that appeared to be causing the trouble. The logs all point to the watchdog timer expiring on all three nodes, which forced a simultaneous reboot of the whole cluster. We're puzzled about why this happens, because there are no other relevant entries in the system logs of nodes 1–3. I have attached the logs for pve2; those for the other two nodes are virtually identical. On the networking side, the switch ports showed no loss of connection. Each node is uplinked via LACP (2 × 10 Gbps links) into a dedicated untagged VLAN.
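For context on why a network problem could take down all three nodes at once, here is a minimal sketch of the simple-majority quorum rule. It assumes one vote per node and default Corosync votequorum behaviour (quorum = expected_votes // 2 + 1, no QDevice). As I understand Proxmox VE HA, a node that loses quorum while its watchdog is armed stops refreshing the watchdog and resets itself once it expires. If Corosync traffic degrades so badly that no node can see a majority, every node becomes non-quorate and all of them self-fence. The function names below are hypothetical, chosen for illustration only.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Votes needed for quorum under a simple-majority rule (one vote per node assumed)."""
    return expected_votes // 2 + 1


def partition_is_quorate(votes_in_partition: int, expected_votes: int) -> bool:
    """True if a group of nodes that can see each other holds enough votes for quorum."""
    return votes_in_partition >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    expected = 3  # three-node cluster, one vote each
    # Healthy cluster: all three nodes see each other.
    print(partition_is_quorate(3, expected))
    # One node isolated: the remaining pair keeps quorum, the lone node loses it.
    print(partition_is_quorate(2, expected), partition_is_quorate(1, expected))
    # Corosync link degraded everywhere: each node is effectively alone, so none is quorate.
    print(any(partition_is_quorate(1, expected) for _node in range(3)))
```

With three nodes the threshold is 2 votes. Losing a single node is survivable, but a cluster-wide Corosync breakdown leaves every node below quorum at the same time.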

The first Corosync retransmit message appeared at 10:32:18, and that’s when things started to go downhill. By 11:23:52 the problem had clearly worsened, and the machine finally rebooted at 13:37:20. The root connection entries in the log come from Veeam. The switch carrying the Corosync traffic is a MikroTik, and I have also validated the LACP configuration. I've attached the relevant log file.
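One way to quantify the "downhill" timeline in the attached log is to count Corosync retransmit messages per hour. The sketch below is a minimal example with several assumptions. It assumes syslog-style lines ("Mon DD HH:MM:SS host message"). It assumes the Corosync lines contain the text "Retransmit List" and that the excerpt covers a single day. The function name `retransmit_timeline` and the sample lines are hypothetical and are not taken from the real log.

```python
import re
from collections import Counter

# Assumed syslog-style prefix: "Mon DD HH:MM:SS host message".
# Adjust LINE_RE if your journal export uses a different timestamp format.
LINE_RE = re.compile(
    r"^(?P<month>[A-Z][a-z]{2})\s+(?P<day>\d{1,2}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}) (?P<host>\S+) (?P<msg>.*)$"
)
MARKER = "Retransmit List"  # assumed text of Corosync retransmit messages


def retransmit_timeline(lines):
    """Summarise retransmit messages: first/last time seen and per-hour counts.

    Assumes all lines come from the same day, so grouping by hour is unambiguous.
    """
    times = []
    for line in lines:
        m = LINE_RE.match(line)
        if m and MARKER in m.group("msg"):
            times.append(m.group("time"))
    per_hour = Counter(t[:2] for t in times)  # "10:05:30" -> "10"
    return {
        "first": times[0] if times else None,
        "last": times[-1] if times else None,
        "count": len(times),
        "per_hour": dict(sorted(per_hour.items())),
    }


if __name__ == "__main__":
    # Made-up sample lines for illustration only; not from the attached log.
    sample = [
        "Jan  1 09:15:00 pve2 corosync[1234]:   [TOTEM ] Retransmit List: 1a",
        "Jan  1 10:05:30 pve2 corosync[1234]:   [TOTEM ] Retransmit List: 2b 2c",
        "Jan  1 10:45:10 pve2 corosync[1234]:   [TOTEM ] Retransmit List: 3d",
        "Jan  1 10:50:00 pve2 sshd[999]: Accepted publickey for root",
    ]
    print(retransmit_timeline(sample))
    # {'first': '09:15:00', 'last': '10:45:10', 'count': 3, 'per_hour': {'09': 1, '10': 2}}
```

A rising per-hour count would make the gradual degradation before the reboot easier to show than isolated timestamps.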

Thanks for your help!