Hi, I'm new to Proxmox (in a production environment) but not new to virtualization as a whole. We recently migrated from XenServer to Proxmox using the following procedure:

1. Shut down the XenServer guest.
2. In the Proxmox node shell, use `wget` to download the exported XenServer VM.
3. `tar xf [exported Xen VM]`
4. Use `xenmigrate.py` to convert the disk to `.img`.
5. `qm importdisk [VMID] [diskname].img local-zfs`
6. Configure the guest disk (attach it), adjust the boot order, install the guest agent, etc.
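The steps above could be scripted roughly as follows. This is a minimal sketch, not the exact commands we ran: the `.xva` file name, the scratch directory, and the `migrate_vm`/`run` helper names are hypothetical, and the `xenmigrate.py` call is left as a comment because its arguments aren't given here. Setting `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the per-VM migration steps; names and paths are assumptions.
set -euo pipefail

# Print each command instead of executing it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Usage: migrate_vm EXPORT_URL VMID WORKDIR IMAGE
#   EXPORT_URL  where the exported XenServer VM can be fetched (assumed .xva)
#   VMID        an existing Proxmox VM that will receive the disk
#   WORKDIR     scratch directory on the node; deleted at the end
#   IMAGE       path of the .img produced by xenmigrate.py
migrate_vm() {
  local export_url="$1" vmid="$2" workdir="$3" image="$4"

  # Download and unpack the export into a dedicated scratch directory.
  run mkdir -p "$workdir"
  run wget -O "$workdir/export.xva" "$export_url"
  run tar -xf "$workdir/export.xva" -C "$workdir"

  # Convert the unpacked disk to "$image" with xenmigrate.py here
  # (run manually; its arguments are not reproduced in this sketch).

  # Import the converted disk into local-zfs; it shows up as an unused disk of the VM.
  run qm importdisk "$vmid" "$image" local-zfs

  # Delete the scratch copy so it no longer occupies space on the root filesystem.
  run rm -rf "$workdir"
}
```

For example, `DRY_RUN=1 migrate_vm https://xenhost.example/vm.xva 101 /root/xen-migrate /root/xen-migrate/disk.img` prints the five commands without touching anything. Keeping everything under one scratch directory makes the final cleanup a single, easy-to-verify step.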

Because we ran the `wget` and extracted the archive on the node's root storage, I've since noticed that the total size shown in both the local and local-zfs storage summaries has shrunk by a commensurate amount. Once the VMs were imported, tweaked, running, and snapshotted (with off-machine backups as well), I ran `rm -r [Migrated VM folder]` from the node shell, but the local and local-zfs totals remain at their reduced capacity. This is a bit of a killer: the imported VMs add up to a lot of storage, and because they both reduce the total storage available and (after the import into local-zfs) count as used storage, they essentially take up the space twice.
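Some context on why both totals move together: on a default Proxmox ZFS install, `local` is a directory on the root dataset (typically `rpool/ROOT/pve-1`) and `local-zfs` is `rpool/data`, so both draw from the same pool's free space. As far as I understand, the size reported for each is its own used space plus the space still available in the pool, so anything written anywhere in the pool (files on the root filesystem, snapshots, reservations on non-thin zvols) lowers both "totals". The sketch below uses a hypothetical `zfs_space_report` helper and assumes the pool is named `rpool`; it summarizes `zfs list` output to show which datasets hold space and how much of it sits in snapshots or refreservations rather than live data.

```shell
#!/usr/bin/env bash
# Hypothetical helper: summarize ZFS space usage per dataset in GiB.
# Expects the output of (pool name "rpool" is an assumption):
#   zfs list -Hp -r -t filesystem,volume \
#     -o name,used,avail,usedbysnapshots,usedbyrefreservation rpool
# -H gives tab-separated rows without a header; -p gives exact byte values.
zfs_space_report() {
  awk -F '\t' '
    function gib(b) { return sprintf("%.1f", b / 1024 / 1024 / 1024) }
    {
      printf "%-40s used=%sG avail=%sG snapshots=%sG refreserv=%sG\n",
             $1, gib($2), gib($3), gib($4), gib($5)
      # usedbysnapshots/usedbyrefreservation only count each dataset itself,
      # so summing them over a recursive listing does not double count.
      snap += $4
      refres += $5
    }
    END {
      printf "TOTAL held by snapshots: %sG\n", gib(snap)
      printf "TOTAL held by refreservation: %sG\n", gib(refres)
    }
  '
}
```

Usage: pipe the `zfs list` command from the comment into `zfs_space_report`. Large snapshot or refreservation figures would point at space held outside the live data, for example old blocks kept by VM snapshots or full-size reservations on zvols if local-zfs is not set to thin provisioning.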

I'm looking for a way to get the node's root storage usage back down to a reasonable level and to restore the local and local-zfs storages to their full capacity. Unfortunately, I'm starting to think that we've made a grave mistake and need to rebuild the node. Please tell me I'm missing a way to reorganize the storage to fix this.
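To see what still occupies the root filesystem after the `rm -r`, a few read-only checks could help. This is a minimal sketch with a hypothetical `check_root_space` helper and a `DRY_RUN` switch, assuming the default pool name `rpool`. Two common reasons deleted files don't free space are snapshots that still reference the data and processes that still hold the deleted files open.

```shell
#!/usr/bin/env bash
# Hypothetical read-only checks for space still held on the root dataset.
# Assumes a default Proxmox ZFS install with the pool named "rpool".

# Print each command instead of executing it when DRY_RUN=1.
run() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

check_root_space() {
  local pool="${1:-rpool}"
  # Largest top-level directories, staying on the root filesystem only (-x).
  run du -xh --max-depth=1 /
  # Per-dataset breakdown: live data, snapshots, reservations, children.
  run zfs list -o space -r "$pool"
  # Every snapshot in the pool and the space unique to each one.
  run zfs list -t snapshot -o name,used,refer -r "$pool"
  # Files that were deleted but are still held open (link count below 1).
  run lsof +L1
}
```

Running `DRY_RUN=1 check_root_space` first shows exactly what will be executed; none of these commands modify anything.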

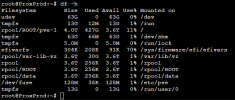

The attached pictures show the local and local-zfs storage summaries and the output of the `du` and `df -h` commands.