Hi,

As suggested here I tried to use a sync task to copy/move all backup groups with all snapshots from the root namespace to some custom namespaces:

Tried it previously with the local IP instead of 127.0.0.1 and the token that owns my backup groups instead of root@pam but its the same problem.

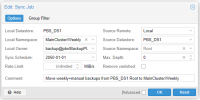

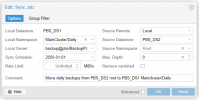

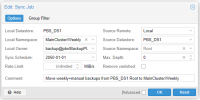

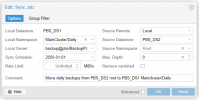

Then I created these sync tasks to run them once manually to migrate my backups to the new namespace or even new datastore+namespace:

"Local Owner" is my token that owns my backup groups in the root namespaces and I want to keep it that way for the custom namespaces too.

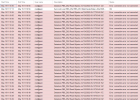

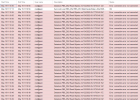

But when I run sync task they finish with "OK" but the task log is spammed with red errors:

I didn't looked at all errors but looks like they all should contain something like this:

To me it looks like the sync was bascially successful but I want to move those backup groups to the new namespace, not just copy them. So next I would need to delete all the backups in my root namespaces and I don`t want to end up with deleted backups that worked fine and a bunch of partial not working syned backups.

But according to what @fabian wrote here...

Is there a way to verify that all synced groups/snapshots are correct and complete before deleting the snapshots from the root namespace?

Looks like a verify task isn't working for that as all snapshots were verified in the root namespace before doing the sync and the synced snapshots are still shown as "all ok" even if I never ran a verify job for that namespace.

As suggested here I tried to use a sync task to copy/move all backup groups with all snapshots from the root namespace to some custom namespaces:

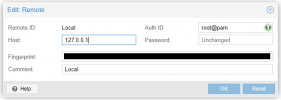

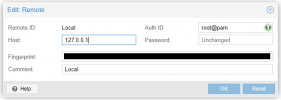

For that I created a local remote like this:to actually "move" (copy) the backup groups and snapshots, you can use a sync job (with the remote pointing to the same PBS instance) or a one-off proxmox-backup-manager pull. when moving from datastore A root namespace to datastore A namespace foo, only the metadata (manifest and indices and so on) will be copied, the chunks will be re-used. when moving from datastore A to datastore B the chunks that are not already contained in B will of course be copied as well, no matter whether namespaces are involved at either side.

Tried it previously with the local IP instead of 127.0.0.1 and the token that owns my backup groups instead of root@pam but its the same problem.

Then I created these sync tasks to run them once manually to migrate my backups to the new namespace or even new datastore+namespace:

"Local Owner" is my token that owns my backup groups in the root namespaces and I want to keep it that way for the custom namespaces too.

But when I run the sync tasks, they finish with "OK", yet the task log is spammed with red errors:

I didn't look at all of the errors, but it looks like they all contain something like this:

```
2022-05-19T11:18:52+02:00: starting new backup reader datastore 'PBS_DS2': "/mnt/pbs2"
2022-05-19T11:18:52+02:00: protocol upgrade done
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/index.json.blob"
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/qemu-server.conf.blob"
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/fw.conf.blob"
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/drive-scsi1.img.fidx"
2022-05-19T11:18:52+02:00: register chunks in 'drive-scsi1.img.fidx' as downloadable.
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/drive-scsi0.img.fidx"
2022-05-19T11:18:52+02:00: register chunks in 'drive-scsi0.img.fidx' as downloadable.
2022-05-19T11:18:52+02:00: GET /download
2022-05-19T11:18:52+02:00: download "/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/client.log.blob"
2022-05-19T11:18:52+02:00: TASK ERROR: connection error: not connected
```

To me it looks like the sync was basically successful, but I want to move those backup groups to the new namespace, not just copy them. So next I would need to delete all the backups in my root namespace, and I don't want to end up with deleted backups that worked fine and a bunch of partial, non-working synced backups.
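Why a "move" within one datastore only copies metadata (as in @fabian's explanation of sync jobs and `proxmox-backup-manager pull`) comes down to content-addressed deduplication: a chunk that the target store already holds never has to be copied again. The toy model below is a sketch under simplifying assumptions: chunks are keyed by their SHA-256 digest, and a snapshot index is modelled as a plain list of digests. It is not the real PBS `.fidx`/`.didx` format, and `chunks_to_copy` is a hypothetical helper.

```python
import hashlib


def chunk_digest(data: bytes) -> str:
    # Content addressing: identical data always yields the identical key.
    return hashlib.sha256(data).hexdigest()


def chunks_to_copy(index: list[str], target_store: set[str]) -> list[str]:
    """Return the digests from `index` that `target_store` does not hold yet.

    Duplicates inside the index are reported only once, because the
    target only needs a single copy of each chunk.
    """
    missing: list[str] = []
    seen: set[str] = set()
    for digest in index:
        if digest not in target_store and digest not in seen:
            missing.append(digest)
            seen.add(digest)
    return missing


# A snapshot made of three chunks, two of them identical.
blocks = [b"boot sector", b"zeros" * 10, b"zeros" * 10]
index = [chunk_digest(b) for b in blocks]

# Datastore A already holds every chunk, because the snapshot lives there.
datastore_a = set(index)
# Datastore B happens to share only the "zeros" chunk.
datastore_b = {chunk_digest(b"zeros" * 10)}

print(len(chunks_to_copy(index, datastore_a)))  # 0: same datastore, metadata only
print(len(chunks_to_copy(index, datastore_b)))  # 1: only the boot-sector chunk
```

In this model, syncing from the root namespace into another namespace of the same datastore adds new index/manifest files that point at chunks which are already present, which is the behaviour the quoted explanation describes.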

But according to what @fabian wrote here:

> these are benign - the connection error is because the sync client drops the connection and the endpoint doesn't yet expect that (should be improved). downloading the log is just best effort - it doesn't have to be there at all (or yet), and unless your host backup scripts upload a log (after the backup) there won't be one

...I can ignore all those "TASK ERROR: connection error: not connected" errors? Doesn't it expect the sync client to drop the connection because it is a local one?
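Before relying on "they all look like this", the saved task logs could be scanned for every `TASK ERROR` line. The sketch below assumes the logs were exported as plain text in the same format as the excerpt above; the script name `classify_errors.py` and the function `classify_errors` are hypothetical. It separates the "connection error: not connected" message described as benign from everything else, and records which file was downloaded just before each benign error, so it is easy to see whether they all follow the best-effort `client.log.blob` download.

```python
import re
import sys
from collections import Counter

# Error message described as benign for syncs from a local remote.
BENIGN = "connection error: not connected"

DOWNLOAD_RE = re.compile(r'download "(?P<path>[^"]+)"')
ERROR_RE = re.compile(r"TASK ERROR: (?P<msg>.+)$")


def classify_errors(lines):
    """Split TASK ERROR lines into the benign message and everything else.

    Returns (benign, other): `benign` counts benign errors keyed by the file
    name downloaded right before them; `other` counts all other messages.
    """
    benign = Counter()
    other = Counter()
    last_file = None
    for line in lines:
        download = DOWNLOAD_RE.search(line)
        if download:
            # Remember only the file name, e.g. "client.log.blob".
            last_file = download.group("path").rsplit("/", 1)[-1]
            continue
        error = ERROR_RE.search(line)
        if not error:
            continue
        message = error.group("msg").strip()
        if message == BENIGN:
            benign[last_file] += 1
        else:
            other[message] += 1
    return benign, other


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python classify_errors.py exported-task.log
    with open(sys.argv[1], encoding="utf-8") as fh:
        benign, other = classify_errors(fh)
    print("benign errors by last downloaded file:", dict(benign))
    if other:
        print("other errors that need a closer look:")
        for message, count in other.most_common():
            print(f"{count:5d}  {message}")
    else:
        print("no other errors found")
```

If `other` comes back empty for every exported log, the only errors present are the connection message discussed above.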

Is there a way to verify that all synced groups/snapshots are correct and complete before deleting the snapshots from the root namespace?

It looks like a verify task won't help here: all snapshots were verified in the root namespace before the sync, and the synced snapshots are already shown as "all ok" even though I never ran a verify job for that namespace.
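One cheap structural check before deleting anything is to compare the snapshot directories of the root namespace with those of the target namespace. This is a sketch under stated assumptions: it assumes the on-disk layout `<datastore>/<type>/<id>/<time>/` visible in the log above (e.g. `/mnt/pbs2/vm/124/2022-05-16T03:45:11Z/`), and that a namespace lives under `<datastore>/ns/<name>/` with the same layout inside. The helpers `list_snapshots` and `compare` are hypothetical. It only checks that the same snapshots and file names exist on both sides; it does not read or validate any chunk data.

```python
from pathlib import Path

# Backup types used as top-level directories inside a namespace.
BACKUP_TYPES = ("vm", "ct", "host")


def list_snapshots(ns_dir: Path) -> dict[str, set[str]]:
    """Map "type/id/time" to the file names inside each snapshot directory."""
    snapshots: dict[str, set[str]] = {}
    for btype in BACKUP_TYPES:
        type_dir = ns_dir / btype
        if not type_dir.is_dir():
            continue
        for group in sorted(type_dir.iterdir()):
            if not group.is_dir():
                continue
            for snap in sorted(group.iterdir()):
                # Only subdirectories of a group are treated as snapshots.
                if snap.is_dir():
                    key = f"{btype}/{group.name}/{snap.name}"
                    snapshots[key] = {f.name for f in snap.iterdir() if f.is_file()}
    return snapshots


def compare(src: Path, dst: Path) -> dict[str, list[str]]:
    """Report snapshots or files that exist in `src` but not in `dst`."""
    src_snaps = list_snapshots(src)
    dst_snaps = list_snapshots(dst)
    report: dict[str, list[str]] = {}
    for key, files in sorted(src_snaps.items()):
        if key not in dst_snaps:
            report[key] = ["<whole snapshot missing>"]
            continue
        missing = sorted(files - dst_snaps[key])
        if missing:
            report[key] = missing
    return report
```

A hypothetical call for a namespace named `foo` on the datastore from the log would be `compare(Path("/mnt/pbs2"), Path("/mnt/pbs2/ns/foo"))`; an empty result means every snapshot and file name from the root namespace also exists in the copy. Since `list_snapshots` only looks at the `vm`, `ct` and `host` directories, the root scan does not descend into `ns/`.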
