Hello,

I am facing memory exhaustion on two of the three Proxmox nodes in the same HA cluster.

I searched extensively but could not find a case matching mine, so below I have gathered the information that was requested in other, similar threads.

To rule out the usual first suspect: I am not using ZFS.

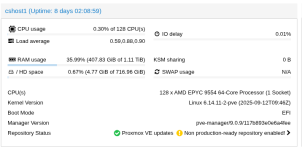

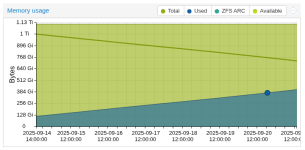

And the summary page is as follows:

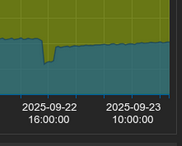



`arcstat` shows:

```bash
root@ovh-px-01:~# arcstat
    time  read  ddread  ddh%  dmread  dmh%  pread  ph%  size     c  avail
16:27:44     0       0     0       0     0      0    0  2.8K  2.0G  -1.2G
```

`arc_summary -s arc` shows:

```bash
root@ovh-px-01:~# arc_summary -s arc

------------------------------------------------------------------------
ZFS Subsystem Report Wed Sep 03 16:32:01 2025
Linux 6.14.11-1-pve 2.3.4-pve1
Machine: ovh-px-01 (x86_64) 2.3.4-pve1

ARC status:
        Total memory size: 62.4 GiB
        Min target size: 3.1 % 2.0 GiB
        Max target size: 19.2 % 12.0 GiB
        Target size (adaptive): < 0.1 % 2.0 GiB
        Current size: < 0.1 % 2.8 KiB
        Free memory size: 860.2 MiB
        Available memory size: -1441892224 Bytes

ARC structural breakdown (current size): 2.8 KiB
        Compressed size: 0.0 % 0 Bytes
        Overhead size: 0.0 % 0 Bytes
        Bonus size: 0.0 % 0 Bytes
        Dnode size: 0.0 % 0 Bytes
        Dbuf size: 0.0 % 0 Bytes
        Header size: 100.0 % 2.8 KiB
        L2 header size: 0.0 % 0 Bytes
        ABD chunk waste size: 0.0 % 0 Bytes

ARC types breakdown (compressed + overhead): 0 Bytes
        Data size: n/a 0 Bytes
        Metadata size: n/a 0 Bytes

ARC states breakdown (compressed + overhead): 0 Bytes
        Anonymous data size: n/a 0 Bytes
        Anonymous metadata size: n/a 0 Bytes
        MFU data target: 37.5 % 0 Bytes
        MFU data size: n/a 0 Bytes
        MFU evictable data size: n/a 0 Bytes
        MFU ghost data size: 0 Bytes
        MFU metadata target: 12.5 % 0 Bytes
        MFU metadata size: n/a 0 Bytes
        MFU evictable metadata size: n/a 0 Bytes
        MFU ghost metadata size: 0 Bytes
        MRU data target: 37.5 % 0 Bytes
        MRU data size: n/a 0 Bytes
        MRU evictable data size: n/a 0 Bytes
        MRU ghost data size: 0 Bytes
        MRU metadata target: 12.5 % 0 Bytes
        MRU metadata size: n/a 0 Bytes
        MRU evictable metadata size: n/a 0 Bytes
        MRU ghost metadata size: 0 Bytes
        Uncached data size: n/a 0 Bytes
        Uncached metadata size: n/a 0 Bytes

ARC hash breakdown:
        Elements: 0
        Collisions: 0
        Chain max: 0
        Chains: 0

ARC misc:
        Uncompressed size: n/a 0 Bytes
        Memory throttles: 0
        Memory direct reclaims: 0
        Memory indirect reclaims: 0
        Deleted: 0
        Mutex misses: 0
        Eviction skips: 0
        Eviction skips due to L2 writes: 0
        L2 cached evictions: 0 Bytes
        L2 eligible evictions: 0 Bytes
        L2 eligible MFU evictions: n/a 0 Bytes
        L2 eligible MRU evictions: n/a 0 Bytes
        L2 ineligible evictions: 0 Bytes
```

`free -h` shows:

```bash
root@ovh-px-01:~# free -h
               total        used        free      shared  buff/cache   available
Mem:            62Gi        61Gi       789Mi        44Mi       534Mi       652Mi
Swap:          2.0Gi       2.0Gi       1.5Mi
```

`top -co%MEM` shows:


Here is the second affected node:

And this is the status page of the unaffected node:

`echo 1 > /proc/sys/vm/drop_caches` does virtually nothing.

There are very few things running on this cluster:

Total memory usage of all VMs is barely 8 GB on all nodes combined.
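To put that 8 GB figure side by side with what the kernel reports as in use on a single node, a minimal sketch like the one below could be run on each node. It assumes that Proxmox VM processes appear in `ps` under the command name `kvm`; the helper names `vm_rss_kib` and `meminfo_kib` are hypothetical. RSS counts shared pages once per process, so the VM total is only an approximation.

```shell
#!/usr/bin/env bash
# Hypothetical helper script: compare the resident memory of all VM processes
# with the memory the kernel reports as in use on this node.
# Assumption: Proxmox VM processes have the command name "kvm" in ps.

# Sum the RSS (in KiB) of every process named "kvm"; prints 0 if there are none.
vm_rss_kib() {
    ps -C kvm -o rss= | awk '{ s += $1 } END { print s + 0 }'
}

# Print one field (in KiB) from /proc/meminfo, e.g. MemTotal or MemAvailable.
meminfo_kib() {
    awk -v key="$1:" '$1 == key { print $2 }' /proc/meminfo
}

# "In use" here is simply MemTotal minus MemAvailable.
used_kib=$(( $(meminfo_kib MemTotal) - $(meminfo_kib MemAvailable) ))
vm_kib=$(vm_rss_kib)

printf 'In use (MemTotal - MemAvailable): %d MiB\n' $(( used_kib / 1024 ))
printf 'RSS of all kvm processes:         %d MiB\n' $(( vm_kib / 1024 ))
printf 'Not accounted for by VMs:         %d MiB\n' $(( (used_kib - vm_kib) / 1024 ))
```

If the last line stays in the tens of gigabytes on the affected nodes, the memory is held by something other than the VM processes (host processes or the kernel itself).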

Please help me identify what is causing this memory leak.
