I am running the latest pfSense (2.4.5-p1).

I have a gigabit fiber connection to the internet and my NICs are 1 Gb. When I install Suricata and turn it on, it reduces my speed to 280 Mb/s. That is a 72% drop from the nominal 1 Gb/s.

I have turned off the detection rules and changed the modes, and none of it makes much difference. As soon as I disable Suricata, my speed goes back to 950/950 Mb/s.
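For reference, here is a quick sketch of the arithmetic behind the drop figure. The function name `percent_drop` is just something I made up for illustration. It compares the 280 Mb/s result against both the nominal 1000 Mb/s line rate and the 950 Mb/s I actually measure with Suricata disabled.

```python
def percent_drop(baseline_mbps: float, measured_mbps: float) -> float:
    """Return the throughput loss as a percentage of the baseline."""
    return (baseline_mbps - measured_mbps) / baseline_mbps * 100


# Figures taken from the post: 280 Mb/s with Suricata enabled.
print(f"vs nominal 1000 Mb/s: {percent_drop(1000, 280):.1f}%")
print(f"vs measured 950 Mb/s: {percent_drop(950, 280):.1f}%")
```

Against the nominal line rate the loss is 72.0%; against the measured 950 Mb/s baseline it is about 70.5%.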

When I ran pfSense in Hyper-V, I was getting the desired speeds. I really like Proxmox, but I am considering getting another server to run pfSense on bare metal so that I can run Suricata.

Hardware Checksum Offloading, Hardware TCP Segmentation Offloading, and Hardware Large Receive Offloading are all disabled in pfSense.

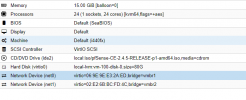

I've included a screenshot of my configuration. The VM has plenty of resources. Please help!
