Hi everyone, I'm hoping someone can point me in the right direction.

I had been using a ZFS-based disk image for roughly the last two years. After upgrading to Proxmox v7.1 and rebooting once or twice, I was greeted with a boot error in a VM complaining that it could not mount the data disk referenced in fstab.

The ZFS pool is built on a single 500 GB HDD, so no RAID configuration is used.

Unfortunately, I can no longer find the web page or tutorial I used to set up the HDD and ZFS pool, which makes this problem hard for me to diagnose.

I've commented out the fstab entry for the data disk so that the VM can boot. Unfortunately, I have a few Docker containers that all use that mount point as their data location.

My concern is that the data has been lost, or that any attempt to restore the working configuration will overwrite the existing data. For that reason I have been hesitant to run anything other than read-only commands while trying to find the root cause of the problem.

For reference, the affected partition is `/dev/sdb1`, the ZFS pool is `ZFS1`, and the VM references the disk image as `vm-101-disk-0`.

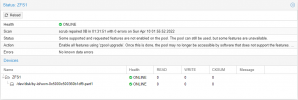

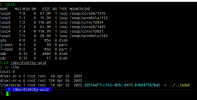

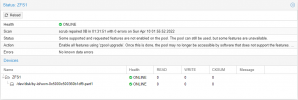

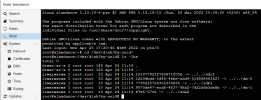

From my single Proxmox node, I see the following:

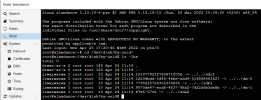

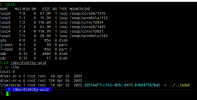

And finally, this is what I see from within the VM:

Any comments on the health of the disk image, or suggestions for commands to discover, mount, or dump it, would be greatly appreciated. Thanks for reading this far!