Hi all,

Just wanted to share a success case that may be useful for the Proxmox team and the community.

Hardware:

• HPE DL385 Gen11

• 2× NVIDIA H200 NVL (NVLink4, 18 links per GPU)

Proxmox setup:

• Proxmox VE 8.4

• ZFS root

• Standard VFIO passthrough (q35 + OVMF)

Guest OS:

• Ubuntu 22.04

• Ubuntu 24.04

• NVIDIA driver 580.95.05

• CUDA 13.0

Result:

Both GPUs passed through successfully.

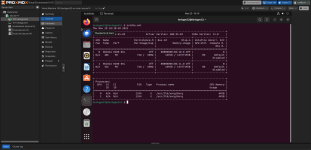

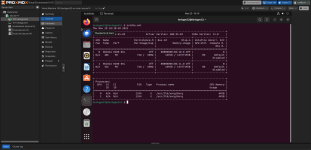

`nvidia-smi nvlink --status` shows all 18 NVLink links active on each GPU (26.562 GB/s each), meaning full NVLink (NV18) is functional inside a VM.
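For anyone who wants to script this check instead of reading the output by eye, here is a minimal sketch. It assumes the `nvidia-smi nvlink --status` output has one `GPU N: ...` header per GPU followed by `Link N: X GB/s` lines, which is what I see on my driver version; other versions may format it differently. The helper names (`parse_nvlink_status`, `all_links_active`, `synthetic_status`) are hypothetical, and the sample text is synthetic, built from the numbers in this post rather than copied from a real log.

```python
import re

# Assumed line shapes in `nvidia-smi nvlink --status` output (may vary by driver).
GPU_RE = re.compile(r"^GPU\s+(\d+):")
LINK_RE = re.compile(r"Link\s+(\d+):\s+([\d.]+)\s+GB/s")


def parse_nvlink_status(text):
    """Return {gpu_index: [per-link GB/s, ...]} for links that report a rate."""
    links = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        gpu = GPU_RE.match(line)
        if gpu:
            current = int(gpu.group(1))
            links[current] = []
            continue
        link = LINK_RE.search(line)
        if link and current is not None:
            links[current].append(float(link.group(2)))
    return links


def all_links_active(links, expected_links=18):
    """True if every GPU reports exactly `expected_links` links with a rate."""
    return bool(links) and all(len(r) == expected_links for r in links.values())


def synthetic_status(gpus=2, links=18, rate=26.562):
    """Build synthetic text in the assumed format (NOT a real log)."""
    lines = []
    for g in range(gpus):
        lines.append(f"GPU {g}: NVIDIA H200 NVL (UUID: GPU-synthetic-{g})")
        lines.extend(f"\t Link {n}: {rate} GB/s" for n in range(links))
    return "\n".join(lines)


if __name__ == "__main__":
    parsed = parse_nvlink_status(synthetic_status())
    print("all links active:", all_links_active(parsed))
```

On a real guest you would feed it the captured output of the command (for example via `subprocess.run(["nvidia-smi", "nvlink", "--status"], capture_output=True, text=True).stdout`) instead of the synthetic text.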

Measured aggregate NVLink bandwidth is ≈ 478 GB/s per GPU, consistent with 18 links × 26.562 GB/s and matching bare-metal H200 NVL performance.

NUMA affinity and P2P memory access are also working correctly.
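As a quick sanity check on that number, the per-link rate times the link count lands on the same figure. This is just a sketch of the arithmetic using the values from this post; the function name `aggregate_bandwidth` is made up for illustration.

```python
LINKS_PER_GPU = 18      # links reported active per GPU
PER_LINK_GBPS = 26.562  # per-link rate reported by nvidia-smi, in GB/s


def aggregate_bandwidth(links, per_link_gbps):
    """Sum of per-link rates for one GPU, in GB/s."""
    return links * per_link_gbps


if __name__ == "__main__":
    total = aggregate_bandwidth(LINKS_PER_GPU, PER_LINK_GBPS)
    print(f"{total:.3f} GB/s per GPU")  # prints "478.116 GB/s per GPU"
```

So ≈ 478 GB/s is what you would expect when all 18 links are up at the reported rate.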

Why I’m sharing:

I could not find this setup documented anywhere, and Proxmox does not officially claim NVLink support in VMs, so this result may help others working with HPC GPUs or modern NVIDIA architectures.

Happy to provide sanitized logs or additional validation info if useful for future documentation.
