I am running PBS as a VM on the same Proxmox host where I back up LXC containers and VMs.

Backups usually work, but sometimes they fail with errors like:

```
catalog upload error - pipelined request failed: connection closed because of a broken pipe
Error: error at "usr/include/xercesc/framework/psvi"
Caused by: sending on a closed channel

--or--

catalog upload error - channel closed
Error: pipelined request failed: connection closed because of a broken pipe
```

This happens intermittently and usually only for certain containers, while other containers back up successfully before and after.

Additionally, PBS is accessed through an Nginx reverse proxy running in a separate LXC container on the same Proxmox host.

In the output of `journalctl -f -b`, I constantly see the following message:

```
Aug 21 17:31:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:54132] failed to check for TLS handshake: couldn't peek into incoming TCP stream
Aug 21 17:32:28 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:33634] failed to check for TLS handshake: couldn't peek into incoming TCP stream
Aug 21 17:32:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:43834] failed to check for TLS handshake: couldn't peek into incoming TCP stream
Aug 21 17:33:28 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:34628] failed to check for TLS handshake: couldn't peek into incoming TCP stream
Aug 21 17:33:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:57200] failed to check for TLS handshake: couldn't peek into incoming TCP stream
```

Nginx config:

```nginx
server {
    # Redirect plain HTTP to HTTPS
    listen 80;
    listen [::]:80;
    server_name domain.com;
    rewrite ^(.*) https://$host$1 permanent;
}

server {
    listen 443 ssl;
    listen [::]:443 ssl;
    server_name domain.com;

    ssl_certificate /etc/letsencrypt/live/domain.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/domain.com/privkey.pem;

    proxy_redirect off;

    location / {
        # HTTP/1.1 plus Upgrade/Connection headers let upgrade requests pass through to PBS
        proxy_http_version 1.1;
        proxy_set_header Host $host;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
        proxy_pass https://172.16.100.62:8007;

        # Do not buffer responses from PBS; 0 disables the request body size limit
        proxy_buffering off;
        client_max_body_size 0;

        # One-hour timeouts for long-running connections
        proxy_connect_timeout 3600s;
        proxy_read_timeout 3600s;
        proxy_send_timeout 3600s;
        send_timeout 3600s;
    }
}
```

Environment:

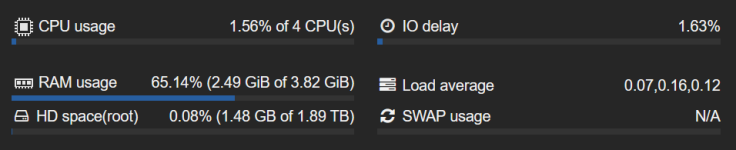

- PBS runs inside a VM on the Proxmox host

- Reverse proxy: Nginx in a separate LXC container

- 172.16.100.62: PBS VM

- 172.16.100.65: LXC container with Nginx
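Since 172.16.100.65 is the Nginx LXC, every TLS handshake message in the journal comes from the proxy host. A quick way to check whether those entries follow a fixed timer is to measure the spacing between them. The sketch below assumes the lines are pasted verbatim from `journalctl`; the helper name `intervals` and the variable `JOURNAL` are hypothetical. Evenly spaced entries from one IP with a new source port each time would be consistent with something on the Nginx LXC opening and closing connections on a schedule (for example a health check or monitoring probe) rather than with backup traffic, though this alone does not prove it.

```python
import re
from datetime import datetime

# Matches the proxmox-backup-proxy message from the journal excerpt and
# captures the timestamp, the client IPv4 address and the source port.
PATTERN = re.compile(
    r"^(\w{3} +\d+ \d{2}:\d{2}:\d{2}) \S+ proxmox-backup-proxy\[\d+\]: "
    r"\[\[::ffff:([\d.]+)\]:(\d+)\] failed to check for TLS handshake"
)


def intervals(lines):
    """Return (set of client IPs, seconds between consecutive matching entries)."""
    stamps, clients = [], set()
    for line in lines:
        m = PATTERN.match(line)
        if not m:
            continue  # ignore unrelated journal lines
        # Syslog-style timestamps carry no year; fine for deltas within one day
        stamps.append(datetime.strptime(m.group(1), "%b %d %H:%M:%S"))
        clients.add(m.group(2))
    deltas = [int((b - a).total_seconds()) for a, b in zip(stamps, stamps[1:])]
    return clients, deltas


JOURNAL = [
    "Aug 21 17:31:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:54132] failed to check for TLS handshake: couldn't peek into incoming TCP stream",
    "Aug 21 17:32:28 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:33634] failed to check for TLS handshake: couldn't peek into incoming TCP stream",
    "Aug 21 17:32:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:43834] failed to check for TLS handshake: couldn't peek into incoming TCP stream",
    "Aug 21 17:33:28 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:34628] failed to check for TLS handshake: couldn't peek into incoming TCP stream",
    "Aug 21 17:33:58 pbs proxmox-backup-proxy[826]: [[::ffff:172.16.100.65]:57200] failed to check for TLS handshake: couldn't peek into incoming TCP stream",
]

if __name__ == "__main__":
    clients, deltas = intervals(JOURNAL)
    print(clients, deltas)  # {'172.16.100.65'} [30, 30, 30, 30]
```

For the five entries above this reports a single client and a constant 30-second spacing.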

```
proxmox-backup 4.0.0 running kernel: 6.14.8-2-pve
proxmox-backup-server 4.0.11-2 running version: 4.0.11
proxmox-kernel-helper 9.0.3
proxmox-kernel-6.14.8-2-pve-signed 6.14.8-2
proxmox-kernel-6.14 6.14.8-2
ifupdown2 3.3.0-1+pmx9
libjs-extjs 7.0.0-5
proxmox-backup-docs 4.0.11-2
proxmox-backup-client 4.0.11-1
proxmox-mail-forward 1.0.2
proxmox-mini-journalreader 1.6
proxmox-offline-mirror-helper 0.7.0
proxmox-widget-toolkit 5.0.5
pve-xtermjs 5.5.0-2
smartmontools 7.4-pve1
zfsutils-linux 2.3.3-pve1
```

Questions:

- Could the repeated TLS handshake errors in the PBS journal be caused by my Nginx reverse proxy setup?

- Are the intermittent pxar backup failures (broken pipe, catalog upload error) related to the proxy, or are they more likely caused by PBS itself running inside the VM?

- What steps can I take to troubleshoot or handle these errors?
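To test whether the journal message is simply a TCP connection that closes before any TLS data arrives, one option is to open and immediately close a plain TCP connection to port 8007 from the Nginx LXC while watching `journalctl -f` on PBS. This is a minimal sketch under that assumption: it is not confirmed that this is exactly what triggers "couldn't peek into incoming TCP stream", and `probe` is a hypothetical helper name. If one run produces one new journal entry, the periodic entries are likely the same kind of connect-and-close check and unrelated to the failing backups.

```python
import socket
import sys


def probe(host: str, port: int = 8007, timeout: float = 5.0) -> None:
    """Open a TCP connection and close it without sending any bytes.

    Assumption: PBS logs 'failed to check for TLS handshake' when a client
    closes the connection before sending a TLS ClientHello.
    """
    with socket.create_connection((host, port), timeout=timeout):
        pass  # close immediately: no TLS ClientHello, no HTTP request


if __name__ == "__main__":
    # 172.16.100.62 is the PBS VM from the environment list
    target = sys.argv[1] if len(sys.argv) > 1 else "172.16.100.62"
    probe(target)
    print(f"connected to {target}:8007 and closed without sending data")
```

Running it once from 172.16.100.65 and comparing the timestamp with the next journal entry on PBS should show whether the two line up.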

Thanks for any help
